What is Agentic RAG?

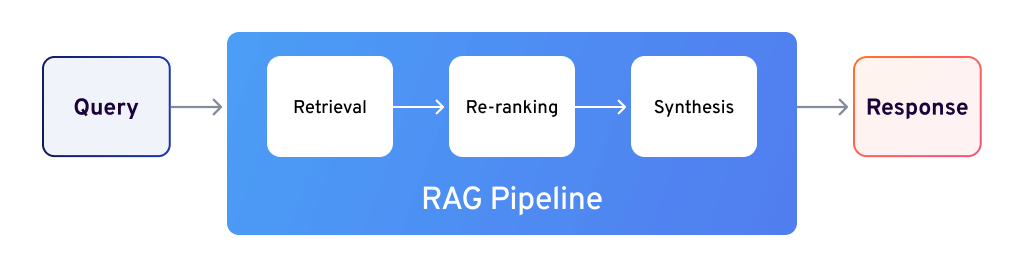

AI systems are powerful, but they can hallucinate, generating incorrect or misleading information. Retrieval-Augmented Generation (RAG) improves language model accuracy by pulling data from external sources such as documents and databases, so answers are grounded in that data. Traditional RAG works well for simple queries but struggles with complex tasks that require multi-step reasoning, tool usage, or querying across multiple sources.

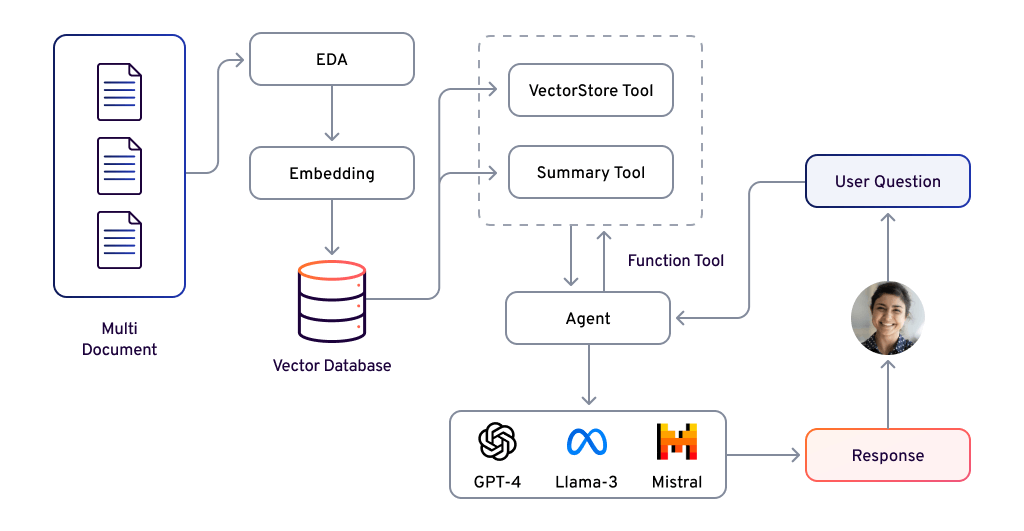

Agentic RAG, sometimes called a RAG agent, takes this further by introducing AI agents into the RAG pipeline. These agents act as autonomous decision-makers that not only retrieve information but also plan actions, coordinate tools, and refine answers. This turns the LLM from a passive responder into an active problem solver.

With Agentic RAG, systems can compare data across documents, summarize long content, or generate follow-up questions dynamically. In short, Agentic RAG brings structure, reasoning, and deeper context to retrieval-based AI, making it well suited to complex real-world applications.

Fundamentals of Agentic RAG

Agentic RAG describes an AI agent-based implementation of RAG. Before we proceed, let’s quickly recap the fundamental concepts of RAG and AI agents.

What is RAG?

Retrieval-Augmented Generation (RAG) is an AI framework designed to improve the output of large language models by combining external information with the model's internal knowledge during answer generation.

At its core, RAG operates in two phases. First, it retrieves a set of relevant documents or passages from a large collection using a retrieval system. Retrieval mechanisms range from keyword-based search, such as Elasticsearch's default BM25 ranking, to semantic search over dense vector embeddings stored in a vector database; both make storing and retrieving information efficient.

For domain-specific AI agents, combining domain-specific LLM knowledge with external knowledge sources is key to improving RAG's retrieval accuracy, especially when adapting it to a variety of tasks and to highly specific questions.

Once the relevant information is retrieved, RAG passes this data, which can include proprietary content such as emails, corporate documents, and customer feedback, to the language model to generate a response. This grounding lets RAG produce more accurate, contextually relevant answers tailored to specific organizational needs.
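To make the two phases concrete, here is a minimal Python sketch under simplifying assumptions: a toy bag-of-words vector stands in for a real embedding model, a plain list stands in for the vector database, and `build_prompt` only assembles the augmented prompt that would be sent to an LLM (no model is called). All function names are illustrative.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': term counts. A real system would use a neural embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Phase 1: rank documents by similarity to the query and keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query: str, context: list[str]) -> str:
    """Phase 2 (input side): ground the LLM by placing retrieved passages in the prompt."""
    passages = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return f"Answer using only the context below.\nContext:\n{passages}\nQuestion: {query}"

docs = [
    "Refunds are issued within 14 days of a returned item being received.",
    "Our office is closed on public holidays.",
    "Shipping to Canada takes 5 to 7 business days.",
]
question = "How many days until I get a refund?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

With these sample documents the refund passage ranks first because it shares the most terms with the question. Note that this toy tokenizer treats "refund" and "refunds" as different words; a real embedding model would not.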

What are AI Agents in Agentic AI Systems

AI agents are autonomous software programs that perceive their environment through sensors and act on it using actuators. They can operate with little or no human intervention, executing tasks with technologies such as machine learning, Natural Language Processing (NLP), large language models (LLMs), or Foundation Models (FMs).

Agentic AI systems are well suited to complex problems: they decompose queries, plan task sequences, and use an AI reasoning engine, typically an LLM, to decide each next step. They can handle ambiguous questions and use external tools such as APIs, programs, and web search to carry out tasks.

Agentic RAG vs Traditional RAG Systems

Moving from traditional Retrieval-Augmented Generation systems to Agentic RAG systems is a big step in AI-driven information retrieval and decision-making. Traditional RAG systems rely on static processes such as manual prompt engineering, rigid retrieval strategies, and predefined rules for response generation.

Agentic RAG systems add dynamic adaptability, using AI agents to optimize retrieval, perform multi-step reasoning, and make context-aware decisions. This can reduce overhead, increase efficiency, and enable real-time adaptation, addressing many of the limitations of traditional approaches.

| Feature | Traditional RAG Systems | Agentic RAG Systems |
| --- | --- | --- |
| Prompt engineering | Relies heavily on manual prompt engineering and optimization techniques. | Can dynamically adjust prompts based on context and goals, reducing reliance on manual prompt engineering. |
| Static nature | Limited contextual awareness and static retrieval decision-making. | Considers conversation history and adapts retrieval strategies based on context. |
| Overhead | Unoptimized retrievals and additional text generation can lead to unnecessary costs. | Can optimize retrievals and minimize unnecessary text generation, reducing costs and improving efficiency. |
| Multi-step complexity | Requires additional classifiers and models for multi-step reasoning and tool usage. | Handles multi-step reasoning and tool usage itself, eliminating the need for separate classifiers and models. |
| Decision-making | Static rules govern retrieval and response generation. | Decides when and where to retrieve information, evaluates retrieved data quality, and performs post-generation checks on responses. |
| Retrieval process | Relies solely on the initial query to retrieve relevant documents. | Performs actions in the environment to gather additional information before or during retrieval. |
| Adaptability | Limited ability to adapt to changing situations or new information. | Can adjust its approach based on feedback and real-time observations. |

RAG agents are more adaptable because they dynamically optimize prompts and retrieval strategies based on context, whereas traditional RAG systems rely on static processes and manual optimization. Agentic RAG systems also handle multi-step reasoning and tool integration themselves, without the auxiliary classifiers and models that traditional RAG systems need.
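The "Decision-making" row can be illustrated with a small sketch of an agent that decides whether and where to retrieve, then checks its own draft before answering. Everything here is hypothetical: `choose_source` uses keyword rules where an agent would ask an LLM, `echo_generator` stands in for the model call, and `is_grounded` is a deliberately crude post-generation check that every number in the answer also appears in the retrieved context.

```python
import re
from typing import Callable, Optional

# Hypothetical data sources the agent can choose between.
SOURCES = {
    "billing": ["Invoices are emailed on the 1st of each month."],
    "shipping": ["Standard shipping takes 5 business days."],
}

def choose_source(query: str) -> Optional[str]:
    """Decide whether and where to retrieve (keyword stand-in for an LLM decision)."""
    q = query.lower()
    if re.search(r"\b(invoice|bill|charge)", q):
        return "billing"
    if re.search(r"\b(ship|deliver)", q):
        return "shipping"
    return None  # e.g. small talk: answer without retrieval

def is_grounded(answer: str, context: list[str]) -> bool:
    """Post-generation check: every number in the answer must appear in the context."""
    joined = " ".join(context)
    return all(num in joined for num in re.findall(r"\d+", answer))

def agentic_rag(query: str, generate: Callable[[str, list[str]], str]) -> str:
    source = choose_source(query)
    context = SOURCES[source] if source else []
    answer = generate(query, context)
    # Reject drafts that cite figures the retrieved context does not support.
    return answer if is_grounded(answer, context) else "I could not verify that answer."

def echo_generator(query: str, context: list[str]) -> str:
    """Stub for an LLM call: returns the first passage, or a canned reply."""
    return context[0] if context else "Happy to help!"

print(agentic_rag("When will my order ship?", echo_generator))
```

A traditional pipeline, by contrast, would run the same retrieval for every query, including small talk, and return whatever the model produced without a verification step.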

Deep Dive into the Components of Agentic RAG

Key Agents in the Agentic RAG Pipeline

1. Routing Agents

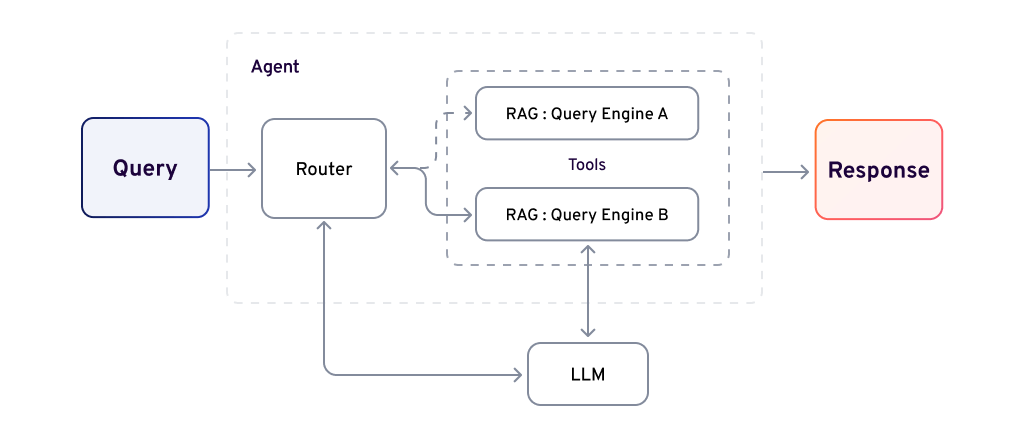

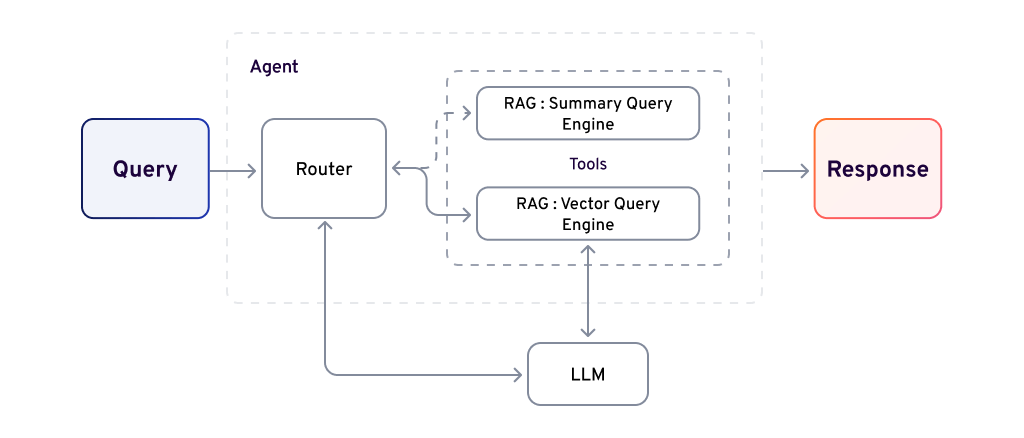

The routing agent uses a large language model (LLM) to analyze the input query and select the most appropriate downstream RAG pipeline. This is agentic reasoning in its simplest form: the LLM evaluates the query and makes an informed choice of pipeline.

Another routing approach involves selecting between summarization and question-answering RAG pipelines. The agent analyzes the input query to determine whether to route it to the summarization engine or the vector query engine, each configured as a specialized tool.

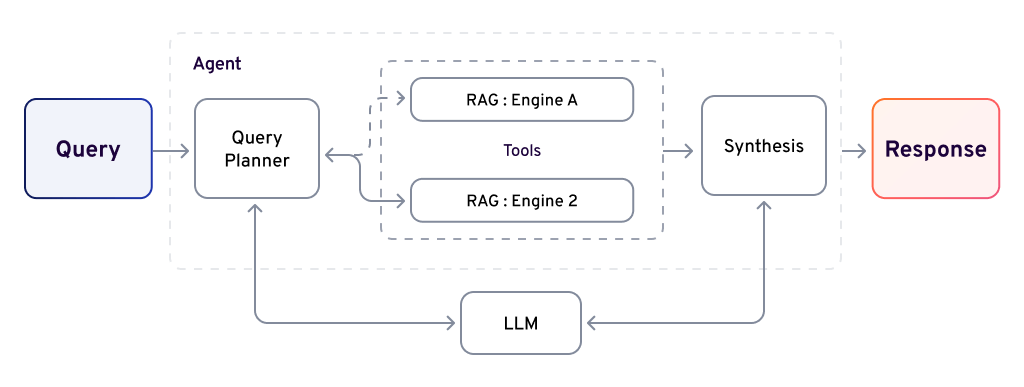
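A routing agent reduces to "classify the query, then dispatch to a tool." In the sketch below, `classify` is a keyword heuristic standing in for the LLM call described above, and both engines are hypothetical stubs; in practice each would be a full RAG pipeline registered as a tool, and the tool descriptions would be given to the LLM so it can choose.

```python
from typing import Callable

def summarization_engine(query: str) -> str:
    return f"[summary pipeline] {query}"  # stub for a summarization RAG pipeline

def vector_query_engine(query: str) -> str:
    return f"[vector QA pipeline] {query}"  # stub for a question-answering RAG pipeline

# Each tool pairs a description (what an LLM router would read) with its pipeline.
TOOLS: dict[str, tuple[str, Callable[[str], str]]] = {
    "summarize": ("Useful for summarizing whole documents.", summarization_engine),
    "qa": ("Useful for answering specific factual questions.", vector_query_engine),
}

def classify(query: str) -> str:
    """Stand-in for the LLM routing decision: return the name of one tool."""
    cues = ("summarize", "summary", "overview", "tl;dr")
    return "summarize" if any(c in query.lower() for c in cues) else "qa"

def route(query: str) -> str:
    _, pipeline = TOOLS[classify(query)]
    return pipeline(query)

print(route("Give me an overview of the Q3 report"))
```

Keeping the routing decision separate from the pipelines means a keyword rule can later be swapped for an LLM classifier without touching either engine.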

2. Query Planning Agent

The query planning agent breaks a complex query into smaller, parallelizable subqueries and distributes them across RAG pipelines tailored to different data sources, potentially with a separate agent handling each pipeline. The responses from these pipelines are then synthesized into a single, cohesive answer.

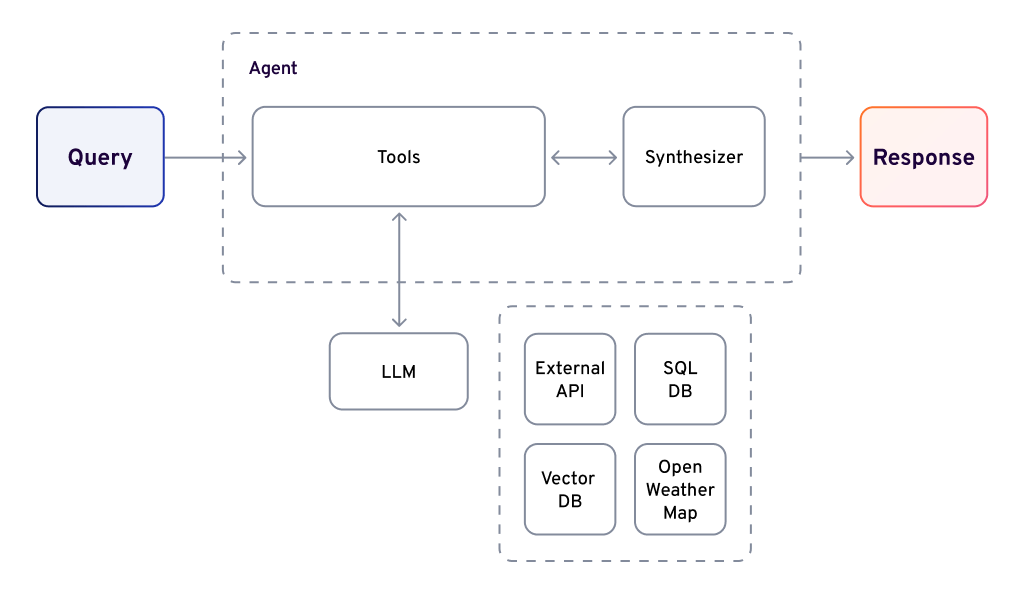
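The plan, dispatch, and synthesize cycle can be sketched with the standard library's thread pool. As an assumption for illustration, `plan` simply splits on " and "; a real planner would ask an LLM to produce the subqueries and to write the final synthesis. `PIPELINES` and `pick_pipeline` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-source RAG pipelines (stubs that tag the subquery they received).
PIPELINES = {
    "sales": lambda q: f"sales data for '{q}'",
    "hr": lambda q: f"HR data for '{q}'",
}

def plan(query: str) -> list[str]:
    """Split a compound question into subqueries (an LLM would do this in practice)."""
    return [part.strip(" ?") for part in query.split(" and ") if part.strip(" ?")]

def pick_pipeline(subquery: str) -> str:
    """Choose the data source for one subquery (hypothetical rule)."""
    return "hr" if "headcount" in subquery.lower() else "sales"

def answer(query: str) -> str:
    subqueries = plan(query)
    # Subqueries are independent, so they can run in parallel; map() keeps their order.
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(lambda sq: PIPELINES[pick_pipeline(sq)](sq), subqueries))
    # Synthesis step: a simple join here; an LLM would merge these into prose.
    return "; ".join(partials)

print(answer("What was Q3 revenue and what is the current headcount?"))
```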

3. Tool Use Agent

In a standard RAG setup, a query retrieves the documents that are most semantically similar to it. Some scenarios, however, require supplementary data from external sources such as APIs, SQL databases, or applications that expose APIs. This additional data provides context that refines the input query before the LLM processes it. In such situations, the agent can leverage a RAG tool specification.

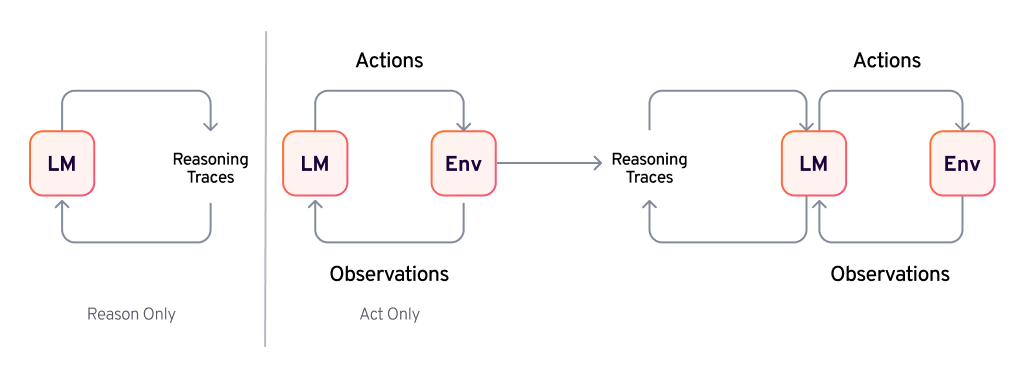
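One way to picture this: before calling the LLM, the agent queries a SQL database and attaches the result to the prompt as extra context. This is a framework-neutral sketch, not any library's tool-specification API; it assumes an in-memory SQLite table named `orders` and hypothetical helpers `order_status_tool` and `augment_query`. The `?` placeholder keeps user input out of the SQL text.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    # Hypothetical operational data that the document index does not contain.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, status TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)",
                     [("alice", "shipped"), ("bob", "processing")])
    return conn

def order_status_tool(conn: sqlite3.Connection, customer: str) -> str:
    # Tool: parameterized lookup, so user text is never spliced into SQL.
    row = conn.execute("SELECT status FROM orders WHERE customer = ?", (customer,)).fetchone()
    return row[0] if row else "unknown"

def augment_query(conn: sqlite3.Connection, customer: str, query: str, docs: list[str]) -> str:
    # Combine retrieved documents with live tool output before the LLM sees the query.
    status = order_status_tool(conn, customer)
    context = "\n".join(docs + [f"Order status for {customer}: {status}"])
    return f"Context:\n{context}\nQuestion: {query}"

conn = make_db()
print(augment_query(conn, "alice", "Where is my order?", ["Shipped orders arrive in 3 to 5 days."]))
```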

4. ReAct Agent

The next level combines reasoning with iterative actions to handle more complex queries. This approach, known as ReAct (Reasoning and Acting), integrates routing, query planning, and tool usage into a single workflow. A ReAct agent is designed to manage sequential, multi-part queries while maintaining context in memory.

Here’s how the process works:

- Processing the Query: The agent analyzes the user’s input to determine whether a tool is needed and gathers the required information.

- Using the Tool: It invokes the appropriate tool with the inputs and stores the output.

- Evaluating the Results: The agent reviews the history of tool calls, including inputs and outputs, to determine the next step.

- Iterative Steps: This process repeats until all tasks are completed and the agent responds to the user.

This method allows the agent to handle queries requiring multiple steps and actions efficiently.

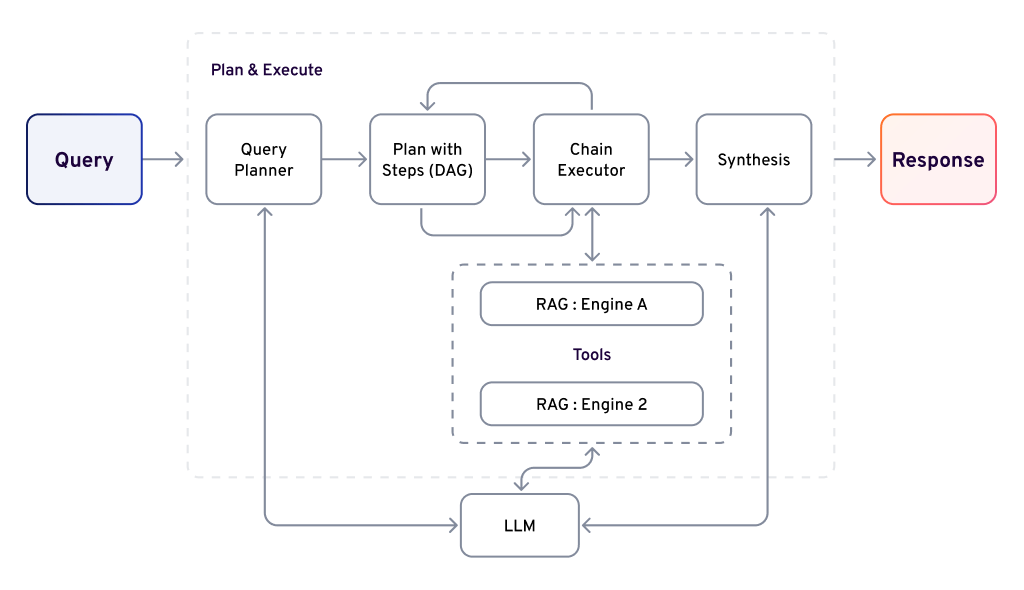
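The four steps above map onto a short loop. This is a sketch, not any framework's implementation: `policy` is a hypothetical rule-based stand-in for the LLM, which in a real ReAct agent would read the question plus the history of steps and emit either a tool call or a final answer. The catalog prices and both tools are toy examples.

```python
from typing import Callable

PRICES = {"widget": 3, "gadget": 4}  # hypothetical catalog data

# Toy tools: each takes a string input and returns a string observation.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_price": lambda item: str(PRICES.get(item, 0)),
    "add": lambda args: str(sum(int(x) for x in args.split(",") if x)),
}

Step = tuple[str, str, str]  # (action, input, observation)

def policy(question: str, history: list[Step]) -> tuple[str, str]:
    """Stand-in for the LLM's reasoning: choose the next (action, input)."""
    looked_up = {arg for action, arg, _ in history if action == "get_price"}
    for item in PRICES:
        if item in question and item not in looked_up:
            return "get_price", item
    if not any(action == "add" for action, _, _ in history):
        prices = [obs for action, _, obs in history if action == "get_price"]
        return "add", ",".join(prices)
    return "finish", f"Total: {history[-1][2]}"

def react(question: str, max_steps: int = 5) -> str:
    history: list[Step] = []  # memory of every tool call and its output
    for _ in range(max_steps):
        action, arg = policy(question, history)   # 1. process the query / reason
        if action == "finish":
            return arg                            # 4. respond to the user
        observation = TOOLS[action](arg)          # 2. use the tool
        history.append((action, arg, observation))  # 3. store results for evaluation
    return "Stopped: step limit reached."

print(react("How much do a widget and a gadget cost together?"))
```

The `max_steps` cap is a common safeguard: without it, a model that never decides to finish would loop indefinitely.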

5. Dynamic Planning and Executing Agent

ReAct remains one of the most widely adopted agent patterns, but increasingly complex user intents have highlighted the need for more advanced capabilities. As agents move into production environments, demand is rising for better reliability, observability, parallel processing, control, and clear separation of responsibilities. Key priorities include long-term planning, execution transparency, efficiency, and lower latency.

At their core, these advancements separate high-level planning from immediate execution. The approach involves:

- Defining the steps needed to execute an input query, essentially creating a computational graph or directed acyclic graph (DAG).

- Identifying the tools, if required, to carry out each step and executing them with the necessary inputs.

This requires both a planner and an executor. The planner, often powered by a large language model (LLM), designs a detailed step-by-step plan based on the user query. The executor then performs each step, determining the appropriate tools and inputs for the tasks. This iterative process continues until the plan is fully executed, culminating in the final response.
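Separating planning from execution can be sketched with `graphlib.TopologicalSorter` from the Python standard library (3.9+). Here the plan is written by hand as an assumption; in the pattern described above, a planner LLM would emit it. Each step names a hypothetical tool and the steps it depends on, and the revenue figures are made-up sample values. The executor runs steps in dependency order, and `get_ready()` returns the steps that could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: step -> (tool, dependencies). A planner LLM would produce this DAG.
PLAN = {
    "fetch_q1": ("fetch_revenue", []),
    "fetch_q2": ("fetch_revenue", []),
    "compare": ("compare", ["fetch_q1", "fetch_q2"]),
    "report": ("write_report", ["compare"]),
}

# Hypothetical tools: each receives the step name and the outputs of its dependencies.
TOOLS = {
    "fetch_revenue": lambda step, deps: {"fetch_q1": 100, "fetch_q2": 120}[step],
    "compare": lambda step, deps: deps["fetch_q2"] - deps["fetch_q1"],
    "write_report": lambda step, deps: f"Revenue changed by {deps['compare']}",
}

def execute(plan: dict[str, tuple[str, list[str]]]) -> dict:
    sorter = TopologicalSorter({step: deps for step, (_, deps) in plan.items()})
    sorter.prepare()  # raises graphlib.CycleError if the plan is not a DAG
    results = {}
    while sorter.is_active():
        for step in sorter.get_ready():  # steps whose dependencies are all finished
            tool, deps = plan[step]
            results[step] = TOOLS[tool](step, {d: results[d] for d in deps})
            sorter.done(step)
    return results

print(execute(PLAN)["report"])
```

Because the whole plan exists before anything runs, it can be logged, inspected, or approved up front, which supports the observability and control goals mentioned above.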

6. Multi-Agent Systems

Multi-agent systems enhance complex problem-solving capabilities within retrieval-augmented generation (RAG) frameworks. Different agents, each equipped with specific knowledge and skills, collaborate to address intricate queries.

Agentic AI copilots use multi-agent systems to coordinate communication and task execution among agents, showing how multiple agents can form a more capable and efficient information retrieval system.
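A minimal picture of agents collaborating through a shared message log: each agent has its own role, and later agents build on earlier agents' messages. The three roles (researcher, writer, reviewer), their behavior, and the `Message` type are hypothetical; each `act` method stands in for an LLM call with a role-specific prompt and, for the researcher, its own knowledge source.

```python
from dataclasses import dataclass

@dataclass
class Message:
    sender: str
    content: str

class Researcher:
    name = "researcher"
    def __init__(self, knowledge: dict[str, str]):
        self.knowledge = knowledge  # this agent's own knowledge source
    def act(self, task: str, log: list[Message]) -> str:
        hits = [fact for key, fact in self.knowledge.items() if key in task.lower()]
        return " ".join(hits) or "No facts found."

class Writer:
    name = "writer"
    def act(self, task: str, log: list[Message]) -> str:
        facts = next(m.content for m in log if m.sender == "researcher")
        return f"Answer: {facts}"

class Reviewer:
    name = "reviewer"
    def act(self, task: str, log: list[Message]) -> str:
        return "rejected" if "No facts found" in log[-1].content else "approved"

def collaborate(task: str, agents: list) -> list[Message]:
    log: list[Message] = []
    for agent in agents:  # fixed hand-off order; a coordinator could choose dynamically
        log.append(Message(agent.name, agent.act(task, log)))
    return log

team = [Researcher({"vpn": "Reset the VPN client, then sign in again."}), Writer(), Reviewer()]
for msg in collaborate("How do I fix my VPN?", team):
    print(f"{msg.sender}: {msg.content}")
```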

Agentic RAG Implementation Frameworks

Implementing Agentic Retrieval-Augmented Generation (RAG) typically relies on frameworks that integrate intelligent agents into information retrieval and generation. Several frameworks support the development of Agentic RAG systems:

1. CrewAI

Overview: CrewAI is an open-source framework designed to orchestrate role-based AI agents, enabling them to collaborate effectively to achieve complex goals.

Features:

- Role-Based Agent Design: Allows the creation of agents with specific roles and goals, facilitating structured collaboration.

- Task Management: Supports the definition and dynamic assignment of tasks to agents.

- Inter-Agent Delegation: Enables agents to delegate tasks among themselves, enhancing flexibility and efficiency.

2. AutoGen

Overview: AutoGen is a versatile framework developed by Microsoft for building conversational agents. It treats workflows as conversations between agents, making it intuitive for users who prefer interactive interfaces.

Features:

- Conversational Workflow: Models workflows as conversations, facilitating natural interactions.

- Tool Integration: Supports various tools, including code executors and function callers, allowing agents to perform complex tasks autonomously.

- Customizability: Enables users to extend agents with additional components and define custom workflows.

3. LangChain

Overview: LangChain is a framework designed to build applications powered by large language models (LLMs). It offers components for integrating LLMs with external data sources and tools, enabling the creation of agentic RAG systems.

Features:

- Agent Modules: Facilitate the development of agents capable of reasoning and interacting with various tools.

- Tool Integration: Supports the incorporation of external tools, such as APIs and databases, into the agentic workflows.

- Prompt Management: Provides utilities for constructing and managing prompts to guide agent behavior.

4. LlamaIndex

Overview: LlamaIndex is a framework that simplifies the connection between LLMs and external data sources, structuring information to facilitate efficient querying.

Features:

- Indexing and Storage: Organizes documents into structures optimized for retrieval.

- Query Interface: Provides a seamless interface for querying indexed data using LLMs.

- Integration Capabilities: Supports integration with various data sources and retrieval mechanisms.

5. LangGraph

Overview: LangGraph is a framework from the LangChain ecosystem that models agent workflows as graphs, enabling stateful, multi-step reasoning and retrieval.

Features:

- Graph-Based Orchestration: Represents workflows as nodes (steps) and edges (transitions), including cycles, so an agent can loop, for example retrying retrieval until the results are good enough.

- Agent Integration: Supports agents whose control flow, shared state, and tool calls are defined explicitly in the graph.

- Scalability: Designed to handle large-scale data and complex workflows.
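The core idea behind graph-based orchestration, nodes that update shared state plus conditional edges that may loop back, can be illustrated in plain Python. This is deliberately not the LangGraph API; the node names, the `state` dict, the synonym table, and the grading rule are all hypothetical. The loop retrieves, grades the results, and rewrites the query before retrying, with a retry cap so the cycle always terminates.

```python
import re

CORPUS = ["Password resets are handled in the self-service portal."]  # hypothetical
SYNONYMS = {"pwd": "password"}  # hypothetical query-rewrite table
MAX_RETRIES = 2

def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())

# Each node reads and updates the shared state, then returns the name of the next node.
def retrieve(state: dict) -> str:
    query_terms = set(tokens(state["query"]))
    state["docs"] = [d for d in CORPUS if query_terms & set(tokens(d))]
    return "grade"

def grade(state: dict) -> str:
    # Conditional edge: generate if something relevant came back, else rewrite and retry.
    if state["docs"] or state["retries"] >= MAX_RETRIES:
        return "generate"
    return "rewrite"

def rewrite(state: dict) -> str:
    state["query"] = " ".join(SYNONYMS.get(t, t) for t in tokens(state["query"]))
    state["retries"] += 1
    return "retrieve"  # edge back to retrieval: this is the cycle

def generate(state: dict) -> str:
    state["answer"] = state["docs"][0] if state["docs"] else "No answer found."  # LLM stub
    return "END"

NODES = {"retrieve": retrieve, "grade": grade, "rewrite": rewrite, "generate": generate}

def run(query: str) -> dict:
    state = {"query": query, "docs": [], "retries": 0, "answer": None}
    node = "retrieve"
    while node != "END":
        node = NODES[node](state)
    return state

print(run("How do I change my pwd?")["answer"])
```

The cycle is what distinguishes this from a fixed DAG: the first retrieval finds nothing for "pwd", the rewrite node expands it to "password", and the second pass succeeds.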

These frameworks offer diverse approaches to implementing agentic RAG systems, each with unique features tailored to different application needs. Selecting the appropriate framework depends on specific project requirements, such as the complexity of tasks, scalability needs, and integration capabilities.

Key Features and Benefits of Agentic RAG

- Orchestrated Question-Answering: Agentic orchestration streamlines the RAG system’s question-answering process by breaking it into smaller, manageable tasks, assigning specialized agents to each, and ensuring smooth coordination for optimal outcomes.

- Goal-Driven Interactions: Agents are designed to recognize and pursue specific objectives, enabling them to handle more complex and meaningful queries.

- Advanced Planning and Reasoning: The framework’s agents excel in multi-step planning and reasoning, determining the most effective strategies for retrieving, analyzing, and synthesizing information to answer intricate questions.

- Tool Utilization and Adaptability: Agentic RAG agents seamlessly integrate external tools and resources, such as search engines, databases, and APIs, to enhance their ability to gather and process information.

- Context Awareness: These systems factor in user preferences, past interactions, and situational context to make informed decisions and take appropriate actions.

- Continuous Learning: Agents in the framework can improve over time. As their external knowledge sources expand with new challenges and data, they become better able to address increasingly complex questions.

- Customizability and Flexibility: Agentic RAG is highly adaptable, enabling customization for specific tasks, industries, and domains. Agents and their functionalities can be tailored to meet unique requirements.

- Enhanced Accuracy and Efficiency: By combining large language models (LLMs) with agent-based systems, Agentic RAG can deliver higher accuracy and fewer AI hallucinations than traditional methods.

- Unlocking New Possibilities: This technology paves the way for innovative applications across diverse sectors, including personalized assistants, advanced customer service solutions, and beyond.

Agentic RAG offers a powerful and flexible approach to advanced question-answering. By combining intelligent agents with external data retrieval, it addresses complex information challenges effectively. Its strengths in planning, reasoning, tool integration, and continuous learning make it a significant advance toward accurate and reliable knowledge retrieval.

Practical Applications of Agentic RAG

Enterprise-Level Use Cases

Customer Support Transformation – Agentic RAG revolutionizes customer support by delivering hyper-personalized, real-time assistance. For example:

Dynamic Problem Solving: A telecom support bot identifies a customer’s issue, retrieves their billing and technical history, and provides an accurate resolution in one seamless interaction by accessing and comparing information from multiple documents.

AI Copilots: AI copilots powered by Agentic RAG assist professionals in industries such as finance, legal, and healthcare by:

- Summarizing case studies and reports.

- Generating tailored recommendations based on user inputs.

- Incorporating new data dynamically during interactions.

Industry-Specific Advantages

- Healthcare – Agentic RAG assists in diagnostic support by integrating patient records with the latest medical research. Physicians receive suggestions tailored to individual patient profiles, which can help reduce diagnostic errors.

- Finance – In fraud detection, Agentic RAG identifies suspicious activities by reasoning over vast transaction histories, combining real-time data retrieval with contextual risk analysis.

- E-Commerce – Agentic RAG enhances personalized shopping experiences by dynamically recommending products based on real-time browsing behavior and historical preferences.

Implementation Strategies for Agentic RAG

Agentic RAG integrates retrieval mechanisms with generative models to boost AI performance in tasks requiring accurate information recall and coherent response generation. Here’s a streamlined step-by-step guide:

1. Define Objectives

- Identify Use Cases: Determine where Agentic RAG adds value (e.g., chatbots, content generation, knowledge retrieval).

- Set Goals: Establish clear targets like improving response accuracy or relevance.

2. Choose Components

- Retrieval System: Select a retrieval method such as BM25 (keyword-based) or Dense Passage Retrieval (embedding-based) for document fetching.

- Generative Model: Use a generative language model, such as a GPT-family model, to produce context-aware responses.
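BM25 scores a document by summing, over the query terms, an inverse document frequency (IDF) weight times a term frequency that saturates as a term repeats and is normalized by document length. Below is a minimal from-scratch sketch assuming the typical parameter values k1 = 1.5 and b = 0.75, a Lucene-style IDF, and a simple lowercase word tokenizer; production systems use a search engine or library with proper text analysis instead.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

class BM25:
    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.tfs = [Counter(tokenize(d)) for d in docs]      # term frequencies per document
        self.lens = [sum(tf.values()) for tf in self.tfs]    # document lengths in tokens
        self.avgdl = sum(self.lens) / len(docs)
        df = Counter(t for tf in self.tfs for t in tf)       # documents containing each term
        n = len(docs)
        # IDF(t) = ln(1 + (N - n_t + 0.5) / (n_t + 0.5)); the +1 keeps weights non-negative.
        self.idf = {t: math.log(1 + (n - c + 0.5) / (c + 0.5)) for t, c in df.items()}

    def score(self, query: str, i: int) -> float:
        # score = sum of IDF(t) * f * (k1 + 1) / (f + k1 * (1 - b + b * |D| / avgdl))
        tf, dl = self.tfs[i], self.lens[i]
        total = 0.0
        for t in tokenize(query):
            f = tf.get(t, 0)
            if f:
                norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
                total += self.idf[t] * f * (self.k1 + 1) / norm
        return total

    def top_k(self, query: str, k: int = 3) -> list[int]:
        scores = [self.score(query, i) for i in range(len(self.tfs))]
        return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

docs = ["the cat sat on the mat", "dogs chase cats", "the stock market fell sharply"]
print(BM25(docs).top_k("stock market", k=1))
```

Here `top_k` returns document indices, so the call above selects index 2, the only document containing both query terms.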

3. Prepare Data

- Collect Data: Gather documents for the retrieval system to access.

- Preprocess Data: Clean, tokenize, and normalize data for compatibility.
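A minimal cleaning and normalization pass might look like the following. The specific steps (stripping leftover HTML tags with a regular expression, decoding HTML entities, Unicode NFKC normalization, lowercasing, collapsing whitespace) are common choices assumed for illustration, not a prescribed recipe; a regex is not a full HTML parser, so real pipelines often use a dedicated one.

```python
import html
import re
import unicodedata

def clean(text: str) -> str:
    text = re.sub(r"<[^>]+>", " ", text)         # drop leftover HTML tags
    text = html.unescape(text)                  # decode entities such as &amp; and &nbsp;
    text = unicodedata.normalize("NFKC", text)  # fold compatibility chars (ligatures, no-break spaces)
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()    # collapse runs of whitespace

def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", clean(text))

print(clean("<p>Caf\u00e9&nbsp;&amp;  \ufb01le</p>"))
```

Applying the same `clean` function to documents at indexing time and to queries at search time keeps the two sides comparable.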

4. Build the Retrieval Component

- Indexing: Create an efficient index of the document collection.

- Query Processing: Develop methods to convert user queries into retrievable formats.
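For keyword retrieval, indexing often means building an inverted index that maps each term to the documents containing it, and query processing means normalizing the query the same way as the documents. The sketch below assumes a tiny hypothetical stopword list and ranks documents by how many query terms they match; a dense-retrieval system would instead embed document chunks and store the vectors in a vector index.

```python
import re
from collections import defaultdict

STOPWORDS = {"the", "a", "an", "of", "to", "is", "how", "do", "i"}  # hypothetical, minimal list

def normalize(text: str) -> list[str]:
    # Apply the same normalization to documents and queries so terms line up.
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]

def build_index(docs: list[str]) -> dict[str, set[int]]:
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, doc in enumerate(docs):
        for term in normalize(doc):
            index[term].add(doc_id)  # posting list: ids of documents containing the term
    return dict(index)

def search(index: dict[str, set[int]], query: str) -> list[int]:
    hits: dict[int, int] = defaultdict(int)
    for term in set(normalize(query)):
        for doc_id in index.get(term, ()):
            hits[doc_id] += 1  # count matching query terms per document
    # Most matches first; ties broken by document id for a stable order.
    return sorted(hits, key=lambda d: (-hits[d], d))

docs = ["Reset a password in the portal", "Portal login troubleshooting", "Expense policy"]
idx = build_index(docs)
print(search(idx, "How do I reset the portal password?"))
```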

5. Integrate Retrieval and Generation

- Pipeline Setup: Route queries through the retrieval system, fetching relevant documents.

- Response Generation: Pass retrieved documents and queries to the generative model for context-rich outputs.

6. Fine-Tune the System

- Train Models: Fine-tune the generative model on datasets of paired queries and contextually relevant responses.

- Evaluate: Continuously assess and improve model accuracy and coherence with LLM evaluation.
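Continuous evaluation needs concrete metrics. For the retrieval half, two common ones are hit rate at k (did at least one relevant document appear in the top k results?) and mean reciprocal rank, or MRR (one divided by the rank of the first relevant result, averaged over queries). The sketch assumes a small hand-labeled set of queries with known relevant document ids; judging the generated answers, for example with an LLM as evaluator, would be a separate step.

```python
def hit_rate_at_k(results: list[list[int]], relevant: list[set[int]], k: int) -> float:
    """Fraction of queries with at least one relevant document in the top k results."""
    hits = sum(1 for ranked, rel in zip(results, relevant) if rel & set(ranked[:k]))
    return hits / len(results)

def mrr(results: list[list[int]], relevant: list[set[int]]) -> float:
    """Mean reciprocal rank of the first relevant document (0 when none is retrieved)."""
    total = 0.0
    for ranked, rel in zip(results, relevant):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in rel:
                total += 1.0 / rank
                break
    return total / len(results)

# Hypothetical evaluation set: ranked doc ids per query, and the ids judged relevant.
results = [[3, 1, 2], [5, 4], [7, 8]]
relevant = [{1}, {5}, {9}]
print(hit_rate_at_k(results, relevant, k=2), mrr(results, relevant))
```

In this toy set, the first query finds its relevant document at rank 2, the second at rank 1, and the third not at all, so MRR is (1/2 + 1 + 0) / 3 = 0.5. Tracking such numbers over time shows whether changes to chunking, indexing, or routing actually help.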

7. Implement Feedback Loops

- User Feedback: Collect insights to refine responses.

- Retraining: Update models using new data and feedback for consistent performance.

8. Deploy the System

- API Development: Build APIs for seamless integration with external applications.

- Monitoring: Use tools to track system performance and address potential issues.

Key Considerations

- Ethics and Transparency: Train on diverse datasets to reduce biases, ensure users understand how responses are generated, and maintain trust.

- Continuous Improvement: Update models and workflows regularly to incorporate advancements and evolving user needs.

By following these steps, you can effectively deploy an Agentic RAG system to elevate your AI applications while addressing challenges like data quality, user expectations, and scaling in agentic AI.

Key Challenges in Deploying Agentic RAG Systems

Implementing Agentic Retrieval-Augmented Generation comes with several challenges. Here are some key hurdles to consider:

1. Data Quality and Availability

When data sources are inconsistent, ensuring that retrieved information is reliable and up to date can be difficult. The extensive preprocessing needed to make data compatible with both the retrieval and generation components can also be time-consuming.

2. Integration Complexity

Integrating different models (the retrieval and generation components) requires careful attention to their compatibility and to how they interact within the system. Setting up a seamless pipeline that efficiently handles data flow between components can also be technically challenging.

3. Performance Optimization

Balancing the speed of retrieval with the quality of generated responses can be tricky, especially in real-time applications. As the data collection grows, ensuring that the retrieval system remains efficient and responsive is crucial.

4. Model Fine-tuning

Fine-tuning LLMs on relevant datasets can require significant computational resources and expertise.

5. User Interaction and Feedback

Accurately interpreting user queries so that relevant documents are retrieved can be complex, especially when the language is ambiguous. Establishing effective mechanisms for capturing user feedback to continuously improve the system can also be challenging.

6. Ethical and Bias Considerations

If the training data contains biases, the model may produce unfair responses, which requires ongoing monitoring and adjustment. Ensuring that users understand how information is retrieved and generated is essential for building trust, but it can be difficult to achieve.

7. Regulatory Compliance

Adhering to data-usage regulations such as GDPR poses challenges, especially when handling personal or sensitive information. Ethical considerations and regulatory compliance require careful attention.

8. Maintenance and Updates

Regularly updating models and datasets to keep up with changes in information and user needs requires ongoing effort and resources. Over time, maintaining the system’s architecture and ensuring that it adapts to new technologies can become a challenge.

Addressing these challenges can help organizations improve the effectiveness of their Agentic RAG implementations and realize their full benefits. Partnering with professional services can therefore be a good option for your business.

The Future of Agentic RAG: Emerging Trends to Watch

Agentic RAG is on a trajectory to revolutionize AI-driven information retrieval and generation. Its future is shaped by innovative trends poised to redefine its capabilities and applications. Here’s a glimpse into what’s ahead:

1. Enhanced Multimodal Capabilities

- Seamless Data Integration: The next generation of RAG systems will combine multimodal data—text, images, and audio—for richer, context-aware responses.

- Textual and Visual Data Fusion: Imagine virtual assistants or educational tools that retrieve images and generate descriptive text simultaneously, creating a more immersive experience.

2. Improved Personalization

- Tailored Responses: Future RAG systems will use user profiles and preferences to deliver hyper-personalized answers, increasing user engagement.

- Adaptive Learning: These systems will refine themselves dynamically, learning from individual user interactions to offer increasingly relevant responses.

3. Advanced Retrieval Techniques

- Smarter Algorithms: Breakthroughs in retrieval methods, like transformer-based architectures, will make document fetching faster and more precise.

- Knowledge Graphs: Enriching retrieval with knowledge graphs will connect concepts and provide deeper contextual understanding.

4. Greater Focus on Explainability

- Transparent AI: As demand for ethical AI grows, RAG models will prioritize explainability, showing users how decisions are made and responses generated.

- Building Trust: Making AI’s inner workings clear will give users greater confidence in its outputs.

5. Ethical AI Practices

- Bias Reduction: Future efforts will focus on identifying and mitigating biases in training data to ensure fairness.

- Content Moderation: Robust filters will prevent misinformation and harmful content, ensuring responsible information generation.

6. Cloud and Edge Computing

- Decentralized Deployment: Edge computing will bring RAG closer to users, reducing latency and enhancing responsiveness in mobile and IoT applications.

- Scalable Solutions: Cloud technologies will power RAG systems, enabling efficient handling of massive datasets and complex models.

7. Continuous Learning and Adaptation

- Real-Time Updates: RAG systems will evolve on the fly, integrating new data and user feedback to stay current.

- Dynamic Context Understanding: These systems will adapt seamlessly to changing user needs and real-world scenarios.

8. Industry-Specific Applications

- Sector-Driven Innovation: Expect tailored RAG solutions in industries like healthcare (clinical support), finance (risk analysis), and education (learning tools).

- Cross-Industry Collaboration: Shared best practices and datasets will accelerate advancements across sectors.

9. Integration with Advanced AI Technologies

- Conversational AI Synergy: Combining RAG with conversational AI will result in highly engaging and insightful interactions.

- Augmented Intelligence: Merging RAG with predictive analytics and sentiment analysis will offer comprehensive, real-world solutions.

Conclusion: Agentic RAG’s Promise

The future of Agentic RAG is rich with opportunity. As these trends mature, RAG systems will become more intelligent, ethical, and adaptable. From reshaping how we retrieve information to enhancing cross-industry collaboration, the impact of Agentic RAG will be transformative, paving the way for a smarter and more connected future.

Aisera is leading the enterprise Agentic AI revolution with a comprehensive, enterprise-grade platform built on the core principles of modularity, scalability, interoperability, and reinforcement learning. By seamlessly integrating with existing enterprise systems, Aisera provides a smooth path to unlocking new possibilities and the full potential of enterprise Agentic AI. Book a custom AI demo to experience the power of Agentic AI in action.