What is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open standard introduced by Anthropic in late 2024 that establishes a universal language for connecting Large Language Models (LLMs) to external data systems and tools. By replacing brittle, custom integrations with a unified interface, MCP serves as the foundational infrastructure for scalable agentic AI, enabling autonomous systems to securely access and act upon enterprise data across disparate environments.

The “USB-C” for Enterprise AI

To understand the architectural significance of MCP, industry experts compare it to “USB-C for AI applications.”

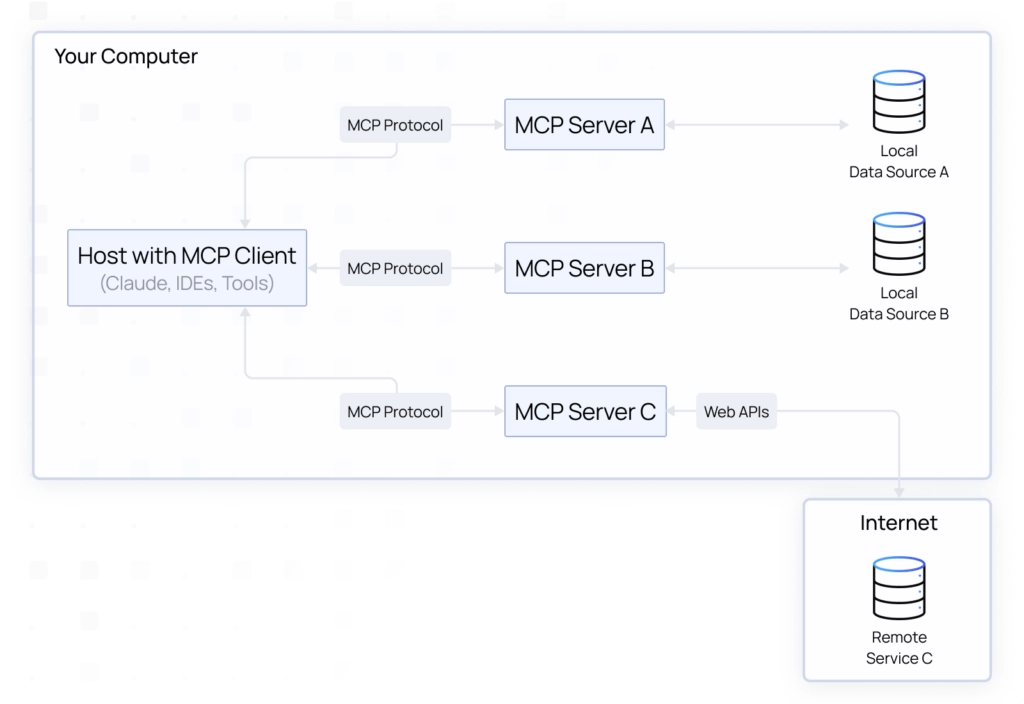

In the current landscape, connecting agentic AI workflows to platforms like ServiceNow, Salesforce, or a proprietary SQL database requires a bespoke API integration for every single tool. This creates a complex “many-to-many” integration nightmare. MCP solves this fragmentation by standardizing the connection flow into a unified protocol:

- Standardized Connectivity: Just as USB-C allows a hard drive to connect to any laptop regardless of brand, MCP eliminates the need for custom “glue code,” allowing models to swap tools effortlessly.

- The MCP Architecture: The protocol relies on distinct components working in tandem, specifically, the AI client (the interface) connecting directly to MCP servers (the specialized units that expose data or capabilities). This architecture ensures that data sources remain secure while becoming instantly accessible to the AI.

Why Do We Need MCP?

Fragmentation in AI Tooling

Currently, integrating an AI assistant with multiple data sources or tools means dealing with countless unique APIs. This leads to an N×M complexity problem, where N different AI systems interact with M tools, each integration being unique and costly.

MCP simplifies this by offering a standard integration method: implement once, reuse everywhere. This reduces development overhead and accelerates innovation, making systems flexible and scalable. Before USB-C, each device required unique connectors (VGA, serial ports, proprietary cables).

USB-C standardized these connections, allowing any compatible device to plug in effortlessly. MCP does the same for AI tooling, fostering a “plug-and-play” ecosystem for AI integration.

MCP Architecture: Prompts, Tools, and Resources

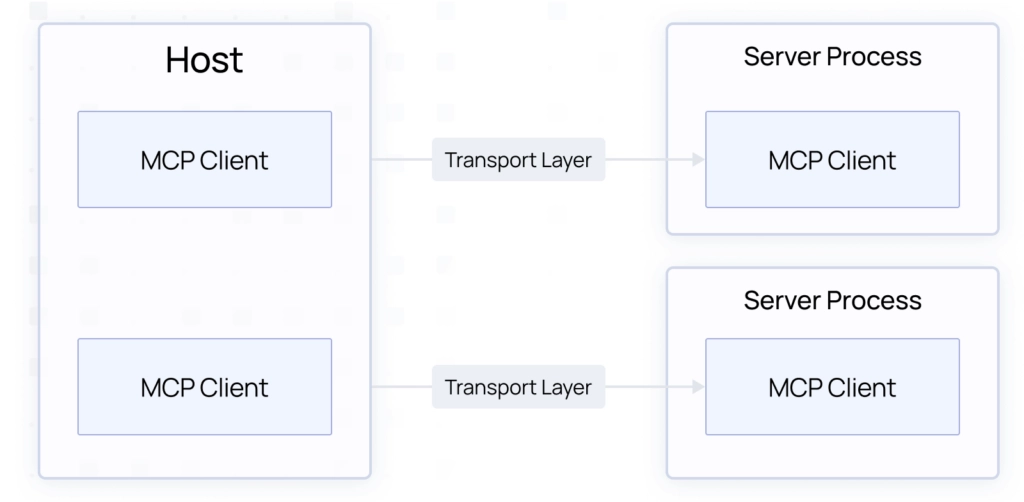

MCP follows a simple client-server architecture. The AI application (for example, a chat assistant or an AI-powered IDE) acts as the MCP client, and it connects to one or more MCP servers which expose some capability or data. Under the hood, MCP uses a JSON-RPC based message protocol to facilitate requests and responses between clients and servers.

- Hosts are LLM applications (like Claude Desktop or IDEs) that initiate connections

- Clients maintain 1:1 connections with servers inside the host application

- Servers expose capabilities via three standardized types:

- Prompts: Prompts encapsulate reusable conversational workflows or templates. They provide AI applications with pre-defined structures or patterns for engaging in conversations or generating specific types of responses.

- Tools: Tools represent functions or actions that the AI application can invoke dynamically. They enable the AI to perform specific tasks or operations, such as data analysis, language translation, or image generation, by interacting with external services or APIs.

- Resources: Resources encompass static data that is offered to the AI application for contextual reference. This data can include files, database entries, or other relevant information that the AI can utilize to enhance its understanding or generate more informed responses.

How Does MCP Work?

When an MCP client connects to a server, it first establishes a connection. The server advertises which of the above capabilities it supports (it might support only tools, or tools+resources, etc.).

The client and server then speak a common JSON-RPC protocol for exchanging messages. For instance, to use a tool, the client will send a JSON request {“method”: “tools/call”, “params”: {name: “…”, input: {…}}} and receive a response with the result.

To fetch a resource, the client might call {“method”: “resources/read”, “params”: {“uri”: “…”}}.

All of this is abstracted by the MCP SDKs, so as a developer, you can register Python functions as tools or define resources easily in code. The beauty of MCP’s architecture is that once an AI host (like Claude, ChatGPT, an IDE, etc.) implements an MCP client, it can interface with any MCP server. Likewise, if you build an MCP server for, say, Jira (project tickets), any MCP-compatible AI app can now use Jira data, not just the one you initially targeted. This decoupling through a standard protocol is what enables a “write once, use anywhere” ecosystem.

In summary, MCP’s architecture introduces a common language for AI tools: servers expose Prompts, Resources, and Tools in a consumable format, and clients invoke those as needed. By standardizing these patterns, MCP makes it much easier to compose complex AI workflows. An AI agent can mix and match multiple MCP servers (e.g., one server provides relevant documents as resources, another server provides a calculator tool, another offers an email-sending tool), all in the same conversation. This composability and reusability are a core goal of MCP. The next section will illustrate a concrete example of how an MCP-based multi-agent system might work in practice.

MCP in Action: Aisera Status Monitoring Example

Consider an AI assistant for checking the Aisera service status and incidents:

- Status Server: Provides real-time system status as a tool

- Incidents Server: Offers incident history lookup

- Details Server: Delivers specific incident information

When asked, “What is the status of aisera.com services?” OR “Has Aisera had any maintenance incidents recently?”, the AI seamlessly invokes the right service to retrieve and present this information.

Simple Code Example (Python)

# Server-side Example

from mcp.server.fastmcp import FastMCP

from mcp.server.fastmcp.prompts import base

# Initialize server

mcp = FastMCP(“aisera-status-server”)

@mcp.tool()

async def get_aisera_status() -> str:

“””Get the current status of all Aisera services.”””

# Implementation details abstracted

page_text = await fetch_page_text(“https://status.aisera.com/”)

result = await process_with_llm(page_text)

return format_status_response(result)

@mcp.resource(“aisera://status”)

async def status_resource() -> str:

“””Get status information as a resource.”””

return await get_aisera_status()

@mcp.prompt()

def check_system_status() -> list[base.Message]:

“””Prompt for checking the overall system status.”””

return [

base.UserMessage(“Check the current status of Aisera services.”),

base.SystemMessage(

“Use the `get_aisera_status()` tool to retrieve the current “ +

“status and provide a concise summary.”

)

]

# Start server

if __name__ == “__main__”:

mcp.run(transport=‘stdio’)

# Client-side Example

from mcp import ClientSession

from anthropic import Anthropic

# Initialize client

client = Anthropic(api_key=“your_api_key”) #could be any LLM of your choice

async def query_aisera_status(question: str):

# Connect to MCP server

session = await connect_to_server(“./aisera_status.py”)

# Get available tools from MCP Server

tools = await session.list_tools()

# Query LLM (claude in this case) with tools available

response = client.messages.create(

model=“claude-3-7-sonnet-20250219”,

messages=[{“role”: “user”, “content”: question}],

tools=tools

)

# Handle any tool calls

if any(c.type == ‘tool_use’ for c in response.content):

tool = response.content[0]

result = await session.call_tool(tool.name, tool.input)

return result.content

return response.content[0].text

Let’s walk through the code

Our MCP Server provides three key capabilities:

- Tools – Function-like endpoints that perform actions

-

- Defined with @mcp.tool() decorator on the server

- Can accept parameters and return structured data

- Resources – Data endpoints accessed via URL-like paths

-

- Defined with @mcp.resource() decorator on the server

- Allow direct access to data (like “aisera://status”) and can include parameters.

- Prompts – Pre-defined conversation flows

-

- Defined with @mcp.prompt() decorator on the server

- Contain user messages and system instructions, and can include parameters.

In a typical MCP Client and MCP Server interaction:

- The client connects to the MCP server and discovers available capabilities

- When a user asks a question, LLM decides whether to:

-

- Call a tool directly (e.g., get_aisera_status())

- Access a resource (e.g., “aisera://status”)

- Execute a prompt flow (e.g., the “check_system_status” prompt)

- The server processes the request and returns formatted information

- The client presents this information to the user

Below is a quick video of how users can leverage MCP with Aisera. The video shows an Aisera MCP server that is used by a Claude Desktop(MCP client) to query the operational status of aisera.com services from the Aisera status page (https://status.aisera.com/). The example uses Claude Desktop(MCP client); however, any MCP client can call this MCP service using standard MCP client-server protocol.

We will release the code to implement this in a subsequent blog post.

Pros, Cons, and Outlook for MCP

Let’s evaluate the advantages and disadvantages of MCP and where it fits (or might fall short) in the broader AI landscape:

Strengths of MCP:

- Standardization and Interoperability: MCP provides a common language bridging AI systems and external data/tools. This dramatically reduces the integration effort when connecting new systems.

- Flexibility and Vendor-Agnostic Design: MCP is not tied to any single model or vendor. It’s an open protocol with an open-source reference implementation. This means you could use MCP with Anthropic’s Claude, OpenAI models, open-source LLMs, or any future model.

- Ecosystem and Reusability: The library of available MCP servers is growing as MCP gains adoption. Anthropic has already open-sourced servers for common apps (Google Drive, Slack, GitHub, databases, etc.).

- Dynamic and Contextual by Nature: MCP was specifically built to handle the dynamic needs of AI agents. That includes maintaining context between messages (persistent connection), allowing the AI to call for more information mid-conversation (through tools/resources), etc.

Challenges with MCP:

- Maturity and Adoption (Early Days): MCP is a very new standard. It was introduced in late 2024, and while it’s gained a lot of hype and some early support, it’s not yet ubiquitous.

- Indirection and Overhead: Using MCP introduces an extra layer between the AI and the end service. Instead of the AI calling a REST API directly, it’s calling an MCP server, which then calls the API.

- Missing Features / Evolving Spec: The Model Context Protocol (MCP) is still in its early stages, with some features like streaming outputs and authentication yet to be fully developed. Early adopters may need to implement some features themselves and be prepared to update their implementations as the protocol evolves.

- MCP and Statefulness: MCP’s initial stateful architecture, leveraging persistent connections (e.g., SSE) for bidirectional features like notifications and server-initiated sampling, poses challenges for serverless deployments and horizontally-scaled services needing complex routing or state persistence. A proposal is “Streamable HTTP” transport, decoupling basic stateless requests (HTTP POST) from optional stateful capabilities (notifications, sampling) handled over a disconnectable streaming channel (initially SSE). This progressive enhancement model aims to increase deployment flexibility while retaining pathways for advanced, state-dependent interaction patterns.

- Security Concerns—Prompt Injection and “Rug Pulls”: Recent discussions highlight the risks of giving LLMs access to powerful tools, especially if untrusted or malicious servers are in the mix. Attackers can exploit MCP via “rug pulls” (where a server silently redefines or intercepts tools), “tool shadowing,” or “tool poisoning” by embedding hidden instructions in tool descriptions. Such prompt injection attacks can lead an LLM to leak private data or carry out unauthorized actions. While these risks aren’t unique to MCP, the protocol’s goal of broad interoperability can inadvertently amplify the impact if client implementations don’t carefully confirm tool calls and watch for changed or malicious definitions.

The Future of MCP

MCP is great for enabling interoperability between multiple AI applications and tools, especially in enterprise settings with numerous data silos. It’s ideal for building platforms and extensible AI architectures. However, it might be excessive for simple AI assistants or single-purpose AI use cases, and it doesn’t handle agent reasoning or long-term memory on its own.

Security considerations—particularly prompt injection vulnerabilities—add an extra dimension to MCP’s future. Experts emphasize the lack of easy fixes and the importance of “human in the loop” confirmations, strong auditing of changed or updated tool definitions, and clear UI indicators for any potentially destructive tool calls. As more developers connect powerful and sensitive tools to LLMs, the protocol’s approach to trust and safety will likely shape its adoption and evolution.

The ideal path is for MCP to become a ubiquitous standard for tool integration in AI, much like USB or HTTP, while also fostering an ecosystem of responsible server and client implementations that address prompt injection and data exfiltration risks head-on, which will require the tech community to agree and align on. MCP could revolutionize AI by enabling seamless, secure integration of models and resources, leading to truly robust, context-aware AI agents.

Finally, here are some of the open-source MCP Servers I have been playing with.

| Server Name | Description/Purpose | Key Features Summary |

|---|---|---|

| Filesystem MCP Server | Interact with the local file system | Read/write files, manage directories, search files, get metadata |

| Google Drive MCP Server | Interact with Google Drive cloud storage | Search files, read files by ID, supports all file types |

| Slack MCP Server | Interact with Slack workspaces | Manage channels, messages, threads, reactions, get user info |

| Fetch MCP Server | Retrieve and process web page content (HTML to Markdown) | Fetch URL content, convert HTML to Markdown, chunking, raw HTML option, length control |