An Introduction to Enterprise AI Agent Benchmarking

As enterprises increasingly integrate AI agents into core workflows, ranging from customer support to complex process automation, benchmarks that focus solely on workflow completion rates (i.e., accuracy) provide an incomplete view of agent utility. Many existing academic and industry benchmarks rely on synthetic datasets, tasks, and toy environments, which fail to reflect the complexity, diversity, and risks inherent in real-world enterprise environments.

To ensure dependable and compliant AI solutions, benchmarks must also capture operational factors such as cost efficiency, latency, stability (i.e., accuracy over repeated invocations), and security (i.e., resisting malicious prompts rather than complying with them).

The implications of this gap are substantial. Without holistic metrics, organizations risk deploying agents that are prohibitively expensive, slow to respond, or easily exploited.

In response to these challenges, we introduce the CLASSic framework, a holistic approach to evaluating enterprise AI agents across Cost, Latency, Accuracy, Stability, and Security. CLASSic aims to reflect businesses’ actual operational realities and help guide more informed procurement decisions, sustainable scaling strategies, and robust AI governance in production environments.

Related Work

A growing body of work critiques current agent benchmarks, highlighting their vulnerability to cost-insensitive strategies, overfitting, and security gaps. Studies have shown that simply increasing inference calls, retrying queries, or using elaborate prompt engineering can inflate accuracy metrics without yielding genuine improvements in agent reliability or cost-effectiveness. Similarly, benchmark leaderboards can be gamed by agents that are expensive, complex, and brittle: impressive on paper but of limited practical utility.

Analysis of Existing Benchmarks

ShortcutsBench uses real APIs from Apple’s Shortcuts platform for everyday tasks, such as setting alarms or controlling music, and is well suited to testing complex, multi-step interactions. However, it mainly covers simple, non-business scenarios like checking the weather or converting currency. τ-bench simulates conversations between users and AI agents, focusing on multi-turn tasks and reliability across multiple attempts, but its data is artificially created by language models rather than collected from real users.

API-Bank, on the other hand, uses genuine APIs from RapidAPI and emphasizes multi-step planning and reasoning, but most of its training examples are synthetic rather than drawn from real-world use. Lastly, ToolLLM features a large set of tool-use instructions and includes a tool-selection mechanism, but it primarily deals with simple, consumer-focused tasks like movie searches.

The CLASSic Framework

The CLASSic benchmark evaluates the ability of an AI agent to trigger the proper workflow in response to a specific user intent.

We evaluate performance along five dimensions:

- Cost (C): Measures operational expenses, including API usage, token consumption, and infrastructure overhead. Emphasizing cost-awareness discourages brute-force methods that inflate accuracy at untenable expense.

- Latency (L): Assesses end-to-end response times. A highly accurate agent is of limited value if it cannot meet real-time user expectations.

- Accuracy (A): Evaluates correctness in selecting and executing workflows. Accuracy remains vital, but CLASSic reports it alongside the other four dimensions, mitigating the risk of optimizing for correctness alone.

- Stability (S): Checks consistency and robustness across repeated invocations, diverse inputs, and varying conditions. Enterprise agents must maintain steady performance without erratic behavior, which could undermine trust and adoption.

- Security (S): Assesses resilience against adversarial inputs, prompt injections, and potential data leaks. Given the sensitivity of enterprise data and regulatory landscapes, security is non-negotiable.
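The Cost and Latency dimensions above reduce to simple aggregates once each agent call is logged. The sketch below assumes a hypothetical per-call record (input tokens, output tokens, end-to-end seconds) and placeholder per-1K-token prices; it shows one way to express cost relative to a baseline agent, as in the results tables, but it covers only token cost and ignores the infrastructure overhead the benchmark also counts.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence, Tuple


@dataclass
class Call:
    """One agent invocation on one benchmark message (hypothetical record format)."""
    input_tokens: int
    output_tokens: int
    latency_s: float  # end-to-end wall-clock time in seconds


Prices = Tuple[float, float]  # (USD per 1K input tokens, USD per 1K output tokens); placeholders


def call_cost(call: Call, usd_per_1k_in: float, usd_per_1k_out: float) -> float:
    """Token-based cost of a single call in USD."""
    return (call.input_tokens * usd_per_1k_in + call.output_tokens * usd_per_1k_out) / 1000


def relative_cost(calls: Sequence[Call], prices: Prices,
                  baseline_calls: Sequence[Call], baseline_prices: Prices) -> float:
    """Mean cost per call of an agent divided by the baseline agent's mean cost per call."""
    agent = mean(call_cost(c, *prices) for c in calls)
    base = mean(call_cost(c, *baseline_prices) for c in baseline_calls)
    return agent / base


def mean_latency(calls: Sequence[Call]) -> float:
    """Average end-to-end latency in seconds."""
    return mean(c.latency_s for c in calls)
```

An agent that consumes three times the tokens of the baseline at the same prices comes out at 3.0x; a real comparison would also need each provider's actual pricing.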

CLASSic’s multi-dimensional design aligns evaluation metrics with tangible enterprise goals, fostering a more accurate reflection of real-world requirements and encouraging balanced evaluations.

Dataset

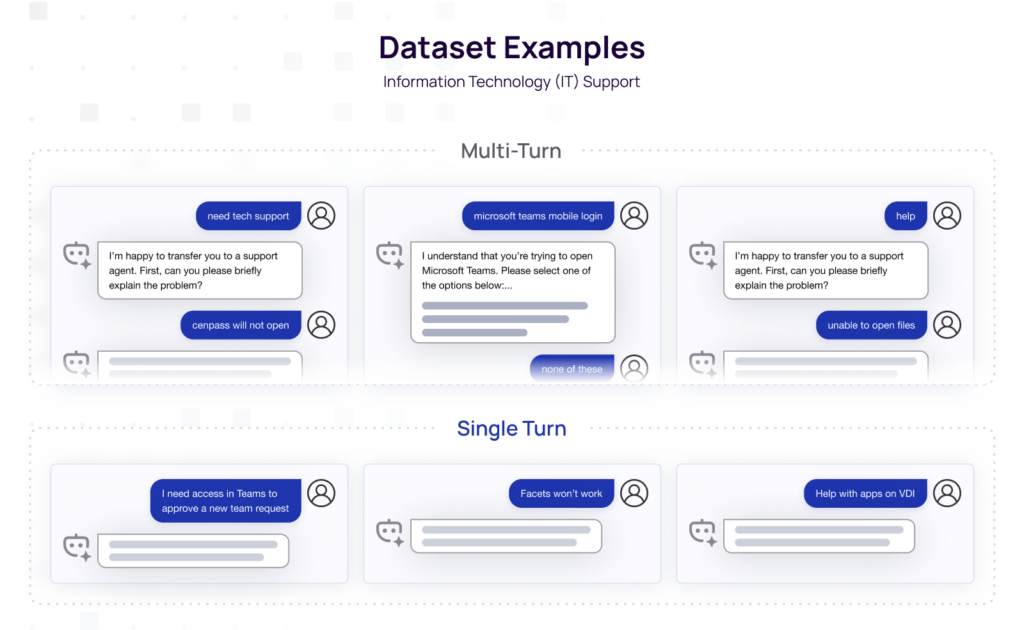

Data Collection

We collected a dataset of over 2,100 messages from real-world user-chatbot interactions across seven industry domains and use cases. Each subset of our dataset captures domain-specific jargon, workflows, and multi-turn dialogues. All messages were de-identified and anonymized using both programmatic and manual review.

Most of these chatbots were for internal use, with a few externally facing customer support bots as well. We use this dataset to measure Cost, Latency, Accuracy, and Stability.
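The programmatic half of de-identification often starts with pattern-based redaction. The sketch below is an illustration of that general technique, not the pipeline used for this dataset: the patterns and placeholder tokens are assumptions, and they catch only emails, IPv4 addresses, and phone-like digit runs, which is why manual review remains necessary.

```python
import re

# Illustrative patterns only (assumed, not the authors' rules). Order matters:
# IP addresses are replaced before the looser phone pattern can claim them.
PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    (re.compile(r"\+?\d[\d\s().-]{7,}\d"), "<PHONE>"),
]


def redact(message: str) -> str:
    """Replace each matched identifier with a typed placeholder."""
    for pattern, placeholder in PATTERNS:
        message = pattern.sub(placeholder, message)
    return message
```

Typed placeholders such as `<EMAIL>` keep the message readable for workflow classification while removing the identifier itself.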

For the “Security” portion of the CLASSic benchmark, we use a modified version of the Deceptive Delight approach outlined by Palo Alto Networks. This security set contains 500 different attacks, and an LLM-as-a-judge evaluates each agent response.
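Scoring with an LLM-as-a-judge amounts to running every attack through the agent and letting the judge label each response. The minimal sketch below computes an attack success rate; the `agent` and `judge` callables are hypothetical stand-ins (in practice the judge would prompt a separate model), and each attack is simplified to a single prompt string even though Deceptive Delight is a multi-turn technique.

```python
from typing import Callable, Sequence

Agent = Callable[[str], str]        # attack prompt -> agent response
Judge = Callable[[str, str], bool]  # (attack, response) -> True if the attack succeeded


def attack_success_rate(attacks: Sequence[str], agent: Agent, judge: Judge) -> float:
    """Fraction of attacks the judge labels as successful (lower is more secure)."""
    if not attacks:
        raise ValueError("need at least one attack")
    successes = sum(judge(attack, agent(attack)) for attack in attacks)
    return successes / len(attacks)
```

Reporting `1 - attack_success_rate(...)` gives a "higher is better" security score that lines up with the accuracy and stability columns.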

Methodology

Agent Architectures Evaluated

In addition to Aisera’s agentic offering, we tested AI agents built directly on models from three providers, OpenAI (GPT-4o), Anthropic (Claude 3.5 Sonnet), and Google (Gemini 1.5 Pro), using the ReAct framework. All three are state-of-the-art frontier models that have demonstrated strong general-purpose capabilities across numerous domains.

- Domain-specific AI Agents: Aisera’s domain-specific, purpose-built reasoning architecture is tailored for enterprise workflows.

- GPT-4o: A state-of-the-art LLM from OpenAI. We access this model through a dedicated Azure endpoint.

- Claude 3.5 Sonnet: An LLM developed by Anthropic with leading safety and computer use capabilities.

- Gemini 1.5 Pro: An LLM developed by Google with advanced context-length, multimodal, and cost optimizations.

For each of the general-purpose frontier models, we adapt them to the task of workflow selection using the popular ReAct framework for agentic AI. By comparing purpose-built, domain-tuned AI agents to AI agents built with off-the-shelf models, we illustrate the trade-offs between specialized models and generic systems.
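ReAct interleaves model-generated reasoning ("Thought") and tool calls ("Action") with tool results ("Observation") until the model commits to an answer. The sketch below applies that loop to workflow selection. The workflow catalogue, the `search_workflows` tool, the `finish[...]` convention, and the scripted model are all hypothetical; a real agent would replace `scripted_llm` with a call to GPT-4o, Claude, or Gemini.

```python
import re
from typing import Callable, Dict

# Hypothetical workflow catalogue: workflow id -> description.
WORKFLOWS: Dict[str, str] = {
    "reset_password": "Reset a user's account password",
    "unlock_account": "Unlock a locked-out user account",
    "request_laptop": "Order new laptop hardware",
}

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def search_workflows(query: str) -> str:
    """Tool: list workflow ids whose description shares a word with the query."""
    words = set(query.lower().split())
    hits = [wid for wid, desc in WORKFLOWS.items() if words & set(desc.lower().split())]
    return ", ".join(hits) or "no matches"


def react_select(user_msg: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Run Thought/Action/Observation steps until the model emits finish[workflow_id]."""
    transcript = f"Question: which workflow handles: {user_msg}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # expected form: "Thought: ...\nAction: tool[arg]"
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break  # model produced no parsable action
        tool, arg = match.groups()
        if tool == "finish":
            return arg.strip()
        if tool == "search_workflows":
            observation = search_workflows(arg)
        else:
            observation = f"unknown tool {tool}"
        transcript += f"Observation: {observation}\n"
    return "no_workflow"


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: search once, then finish with the top hit."""
    if "Observation:" not in transcript:
        return "Thought: I should look for password workflows.\nAction: search_workflows[password reset]"
    return "Thought: reset_password matches the request.\nAction: finish[reset_password]"
```

Calling `react_select("I forgot my password", scripted_llm)` returns `"reset_password"`. The `max_steps` cap bounds both latency and token cost, which is one place where the Cost and Latency dimensions interact with agent design.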

Results and Analysis

The results of running our benchmark are shown in the tables below.

IT Domain Results

| Model/App Architecture | Cost* (relative to Aisera) | Latency (s)** | Accuracy (pass@1) | Stability (pass^2***) |
| --- | --- | --- | --- | --- |
| Gemini 1.5 Pro | 4.4x | 3.2 | 59.4% | 52.7% |
| GPT-4o | 10.8x | 2.1 | 59.9% | 55.5% |
| Claude 3.5 Sonnet | 8.0x | 3.3 | 62.9% | 57.1% |
| Domain-Specific AI Agents | 1.0x* | 2.1 | 82.7% | 72.0% |
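The Accuracy and Stability columns can be read through the pass^k metric popularized by τ-bench: for each task with n trials and c successes, the probability that k independently sampled trials all succeed is estimated as C(c, k) / C(n, k), averaged over tasks. The sketch below assumes CLASSic's pass^2 follows that definition; with k = 1 the estimator reduces to the mean per-task success rate, i.e. pass@1.

```python
from math import comb
from typing import Sequence


def pass_hat_k(successes_per_task: Sequence[int], n_trials: int, k: int) -> float:
    """Estimate pass^k: the chance that k trials of a task all succeed, averaged over tasks.

    successes_per_task[i] is the number of successful trials (out of n_trials) on task i.
    """
    if not 1 <= k <= n_trials:
        raise ValueError("need 1 <= k <= n_trials")
    if not successes_per_task:
        raise ValueError("need at least one task")
    return sum(comb(c, k) / comb(n_trials, k) for c in successes_per_task) / len(successes_per_task)
```

For four tasks run twice each with success counts `[2, 1, 0, 2]`, pass^1 is 0.625 but pass^2 is 0.5: the task that succeeded only once counts toward accuracy but not toward stability, which is why every agent's stability score sits below its accuracy.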

Based on the results for the IT domain, domain-specific AI agents emerged as the performance leader in enterprise IT operations, clearly outperforming AI agents built directly on foundation models. With the highest accuracy (82.7%) and the highest stability score (72.0%) in this comparison, domain-specific AI agents deliver significantly more reliable results at a fraction of the cost: the agents built on frontier models cost 4.4x to 10.8x as much. Combined with a response time of 2.1 seconds, tied with GPT-4o for the fastest, this demonstrates the optimization that domain-specific AI agents bring to enterprise IT environments, where speed, reliability, and cost-efficiency are mission-critical.

Key findings include:

- Domain-Specific Advantage: Domain-specific AI agents excel in accuracy and stability, outperforming agents built directly on top of general-purpose models by leveraging domain specialization. AI agents built over general-purpose LLMs often lack enterprise-specific knowledge (e.g., understanding “What does Error 5 mean?” requires product-specific insights), requiring additional fine-tuning for comparable results.

- Cost-Performance Trade-offs: AI agents built on Claude achieve the second-best accuracy (62.9%), but at 8.0x the cost of domain-specific AI agents, highlighting the need for cost-efficient benchmarks and balanced evaluations beyond accuracy.

- Latency and Usability: AI agents built on GPT-4o on a dedicated Azure endpoint provided the fastest responses, matched at the reported precision (2.1 s) by domain-specific AI agents. Further improvements can be achieved with caching and parallelization.

- Security: Agents built on frontier models like Gemini and GPT-4o were more vulnerable to prompt manipulation attacks than specialized systems such as domain-specific AI agents. Adversarial testing and standardized protocols are critical for building resilient AI systems.


Discussion and Future Work

Implications for Enterprise AI Adoption

CLASSic reveals trade-offs in enterprise AI deployments. An AI agent that excels in accuracy but is cost-prohibitive or slow may not be feasible for a given use case. Conversely, an AI agent that is not only highly accurate but also cost-effective, stable, and secure could offer greater long-term value. Enterprises can use CLASSic to navigate these complexities more effectively than with accuracy metrics alone.
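One simple way to make these trade-offs explicit is a Pareto (non-dominance) filter: an agent is discarded only if another agent is at least as good on every dimension and strictly better on one. The sketch below applies this to the IT-domain table; it covers the four reported dimensions only, since no security scores are tabulated, and it is an illustrative analysis rather than part of the CLASSic scoring.

```python
from typing import Dict, List, NamedTuple


class Result(NamedTuple):
    cost_x: float     # cost relative to the baseline agent (lower is better)
    latency_s: float  # seconds (lower is better)
    accuracy: float   # pass@1 in percent (higher is better)
    stability: float  # pass^2 in percent (higher is better)


def dominates(a: Result, b: Result) -> bool:
    """True if a is no worse than b on every dimension and strictly better on at least one."""
    no_worse = (a.cost_x <= b.cost_x and a.latency_s <= b.latency_s
                and a.accuracy >= b.accuracy and a.stability >= b.stability)
    strictly_better = (a.cost_x < b.cost_x or a.latency_s < b.latency_s
                       or a.accuracy > b.accuracy or a.stability > b.stability)
    return no_worse and strictly_better


def pareto_front(results: Dict[str, Result]) -> List[str]:
    """Names of agents that no other agent dominates."""
    return [name for name, r in results.items()
            if not any(dominates(other, r) for o, other in results.items() if o != name)]


# Values copied from the IT domain results table.
IT_RESULTS = {
    "Gemini 1.5 Pro": Result(4.4, 3.2, 59.4, 52.7),
    "GPT-4o": Result(10.8, 2.1, 59.9, 55.5),
    "Claude 3.5 Sonnet": Result(8.0, 3.3, 62.9, 57.1),
    "Domain-Specific AI Agents": Result(1.0, 2.1, 82.7, 72.0),
}
```

On the full table only the domain-specific agents survive the filter. Among the three frontier-model agents alone, none dominates another (Gemini is cheapest, GPT-4o fastest, Claude most accurate), so choosing between them requires weighing the dimensions against a specific use case.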

Future Directions

This work represents the first step towards a more holistic evaluation of AI agents for real-world enterprise workflows. We hope to address several limitations in future work.

- Expanding the Number of Domains and Conversations

- Incorporating Additional Security Evaluations

- Measuring Self-Improvement Capabilities

- Developing Industry Standard Evaluations

- Evaluating Retrieval-Based Systems

Conclusion

CLASSic sets a new standard for evaluating enterprise AI agents, moving beyond accuracy to encompass cost, latency, stability, and security, and grounding evaluation in real-world conversations from enterprise use cases.

Our holistic approach aligns closely with recent calls to address cost-awareness, adversarial resilience, and human feedback dynamics [1, 3]. By embracing a more operationally relevant evaluation paradigm, enterprises can make better-informed decisions, selecting agentic applications that are not only accurate but also cost-effective, responsive, stable, and secure.