What are Small Language Models?

Small Language Models (SLMs) are highly efficient, specialized AI models that understand and generate human language. With millions to a few billion parameters, they are much smaller than Large Language Models (LLMs). This compact architecture lets SLMs perform well on specific tasks without large amounts of compute, which makes them well suited to on-device, enterprise, and real-time use cases where speed, cost, and data privacy matter.

Unlike large language models, SLMs are optimized for efficiency. They are designed to perform targeted language tasks accurately without large-scale AI infrastructure, high-end GPUs, or large amounts of memory, so they can be used in real-time, privacy-sensitive, or bandwidth-limited scenarios. In this article, we’ll dive into how SLMs work, what makes them different from big foundation models, their use cases, and why they are the future of AI.

Why are SLMs Becoming a Major Trend in AI?

Despite their small size, SLMs can handle a wide range of complex natural language processing (NLP) tasks like machine translation, sentiment analysis, and text generation. Their lower hardware requirements mean less energy consumption and better sustainability, a key consideration in modern AI system design.

As large language models like GPT-4 and Claude grow more complex, they require more AI infrastructure (especially GPUs), energy, and oversight. That raises questions around scalability, privacy, and cost. This is why SLMs are trending in AI: companies are looking for smaller, more specialized models that deliver high performance without the overhead.

SLMs are the answer; they are faster to deploy, easier to fine-tune on your own data, and more aligned with real-world business use cases. These efficient models are designed to do one thing well while using fewer resources than larger models. Whether it’s IT operations, customer support, or healthcare workflows, SLMs give you targeted insights while minimizing latency and infrastructure cost.

SLM vs. LLM: A Head-to-Head Comparison

Both SLMs and LLMs are built on the same principles, but their differences in scale lead to fundamentally different strengths, costs, and applications. The choice between them is entirely dependent on the business problem you need to solve.

To make that choice concrete, we’ll compare the two across six dimensions: Scope, Accuracy, Performance, Cost, Security, and Customization.

| Feature | Small Language Models (SLMs) | Large Language Models (LLMs) |
| --- | --- | --- |
| Scope & Focus | Narrow and Deep: Trained on curated data for specific domains. | Broad and General: Trained on vast, internet-scale data. |
| Accuracy | High accuracy on specialized tasks; low risk of hallucination. | High general knowledge; can hallucinate on niche topics. |
| Performance | Fast & Low Latency: Ideal for real-time, on-device use. | Slower inference; requires powerful cloud infrastructure. |
| Cost | Low: Cheaper to train, fine-tune, and operate. | High: Expensive infrastructure and operational costs. |
| Security & Privacy | High: Can be deployed on-premises for maximum data control. | Lower: Often reliant on third-party APIs, raising privacy concerns. |
| Customization | Agile: Easy and fast to fine-tune on proprietary data. | Complex: Resource-intensive and slow to fine-tune. |

1. Scope and Training Data

LLMs like GPT-4 are trained on massive, diverse internet-scale datasets, so they are versatile across a wide range of topics. But this general-purpose design can lead to poor performance on complex domain-specific tasks where industry jargon, regulations, or workflow nuances are key.

SLMs are trained on smaller, more focused datasets tailored to specific domains or enterprise knowledge bases. So they are better suited for tasks that require precision, contextual relevance, and organizational alignment.

2. Accuracy and Hallucination Control

One of the downsides of LLMs is the risk of AI hallucination, generating responses that are fluent but factually incorrect. This is often due to the broad, uncontrolled nature of their training data.

SLMs mitigate this by being fine-tuned on curated, domain-relevant data. When combined with techniques like knowledge distillation and Retrieval-Augmented Generation (RAG), small models can deliver high-accuracy outputs with reduced hallucination risk, even approaching LLM-level performance on specific NLP tasks.
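To make the RAG idea concrete, here is a minimal Python sketch. It assumes a toy in-memory document list and ranks documents by simple word overlap; a production pipeline would more likely use embeddings and a vector store. The function names `retrieve` and `build_prompt` and the sample documents are hypothetical, and the resulting prompt would be passed to whichever SLM you deploy.

```python
import re

# Hypothetical knowledge base; in practice this would be curated domain documents.
DOCS = [
    "Password resets are handled through the self-service portal.",
    "VPN access requires manager approval and a registered device.",
    "Expense reports must be submitted within 30 days of purchase.",
]

def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, k=1):
    """Return the k documents that share the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, docs, k=1):
    """Ground the model by placing retrieved context ahead of the question."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How do I get VPN access?", DOCS))
```

Because the model is told to answer only from the retrieved passage, it has less room to invent facts, which is the hallucination-control effect described above.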

3. Performance and Deployment Flexibility

Due to their massive parameter count, LLMs require high-performance AI infrastructure, such as GPUs or TPUs, and are typically deployed via cloud APIs. SLMs are compact and lightweight, so they are well suited to on-device, edge, or offline deployments. Their smaller size means faster inference and lower latency, which is critical for real-time applications like virtual assistants and chatbots.
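A rough way to see why size matters for deployment is to estimate the memory needed just to hold the weights. The sketch below is a back-of-the-envelope calculation, not a sizing tool: the helper name `weight_memory_gb` and the two model sizes are illustrative assumptions, and it ignores activations, the KV cache, and runtime overhead.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

# Illustrative parameter counts (assumptions, not measurements of specific models).
for name, params in [("3.8B model", 3.8e9), ("70B model", 70e9)]:
    for bits in (16, 4):
        print(f"{name} at {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a 3.8B-parameter model needs about 7.6 GB at 16-bit precision and about 1.9 GB at 4-bit, which is within reach of a phone or laptop, while a 70B-parameter model needs about 140 GB at 16-bit.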

4. Cost and Operational Efficiency

LLMs have high operational costs due to their compute requirements, especially when scaled for enterprise use. This includes training and inference costs and associated infrastructure expenses.

SLMs are a more cost-effective alternative requiring fewer resources for training and deployment. So they return a better ROI with AI applications and are ideal for enterprises that want AI capabilities without the budget overhead of large models.

5. Security and Data Privacy

LLM security has always been a concern, as deploying AI models via external APIs can expose organizations to privacy and data security breaches, especially when handling sensitive or regulated data. SLMs deployed in secure on-premises environments give organizations more control over data flow. Their smaller size makes them easier to audit and manage from a compliance perspective and reduces the risk of sensitive data leakage.

6. Customization and Fine-Tuning

LLMs can be fine-tuned, but this often requires significant compute resources and data preparation. Even then, fine-tuning may not fully align the model with business-specific needs. SLMs are more customizable. With the right data science skills, they can be adapted through domain-specific fine-tuning and RAG pipelines to improve context and performance. Together, these techniques deliver relevance, precision, and alignment with business goals.

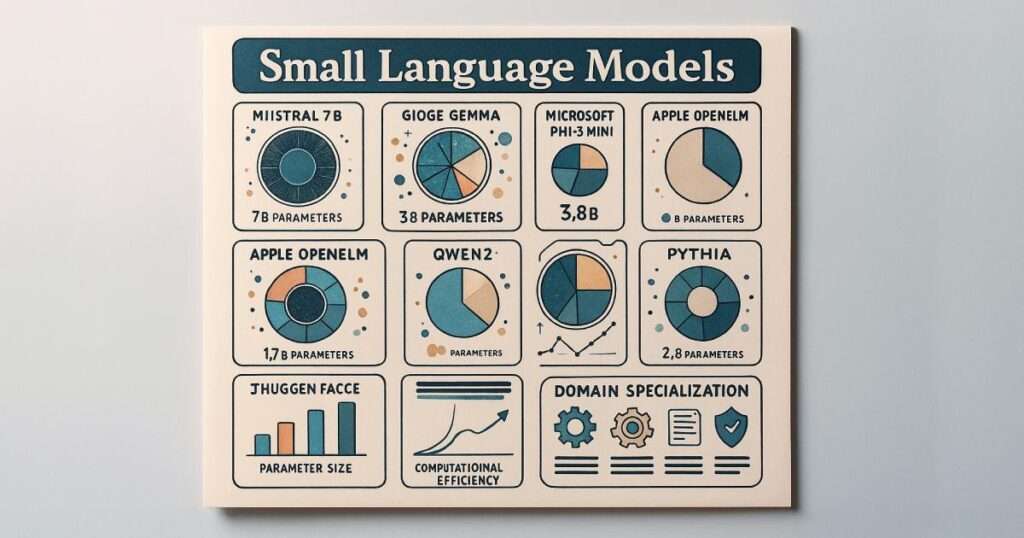

Popular Examples of Small Language Models

The versatility and operational efficiency of Small Language Models are best demonstrated through their applications in domain-specific tasks and resource-constrained environments. These models excel in focused use cases such as code generation, content creation, and task-specific dialogue, where they sometimes outperform larger models due to their precision and specialization. Below, we look at several notable models, starting with the high-performing phi-3-mini.

- Phi-3 Mini: A high-performance lightweight model and one of the newest SLMs. With 3.8B parameters trained on 3.3T tokens, phi-3-mini matches the performance of larger models like Mixtral 8x7B and GPT-3.5. It scores 69% on MMLU and 8.38 on MT-Bench and is small enough to run on mobile devices.

  Much of its strength comes from data curation: filtered web content and synthetic datasets chosen to support safety, accuracy, and robustness in dialog. The model uses self-attention to focus on semantically important tokens and a student-teacher training approach to distill knowledge from larger models. This allows phi-3-mini to produce high-quality output at a fraction of the inference cost, showing the practicality of SLMs in real-world AI deployments.

- Mistral 7B: A general-purpose transformer model from Mistral AI. Despite its 7B parameters, it’s optimized for efficiency across language understanding and generation tasks.

- Google Gemma: The Gemma series includes models like Gemma 2B and Gemma 7B, which balance model size against performance. These models are suitable for both research and production.

- Apple OpenELM: OpenELM (Efficient Language Models) models are built for on-device inference, with sizes ranging from 270M to 3B parameters. They are optimized for memory efficiency and low-latency mobile apps.

- Qwen2: Developed by Alibaba, Qwen2 is a multilingual model family with sizes from 0.5B to 7B, supporting various language tasks and deployment scenarios.

- LLaMA 3.1 8B: Meta’s LLaMA 3.1 8B is a compact model that retains LLM-level capabilities. It’s a good fit for fine-tuning and domain adaptation.

- Pythia: A series of open models focused on interpretability, coding, and reasoning, with sizes from 160M to 2.8B parameters. Pythia is used in academic and benchmarking settings.

- SmolLM2-1.7B: Developed by Hugging Face, this model is trained on curated open datasets and is the latest in efficient model design for targeted NLP tasks.

How Do SLMs Work? The Technology Behind the Efficiency

Small Language Models (SLMs) are defined by a much lower parameter count, typically millions to a few billion, than Large Language Models (LLMs), which range from tens of billions to trillions of parameters. This smaller size allows SLMs to deliver task-specific performance while retaining language understanding and generation capabilities.

Efficient Design

SLMs are designed to be computationally efficient. Their smaller size means they can run in resource-constrained environments such as mobile devices, edge hardware, and low-footprint cloud deployments. It also means data can be processed locally, which is critical for IoT systems and organizations in regulated industries.

Despite being smaller, SLMs can perform as well as larger models on narrow tasks. This is achieved through model compression, which reduces model complexity with little loss in performance, and architectural simplifications that speed up inference.

Optimization Through ML Techniques

SLMs use a range of machine learning techniques to optimize efficiency and performance:

- Knowledge Distillation: A smaller model (student) is trained to mimic a larger pre-trained model (teacher), inheriting its accuracy and reasoning with fewer parameters.

- Transfer Learning: SLMs are pre-trained on large general corpora then fine-tuned on domain-specific datasets. This two-phase training process enables strong performance with minimal data and reduces training costs.

- Pruning and Quantization: Pruning removes redundant weights, and quantization reduces numerical precision. Both shrink model size and inference latency with little impact on accuracy.

- Low-Rank Factorization: Matrix decomposition reduces the parameter space, further speeding up inference and improving memory efficiency.
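The savings from low-rank factorization can be shown with simple arithmetic. The sketch below assumes a single m x n weight matrix approximated as the product of an m x r matrix and an r x n matrix; the layer size and rank are illustrative choices, not values from any specific model, and the helper names are hypothetical.

```python
def dense_params(m, n):
    """Parameters in a full m x n weight matrix."""
    return m * n

def low_rank_params(m, n, r):
    """Parameters when the matrix is approximated as (m x r) @ (r x n)."""
    return r * (m + n)

m, n, r = 4096, 4096, 64  # illustrative layer size and rank (assumptions)
full = dense_params(m, n)
factored = low_rank_params(m, n, r)
print(f"dense: {full:,} params, factored: {factored:,} params, "
      f"reduction: {full / factored:.0f}x")
```

With these numbers the layer shrinks from 16,777,216 to 524,288 parameters, a 32x reduction. How much accuracy survives depends on how well the original matrix is approximated at the chosen rank.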

These techniques work together to create compact yet capable models that are faster to train, easier to deploy, and more adaptable to domain-specific requirements.
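As an illustration of knowledge distillation, the following sketch computes one common form of the distillation loss: the KL divergence between temperature-softened teacher and student output distributions. This is a simplified, single-example version written in plain Python; the logits are made up, and real training would combine this term with a standard task loss inside a deep learning framework.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for one token
close_student = [3.5, 1.2, 0.4]  # student that roughly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # student that disagrees with the teacher

print("close student loss:", distillation_loss(close_student, teacher))
print("far student loss:  ", distillation_loss(far_student, teacher))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimizing it pushes the smaller model toward the larger model's behavior.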

Interpretability and Iteration Speed

The simpler structure of SLMs also makes model interpretability easier, so data scientists can debug, audit, and explain decision-making paths. Smaller architectures also shorten development cycles, speeding up fine-tuning and adaptation to new data trends or operational changes.

But tuning is key. Too much compression or pruning can degrade performance, so optimization has to find a balance between model efficiency and task-specific accuracy.
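The trade-off can be seen in a small quantization experiment. This sketch applies symmetric uniform quantization to a handful of made-up weights at different bit widths and measures the reconstruction error. The weights and helper names are hypothetical, the code assumes at least one non-zero weight, and real toolchains use more refined schemes such as per-channel scales.

```python
def quantize(weights, bits):
    """Symmetric uniform quantization: snap floats to a signed integer grid and back."""
    levels = 2 ** (bits - 1) - 1  # e.g. 127 for 8-bit
    scale = max(abs(w) for w in weights) / levels  # assumes a non-zero weight exists
    return [round(w / scale) * scale for w in weights]

def mean_abs_error(original, approx):
    """Average absolute difference between original and dequantized weights."""
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)

weights = [0.82, -0.41, 0.05, -0.97, 0.33, 0.61, -0.12, 0.27]  # hypothetical weights

for bits in (8, 4, 2):
    error = mean_abs_error(weights, quantize(weights, bits))
    print(f"{bits}-bit: mean absolute error = {error:.4f}")
```

The error rises as the bit width drops, which is why aggressive compression needs to be validated against task accuracy before deployment.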

Small Language Models Key Use Cases: Where SLMs Excel

Because of their speed and specialization, SLMs can be the future of agentic AI, powering autonomous systems that excel at specific high-value tasks. They can run on-device with low latency, so they are well suited to applications that require immediate decisions and actions.

1. Customer Service Automation

SLMs power AI assistants that hold natural conversations, handle FAQs, and provide end-to-end support, improving both customer experience and operational efficiency. Lightweight models are well suited to this use case because they combine low compute requirements with strong performance and can run on a mobile device.

- Micro Language Models for Customer Support: Micro Language Models or Micro LLMs represent a class of SLMs purpose-built for high-traffic customer support scenarios. These models are fine-tuned to understand recurring customer queries, product-specific terminology, and company policies, enabling them to respond accurately to a wide range of customer needs.

For instance, an IT services firm might implement a Micro LLM trained on historical support tickets, product documentation, and troubleshooting guides. This allows the model to autonomously handle common issues, provide guided steps for resolution, and escalate complex cases to human agents. The result is reduced response latency, improved customer satisfaction, and better agent utilization.

2. Domain-Specific SLMs in Healthcare

A prominent example of SLMs in action is their use in the healthcare sector. Domain-specific models are fine-tuned from general-purpose base models to specialize in medical terminologies, procedures, diagnostics, and patient communication. Trained on structured and compliant datasets, such as medical journals, anonymized clinical records, and healthcare literature, they deliver contextually accurate outputs tailored to clinical needs.

Applications include summarizing electronic health records (EHRs), generating diagnostic insights from symptom descriptions, and synthesizing research publications for clinicians. Due to the critical nature of healthcare, these models are evaluated not only on language understanding but also on mathematical reasoning tasks that underpin medical data interpretation. Embedding techniques help retain the positional and semantic integrity of medical terms, contributing to their high performance in real-world healthcare deployments.

3. Other SLM Use Cases

- Language Translation Services: Small models perform real-time translation to bridge language gaps in international communications and interactions.

- Sentiment Analysis: SLMs gauge public opinion and customer sentiment, providing feedback that helps adjust marketing strategies and product offerings.

- Market Trend Analysis: By analyzing market trends, SLMs help businesses optimize their sales and marketing strategies and run more targeted, effective campaigns.

- Innovative Product Development: With data analysis capabilities, SLMs enable companies to innovate and develop products that meet customer needs and preferences.

Small Language Model Limitations

While Small Language Models offer targeted efficiency and deployability, they’re not without trade-offs. Knowing these limitations is key to aligning model capabilities with enterprise needs.

1. Niche Focus and Limited Generalization

The domain-specific focus that gives SLMs their efficiency also limits their scope. Trained on narrow datasets, SLMs often lack the general world knowledge found in large models, so they may underperform on open-ended questions or unfamiliar topics.

To meet broader needs, organizations may need to deploy and manage multiple SLMs, each fine-tuned for a specific domain or task. This adds architectural complexity and integration challenges across the AI stack.

2. Rapid Innovation and Resource Constraints

The NLP landscape is moving fast, with new model designs, training techniques, and LLM evaluation methods emerging all the time. Staying current with these innovations and keeping deployed models performant requires ongoing technical investment.

Also, fine-tuning and maintaining SLMs require specialized skills in machine learning, data preprocessing, and model evaluation. Not all organizations have the in-house expertise to fully leverage SLM customization, which may limit adoption or effectiveness.

3. Evaluation and Model Selection Challenges

As interest in small language models grows, the market is seeing a flood of new SLMs. Each model is optimized for different tasks, datasets, or architectures, so performance comparisons are tricky.

Benchmark scores can also be misleading. Without careful analysis of parameter size, token distribution, training data quality, and inference constraints, organizations may struggle to choose the right model for their needs. Choosing the right SLM requires domain knowledge and an understanding of practical deployment considerations.

The Future of Small Language Models

We are moving away from the “one model to rule them all” philosophy toward orchestrated agent systems. By assigning specific SLMs to distinct roles within an agentic workflow, such as planning, tool usage, or verification, we create a system that is greater than the sum of its parts.

While Large Language Models dominate the cloud, the future of Agentic AI lies at the edge, and Small Language Models are the key to unlocking it. Standalone SLMs often hit a ceiling when faced with multi-step AI reasoning, but a modular, agentic approach removes these barriers.

Instead of relying on one monolithic model, developers are now building multi-agent systems. Here, specialized SLMs are chained together to form autonomous workflows:

- Agent A (Router): Instantly triages user intent.

- Agent B (Retrieval): Fetches domain-specific data.

- Agent C (Synthesizer): Formulates the final response.

This distributed agentic framework maximizes accuracy and drastically reduces latency, allowing complex, intelligent behaviors to run locally on devices without the massive computational overhead of traditional LLMs.
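The three-agent workflow above can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: in a real system each function would call a separate specialized SLM, whereas this sketch uses a keyword rule, a dictionary lookup, and a string template so that the control flow is easy to follow.

```python
# Hypothetical domain data; a real Agent B would query a document store or API.
KNOWLEDGE = {
    "billing": "Invoices are issued on the first business day of each month.",
    "it_support": "Restart the VPN client, then sign in again with your SSO account.",
}

def route(query):
    """Agent A (Router): triage the user's intent with a simple keyword rule."""
    text = query.lower()
    if "invoice" in text or "bill" in text:
        return "billing"
    return "it_support"

def fetch(intent):
    """Agent B (Retrieval): look up domain-specific data for the intent."""
    return KNOWLEDGE[intent]

def synthesize(query, context):
    """Agent C (Synthesizer): compose the final response from the retrieved context."""
    return f"Regarding '{query}': {context}"

def run_pipeline(query):
    """Chain the three agents: route, then retrieve, then synthesize."""
    intent = route(query)
    return synthesize(query, fetch(intent))

print(run_pipeline("When will I receive my invoice?"))
```

Keeping each role behind its own function means any one agent can be swapped for a better-suited model without touching the rest of the pipeline.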

Conclusions

In summary, comparing Small Language Models (or domain-specific LLMs) with general-purpose, LLM-based generative AI highlights the importance of tailoring AI models to specific industries. As companies deploy AI-driven solutions such as Conversational AI platforms in industry-specific workflows, developing domain-specific models becomes crucial. These custom models deliver better accuracy and relevance and amplify human expertise in ways that generic models can’t.
