An Introduction to AI Annotation

In the ever-evolving landscape of Artificial Intelligence (AI), the role of data annotation in AI, and by extension, data annotators have become increasingly indispensable. Their job might not be in the limelight, but it is their meticulous work that sets the success for AI foundational model development.

In this article, we take a deep dive into the pivotal role of data annotation in the calibration of AI Models for Enterprise Virtual Assistants.

What is Data Annotation in AI Models?

In simple terms, AI annotation also known as data annotation is the process of assigning “annotations” or “labels” to specific pieces of data that enable AI systems to learn from it effectively. This can include techniques like active learning, where the AI model suggests data labeling, or using AI tools to pre-label data, which human annotators then review.

These data can come in different formats such as images, videos, text, and audio. The role of the data annotator is to identify and highlight critical information within the data and tag it with pertinent labels to make it interpretable to AI systems.

For instance, if an AI model is being developed for an AI assistant specifically to recognize personally identifiable information (PII), the role of a data annotator becomes critical. They would need to carefully label each piece of PII, like names, social security numbers, mail addresses, bank account numbers, credit card numbers, and more. These labels or tags then equip the AI model to identify and categorize any given text as PII when it comes across such data in unseen text.

The journey of AI begins with the processing and understanding of real-world data and that’s where data annotation comes into the picture. It plays a critical role in transforming raw, unstructured data into a well-structured, machine-readable format, thereby forming the bedrock upon which robust and accurate AI systems are built. Thus, the dedicated task of the right data annotation tool enables the practical application and success of AI initiatives across a myriad of industries.

Why Data Annotation is Important?

In the bustling realm of Artificial Intelligence, one of the fundamental stepping stones that pave the way to successful AI deployments is high-quality annotated data. If you’ve ever heard the saying, “Garbage in, Garbage out,” you’ll know it’s a phrase that aptly captures the concept of how the quality of data collection, directly influences the functionality and efficiency of AI and machine learning models. In essence, the data you feed into an AI model to perform any given task has a direct bearing on the quality of the AI’s output – fantastic data yields fantastic results, while poor data quality often produces lamentably inferior results.

With this crucial truth in mind, it’s easy to grasp why the topic of data annotation has taken center stage in discussions revolving around AI. The AI community has awakened to the profound realization that without coherent, well-labeled data, their pursuit of constructing an effective AI model is, in fact, a wild goose chase.

Unraveling the Technicality and Ethicality of Data Annotation

Data annotation stands at the crossroads of two significant facets of AI – technicality and ethicality. As such, it wields immense power in shaping the perspectives generative AI and machine learning systems and models will adopt, becoming the fulcrum and the scale that balances technical proficiency with ethical responsibility.

Technical Aspect of Data Annotation

On the technical side, AI annotation is a procedure in which data annotators assign applicable labels to data points. Data that can be in different forms such as text, audio, video, or images is inspected, and pertinent information is highlighted or tagged. This operation makes raw data understandable to AI and machine learning algorithms.

The role of data annotators in this regard goes beyond just label assignment. Their expertise lies in understanding the nuances and intricacies of the data — for any given industry, domain, or organization –, and knowing which fragments are essential for the AI to comprehend and learn from. The accuracy of their annotation directly impacts the performance of the AI model, oftentimes being the differentiator between an effective and ineffective model.

For example, consider the development of an AI model for a virtual assistant. This model is designed to classify user requests into distinct categories – ‘actionable’ and ‘ambiguous’. In this context, data annotators play a critical role in labeling user requests and identifying specific characteristics that highlight their context. The accuracy of these labels dramatically impacts the AI assistant’s ability to appropriately categorize similar ambiguous requests in future user interactions.

If the data labeling is incorrect, it could skew the AI model’s performance, leading it to misinterpret an ambiguous user request as actionable. Such misinterpretations can have significant consequences. If the system erroneously deems a request to be actionable, it will attempt to provide a resolution, assuming it has all the necessary information. However, because the initial request was ambiguous and lacked specific details, the generated resolution could be inappropriate.

Therefore, precise and accurate semantic annotation is not just desirable but indispensable for creating an effective artificial intelligence model to avoid AI mistakes and AI hallucinations.

Ethical Aspect of Data Annotation

The ethical implications of AI annotation are critical, particularly when the annotations themselves can introduce bias into AI models. For example, AI tools used in annotation must be carefully designed to avoid perpetuating existing biases in the data, such as using diverse datasets and fair labeling services.

Bias in AI models is often a reflection of the biases present in the annotated data they learn from. It is, hence, the annotator’s responsibility to be vigilant and conscious during the annotation process, ensuring that labels do not reflect any personal biases, uncompliant acts, stereotypes, or discriminatory practices. Failure to do so can result in AI outcomes that are unfair or unjust.

A notable case is when AI models exhibit racial or gender bias as these models learned from data that was annotated without due consideration to these aspects. In such situations, the bias in outcomes isn’t a technological failure, rather it represents an ethical failure in data annotation.

Privacy is another critical ethical issue. Annotators frequently work with sensitive data, where individuals’ privacy could be compromised if not handled properly. Techniques such as anonymization and pseudonymization are employed to ensure that data is utilized for machine learning without infringing upon individual privacy rights.

In sum, data annotators hold an inherent dual role. They cater not only to the technical aspect of making data understandable and accurate to AI but also have the onus to ensure the ethical integrity of AI models. Ensuring equal emphasis on these two realms during data annotation will enable the creation of responsible AI models.

AI Annotation Techniques for Virtual Assistants

In today’s digital landscape, virtual assistants have evolved to provide a seamless omni-channel experience for users. This means that users can interact with virtual assistants through various channels like text, video, and voice. To enhance the user experience and provide better assistance, users often share images or video recordings with virtual assistants to provide additional context to the problems they are facing. This has led to virtual assistants being equipped to handle different types of data, including text, image, audio, and video, to perform their AI tasks effectively.

In this section, we will explore the popular types of data annotation tasks performed by data annotators for each of these data types.

Text Annotation

Text data annotation is used for NLP in virtual assistants so that AI models can understand what users are saying and act on their text requests. The most popular types of text data annotations for virtual assistants are:

- Sentiment Analysis: Annotators annotate text data based on the sentiment it represents, i.e., positive, negative, or neutral. This allows AI models to understand the sentiment behind user queries or feedback, enabling virtual assistants to respond appropriately by sentiment annotation.

- Intent Recognition: Text annotation is based on intent, i.e., confirmation, command, request, etc. This helps AI models classify user intents accurately and provide the desired response.

- Named Entity Recognition (NER): Annotators tag parts of speech (nouns, pronouns, verbs, adverbs, etc.), key phrases, and names as entity annotation. This enables AI models to identify and extract important information from user queries or statements.

- Linguistic: Annotators tag emphasis, natural pauses, definitions of words, related words, and replacement words. This helps AI models understand the nuances and context of user messages, allowing virtual assistants to provide more accurate and contextual responses.

For example, “In text annotation, AI-driven tools can assist annotators by suggesting sentiment labels or entity recognition tags based on pre-trained language models. This combination of human expertise and AI capabilities is what defines AI annotation.

Audio (Voice) Annotation

Audio (Voice) Annotation is the annotation type used to produce training data for voice-enabled virtual assistants. The most popular types of audio data annotations for virtual assistants are:

- Speech-to-Text Transcription: Annotators listen to the audio and tag each word, training an AI model to identify words from audio files and create a transcript. This enables virtual assistants to understand and respond to user queries or commands received in audio format.

- Natural Language Utterance: Annotators work with samples of human speech based on natural language processing (NLP) and tag them for dialects, emphasis, context, etc. This helps AI models understand variations in human speech patterns and accents, allowing virtual assistants to provide accurate and natural responses.

- Speech Labeling: Annotators tag different segments of an audio file with keywords representing objects, tasks, etc. This enables virtual assistants to identify specific actions or entities mentioned in an audio input and respond accordingly.

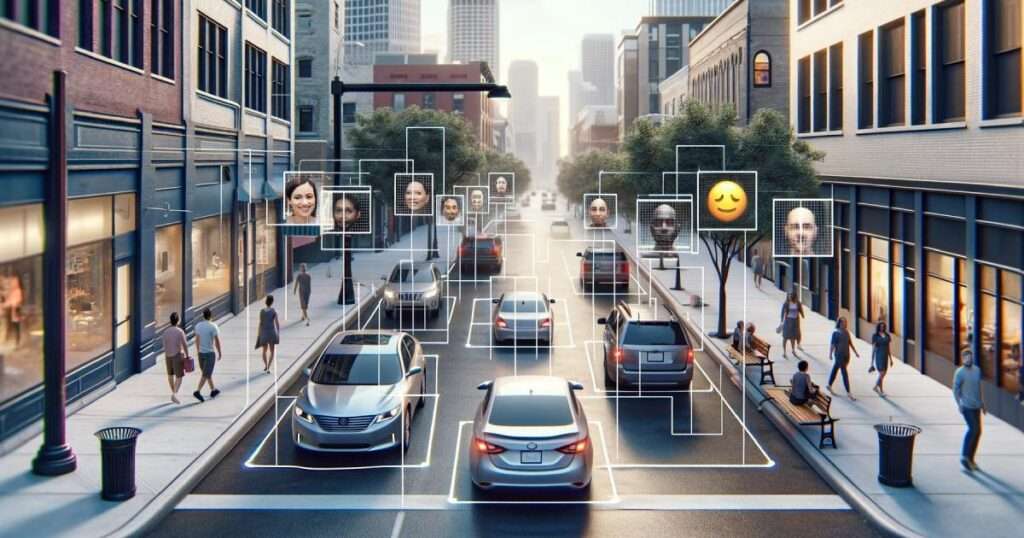

Video Annotation

Video data has all three components. For virtual assistants, the most popular types of video annotations are:

- Object Detection: Annotators identify, and label objects present in the video frames, such as shell screen, shell commands, etc. This helps AI models recognize objects and understand their context in the video, also tracking objects in the video to enable virtual assistants to provide more accurate and context-aware responses.

- Emotion Recognition: Annotators analyze the facial expressions and body language of the user to determine the emotions expressed in the video, such as happiness, sadness, or anger. This helps AI models understand the emotional context of the individual, enabling virtual assistants to respond with empathy or adjust their behavior accordingly.

Image Annotation

Image Annotation is used for various AI tasks, including object recognition and detection, labeling images, classification, and segmentation. The most popular types of image annotation tools are Image Semantic Segmentation and Object Classification. Annotators annotate and separate different objects or regions in an image, allowing AI models to understand the boundaries and relationships between various objects present in the image.

Object classification then helps virtual assistants to focus on the important objects present in images (like shell windows and associated shell commands, or a table in Microsoft Excel, etc.) to extract meaningful information and hence provide more targeted and precise responses or actions based on such insights.

Data Annotators: Tailors of Enterprise AI Models

Data annotators perform an invaluable task of shaping the datasets that AI Models need to operate effectively, especially within enterprise environments. Their work involves labeling tools and curating data to calibrate and tailor the behavior of AI Models for a wide range of enterprise-specific tasks.

This includes generating specific ontologies to help models understand industry-specific lexicons; recognizing key intents to ensure user requests are properly understood; detecting and labeling sentiments to gauge customer emotions; categorizing user requests to help models address common issues accurately; classifying domains within customer queries to streamline services; predicting ticket fields automatically to enhance efficiency in customer service; and much more. All these datasets, expertly prepared by data annotators, make AI Models more effective, and accurate—ultimately contributing to more intelligent workflows within enterprises.

The following examples illustrate the crucial role of data annotators in various segments of data preparation for an enterprise virtual assistant:

Ontology Formation

Multiple functional sectors operate simultaneously within a corporate enterprise, frequently referred to as enterprise domains. These domains can range from HR and IT to Legal, Procurement, Finance, and more. Annotators play a key role in accumulating and refining data, enabling language models to fully comprehend specific linguistic norms, term interrelations, and domain-specific terminologies.

For instance, in the IT domain, terms like “bug”, “patch”, or “malware” have different meanings than in the common language. Similarly, in Finance, “liquidity”, “equity”, or “amortization” have definitions. The role of annotators includes ensuring that language models can accurately interpret such terms within their respective domain context.

Intent Recognition

Recognizing the central motive or intention in any user interaction or communication is paramount, this is referred to as intent annotation. Take for instance, in the IT domain, a user query may be “My email is no longer syncing”, the underlying or intent annotation is to resolve the email syncing issue.

Similarly, in an HR context, an employee might request “Need information on maternity leave policy”, with the primary intent of gathering details on the maternity leave provisions. Data annotators are tasked with spotting these key intents and tagging them accurately. Consequently, this enables AI models to address and serve the user requirements correctly.

Domain Categorization and Training Data

In the context of a multi-domains virtual assistant setup, each enterprise domain usually has a dedicated virtual assistant, highly specialized in the distinct field. To ensure that a user request is correctly routed to the appropriate assistant, annotators train AI models to sort user requests into these specific business domains.

An example could be a request for a change in W-2 elections which would be classified under the HR domain, or a query for computer vision troubleshooting that would be tagged under the IT domain. This delineation allows for the sophisticated AI system to direct the user’s inquiries to specialized, domain-specific virtual assistants or human service agents when necessary.

Sentiment and Empathy Detection

This process involves labeling data based on emotions and empathetic responses within user communication, helping AI models to understand human emotions better, annotate data, and use it to dynamically adjust the tone and writing style in their responses back to the user. For instance, annotators can tag a user’s feedback as “happy”, “frustrated”, “confused”, etc., thus aiding in the model’s ability to respond appropriately.

Request Disambiguation

this requires data annotators to meticulously sift through user requests and ascertain their statuses as either actionable as-is or ambiguous. Their role is pivotal to the optimization process for virtual assistants across various enterprise domains such as IT, HR, Procurement, and Finance.

The data preparation process involves deciphering the vagueness in user requests like “Install Teams”, delineating it as being ambiguous due to the missing requisite details such as the type of device and its particular operating system. This level of detailed data preparation is of utmost importance in preventing the misclassification of requests.

An incorrect classification could force the virtual assistant into providing a rushed and likely erroneous response due to lacking essential specifics. Such mistakes could not only degrade the quality of service from the digital assistant but also elevate user frustration through unmet expectations.

Ticket Field Auto-Prediction

This involves annotating data to predict ticket fields automatically. For example, a data annotator may note patterns of relevant data, such as certain keywords or phrases, in tickets related to password reset requests and annotate these data points accordingly.

AI systems can then use these annotations to reroute similar issues to the correct department, reducing the need for manual routing and intervention. This reduces redundancy in service agent queues and resolves the same issue faster.

Quality Control in AI Annotation

Quality control in AI annotation can involve AI tools that detect inconsistencies or errors in labeled data, such as using machine learning algorithms to flag outliers or suggest corrections. Additionally, AI can be used to perform cross-validation checks, comparing different annotations to enhance consistency.

The importance of quality control in annotating data lies in its ability to identify and rectify errors, inconsistencies, and biases in the annotated data. When data annotators work on large volumes of data, mistakes are inevitable, ranging from mislabeling to incomplete annotations. Therefore, implementing robust quality control processes is crucial to minimize these errors and guarantee the integrity of the annotated datasets.

Achieving high-quality data annotation involves several key strategies and techniques. One such approach is the use of multiple annotators for the same data. These data annotation methods allow for comparison and agreement among the annotations, highlighting any discrepancies that need to be resolved.

By incorporating the opinions and judgments of different annotators, the chances of errors can be significantly reduced. Additionally, employing a consensus-based approach, where annotators discuss and collectively decide on the correct annotations, further improves the quality and accuracy of the annotated data.

Another technique for quality control is the introduction of a gold standard dataset. This dataset consists of pre-annotated, high-quality examples that serve as a benchmark for the annotators. By comparing their annotations with the gold standard, annotators can receive feedback on their performance and make the necessary adjustments. This iterative feedback loop helps in improving the annotation precision and consistency over time.

Furthermore, regular audits and evaluations of the annotated data are essential to maintain quality control. These audits can be done by experienced annotators or quality assurance specialists who review a sample of the annotated data to assess its accuracy and adherence to the annotation guidelines. Any issues or discrepancies identified during this process can be addressed promptly, ensuring the continuous improvement of the annotation quality.

Implementing quality control measures also involves proper documentation of data annotation requirements and guidelines and clear communication channels between data annotators and project managers. Well-defined guidelines provide clear instructions and criteria for annotations, reducing ambiguity and variability in the annotation process. Regular training sessions and communication enable annotators to stay updated on any changes or clarifications in the guidelines, improving consistency across the team.

In addition to these strategies, leveraging technology can enhance quality control in data annotation. Automated tools for error detection, such as spell checkers and consistency checkers, can be utilized to identify potential mistakes or inconsistencies in the annotations. These data annotation software and tools help streamline the quality control process and reduce manual effort.

In summary, quality control in data annotation is crucial for ensuring accurate and reliable training data for AI models. Through the use of multiple annotators, gold standard datasets, audits, clear guidelines, and technology, the quality and consistency of annotated data can be improved. By consistently implementing and monitoring quality control measures, virtual assistants can deliver more accurate and efficient interactions, ultimately enhancing the user experience.

The Cost-Benefit of Data Annotation

Hiring data annotators comes with an array of benefits, prominently being enhanced model efficacy, usability, and performance. However, for more considerable projects with complex requirements, costs may surge, factoring in training, data quality control, and data security and privacy.

Conversely, not employing data annotators has potential drawbacks. It can lead to unstructured, incorrectly labeled data resulting in inefficient, biased, and poorly performing AI models. There is also likely to be a lesser understanding of intricate domain-specific LLMs terminologies leading to ambiguities and inaccuracies in model functioning.

The critical question is, does the benefit outweigh the cost? By examining the role of data annotators carefully, it’s clear that their contribution to the accuracy, precision, and relevance of LLMs is substantial, thereby maximizing the potential of AI in an enterprise setting.

While the costs may seem high, the value brought by these experts to an AI project cannot be overstated. Hence, investing in data annotators is not merely about cost, but about guaranteeing the success and efficacy of AI initiatives.

Future Trends in AI Annotation

In an increasingly AI-driven world, the significance of AI annotation has skyrocketed as a critical enabler of emerging AI trends. With the rapid deployment of AI applications across diverse domains such as information technology, human resources, healthcare, security, manufacturing, banking, and more.

As we look towards what 2024 and beyond holds for data annotation, it’s evident that the sector is morphing into a colossal industry with plenty of captivating developments on the horizon.

Tailored Annotation for Industry-Specific Requirements

A notable wave sweeping the data annotation landscape is the escalating demand for annotation solutions that are meticulously tailored to address industry-specific complexities. As AI applications continue to evolve and diversify across sectors, it’s becoming critically important to have data annotation solutions that cater to the distinct needs of different sectors.

The Role of AI Annotation in Retrieval-Augmented Generation (RAG) Models

AI annotation is essential for enhancing Retrieval Augmented Generation (RAG) models and agentic RAG systems, which combine information retrieval with generative AI to produce contextually relevant responses.

By ensuring that the underlying data is accurately labeled and structured, AI annotation improves the quality of the information retrieved, leading to more precise and context-aware outputs. Additionally, annotated data helps train RAG and fine-tuning LLMs to better handle diverse data types, reduce AI hallucinations, and minimize bias, making them more effective across applications like customer support, healthcare, and legal services.

The Leap Towards Semi-Automated Annotation

Automation has been a long-standing vision for many industries, and data annotation is no exception. 2024 is set to witness notable strides towards semi-automation within data annotation processes. While full automation remains a tricky feat for more complex tasks, AI-fueled data annotation tools are gradually carving a niche for themselves, helping speed up processes and improve workflow. Alongside enhancing efficiency, this move also curtails human errors, translating into a valuable cost-saving measure for enterprises.

Fostering Ethical AI through Fair Data Annotation Practices

The importance of ethical AI and bias mitigation cannot be overemphasized. Similarly, in data annotation, there is a growing emphasis on ensuring the process is fair, unbiased, and transparent. Manual labeling introduces the risk of bias, as it’s dependent on the subjective interpretations of the annotator, and these biases can lead to skewed and discriminatory outcomes. To counter this, nowadays data annotators are asked to meticulously implement best practices and guidelines to ensure fairness, transparency, and impartiality.

Multimodal Annotation on the Rise

As Multimodal AI, which employs LLM embeddings to interpret varying data types like audio, text, video, and images, picks up speed, it looks set to redefine data annotation techniques. The incorporation of LLM embeddings allows for a deeper understanding and processing of diverse data types, meeting the growing customer demand for higher reliability and precision in AI task execution.

Enhanced Focus on Data Security and Privacy

In an era marked by a growing number of data breaches and stern regulatory overviews, strengthening LLM security is non-negotiable. Data annotation processes, thus, have a pressing need to bolster security and privacy measures in place. To safeguard against breaches, data annotation tool providers are adopting stringent encryption protocols, optimizing access controls, and actively ensuring compliance with data protection standards like GDPR and CCPA.

Conclusion

In conclusion, data annotators are instrumental in the burgeoning field of AI, particularly in large language models. They serve as a vital bridge, turning raw, unstructured data into machine-readable information, which is the lifeblood of functional AI models.

Their importance transcends the realm of technology, reaching into the ethical domain where they play a critical role in controlling AI bias and ensuring privacy. Their work in enterprises involves tailoring AI models to be industry, domain, and organization-specific, contributing to more intelligent workflows.

Despite the cost involved in hiring data annotators, their contribution in terms of accuracy, precision, and relevance to machine learning models is invaluable, making them a vital investment for any organization aiming to maximize the potential of AI.