AI Trends on the Timeline

One of the major AI trends is the astonishing progress in machine language capabilities, progress that is prompting fresh questions about the brain and even consciousness itself. Large Language Models (LLMs) trained on gargantuan volumes of human-generated language are a prime example. These models now produce fluent, human-like responses, inspiring wonder and sometimes fear.

Let’s get started with a few questions. How much true intelligence resides in today’s generative AI? How should we ensure its trustworthiness? And what do LLMs actually do, and how do those capabilities relate to what humans consider their own “intellectual property,” the product of a ‘real’ brain?

In this article, we will explore further questions: Will our efforts to grasp the essence of generative AI compel us to revise our definitions of intelligence and understanding? And, from a commercial perspective, will generative AI fulfill its potential to deliver enduring value to users and vendors?

As generative AI technology gains momentum in everyday workflows, it will influence and guide the future of AI tools. A recent IBM survey of over 1,000 employees at enterprise-scale companies shows the top three factors driving AI adoption to be:

1) advances in generative AI tools that make them more accessible;

2) cost reduction factors; and

3) the ability to automate key processes.

Given these drivers, we can expect AI to be integrated into standard off-the-shelf business applications at an increasing rate.

Advancements in AI Technologies

Since the 1956 Dartmouth workshop for which John McCarthy, then a Dartmouth College professor, coined the term “artificial intelligence,” we have witnessed numerous advances in areas such as computer vision and data science. The following paragraphs highlight the trending AI topics of the past few years. Throughout, it is crucial to ensure transparency, fairness, ethics, and compliance when implementing AI: carefully vet training data and algorithms for bias, and weigh controls and AI regulations alongside experimentation.

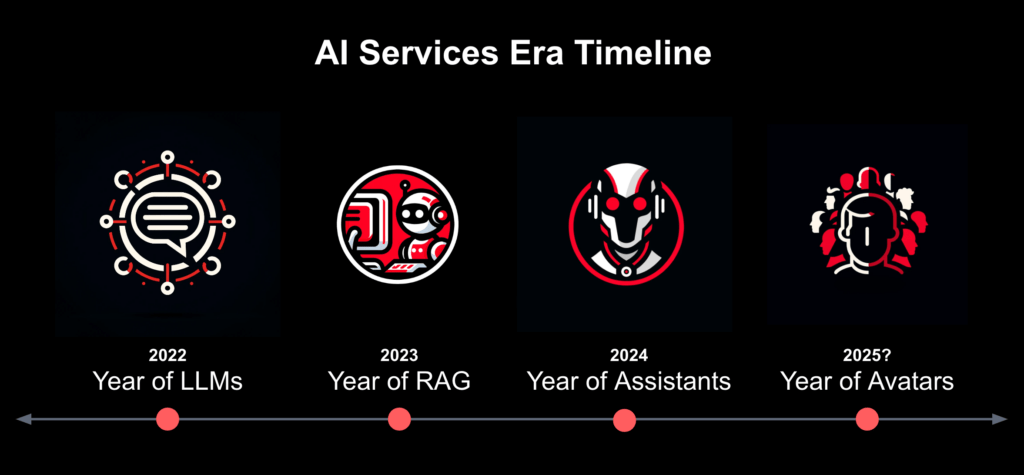

AI Trends on a Timeline

2023 was a year of almost Beatlemania-level enthusiasm about getting started with generative AI, alongside the steady forward march of LLMs. As 2024 winds down, businesses are increasingly eager to integrate generative AI into internal workflows and customer-facing applications.

LLMs on the AI Trend Line

Given this vortex of excitement and anticipation, developers are seeking techniques to improve LLM performance on domain-specific tasks. These initiatives are loosely termed “fine-tuning,” although the label sometimes covers techniques that don’t actually update an LLM’s weights. Because true fine-tuning is time- and resource-intensive, many developers instead tailor LLMs to domain-specific tasks through prompt engineering (crafting high-quality inputs).

RAG on the AI Trend Line

Retrieval-augmented generation (RAG) is a prompt-driven engineering tactic: an intermediate step between a user’s submission of a prompt and the LLM’s response. Before the model generates its answer, a RAG-enabled application retrieves additional relevant, high-quality information that was not part of the model’s training data and adds it to the prompt. Without retraining, such an application can deliver more relevant outputs and, importantly, fewer hallucinations.
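To make the retrieval step concrete, here is a minimal Python sketch of that flow. It assumes a tiny in-memory knowledge base (the three documents are hypothetical) and ranks them with simple bag-of-words cosine similarity; production RAG systems typically use embedding models and a vector database instead. The helper names `retrieve` and `build_rag_prompt` are illustrative, and the final call to an LLM is deliberately left out because it depends on whichever model API you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Insert retrieved context between the user's question and the model call."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base; a real application would index company documents.
knowledge_base = [
    "Refunds are processed within 5 business days of approval.",
    "Premium support is available 24/7 by phone and chat.",
    "Passwords must be reset every 90 days.",
]

prompt = build_rag_prompt("How long do refunds take?", knowledge_base, k=1)
print(prompt)
# The prompt would next be sent to an LLM of your choice (not shown).
```

Because the retrieved context travels inside the prompt, updating the knowledge base changes the model's answers without touching its weights, which is the key contrast with fine-tuning.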

It’s not difficult to foresee that agentic RAG adoption will grow throughout and beyond 2024. However, when evaluating whether a RAG-enabled application suits a given use case, businesses should consider the sources from which it retrieves data.

When comparing RAG with LLM fine-tuning, the key distinction is that RAG improves the relevance of outputs without retraining, while fine-tuning updates the model’s weights with domain-specific data.

Small(er) language models and open-source advancements

Work on domain-specific LLMs suggests that ever-larger parameter counts eventually reach a point of diminishing returns. Although massive models launched an AI phenomenon, they have their drawbacks. Only mammoth enterprises possess the funds and server space to train and maintain energy-ravenous models with hundreds of billions of parameters. One University of Washington estimate suggests that a typical day of ChatGPT queries consumes roughly as much energy as 33,000 U.S. households use in a day.

Small language models (SLMs), on the other hand, are far less resource-hungry. Training a smaller model on more data can outperform training a larger model on less data, so much LLM innovation now focuses on getting more output from fewer parameters. The emerging view is that models can be downsized without sacrificing much performance.

Three Important Benefits of Small Language Models

1- Smaller models help democratize AI: they can run at lower cost on more attainable hardware, empowering more amateurs and institutions to study, train, and improve existing models.

2- They can run locally on smaller devices. This allows more sophisticated AI in scenarios like edge computing and the Internet of Things (IoT). Furthermore, running models locally, such as on a user’s smartphone, helps sidestep the privacy and cybersecurity concerns that arise when sensitive personal or proprietary data must be sent elsewhere for processing.

3- They make AI more explainable: the larger the model, the more difficult it is to pinpoint how and where it makes important decisions. Explainable AI is essential to understanding, improving, and trusting the output of AI systems.

Trends in Hardware and AI Advancements

The rapid evolution of AI has driven significant advancements in hardware. Enhanced CPUs, GPUs, and specialized AI chips like TPUs are meeting growing computational demands. Edge computing is also gaining traction, enabling faster processing and enhanced privacy. Read on to explore how these innovations are shaping the future of AI applications.

Central Processing Unit (CPU)

CPUs are designed primarily for sequential computing, working through instructions largely one after another, with subsequent instructions waiting for their predecessors to complete. In contrast, AI chips harness parallel computing to execute numerous calculations simultaneously. For the large matrix operations at the heart of AI workloads, such parallel computing is dramatically faster and more efficient.

At its Ignite conference, Microsoft debuted chips designed specifically to execute AI computing tasks. Qualcomm and MediaTek also offer on-device generative AI capabilities through upcoming chipsets for flagship and mid-range smartphones. For AI workloads, conventional CPUs are increasingly being complemented, and often outpaced, by specialized processing units optimized for running AI models.

GPU shortages and cloud costs

The trend toward smaller models is driven by both necessity and business ambition, as cloud computing costs increase while hardware availability decreases. There is huge pressure not only for increased GPU production but also for innovative hardware solutions that are cheaper and easier to make and use. Cloud providers currently bear much of the computing burden: relatively few AI adopters maintain their own infrastructure, and hardware shortages will raise hurdles and costs of setting up on-premise servers. This may put upward pressure on cloud costs as providers update and optimize their own infrastructure to meet generative AI demands.

Model optimization is growing more accessible

Maximizing the performance of more compact models is a recent trend, stimulated by the open-source community. Many advances are driven not just by new foundation models, but by new techniques and resources, such as open-source datasets, for training, tweaking, fine-tuning, or aligning pre-trained models. Recently notable model-agnostic techniques include Low-Rank Adaptation (LoRA), quantization, and Direct Preference Optimization (DPO). These advances reshape the AI landscape by giving startups and amateurs sophisticated AI capabilities they could previously only dream of.
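As a rough illustration of why LoRA makes fine-tuning more accessible, the sketch below shows its core arithmetic in plain Python. In the standard formulation, the pre-trained weight matrix W (d × k) is frozen and only two small factors are trained, B (d × r) and A (r × k), with their product scaled by alpha / r and added to W. The function names and toy matrices here are hypothetical, chosen for readability; real implementations apply the same idea to tensors inside a model's layers.

```python
def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_merge(w, b, a, alpha: float, r: int):
    """Return the adapted weights W + (alpha / r) * (B @ A).

    W (d x k) stays frozen; only B (d x r) and A (r x k) are trained.
    """
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable weights for full fine-tuning (d * k) versus LoRA (r * (d + k))."""
    return d * k, r * (d + k)


# Toy example: a 3 x 3 frozen layer adapted with a rank-1 update.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
B = [[1.0], [0.0], [2.0]]  # d x r
A = [[0.5, 0.0, 1.0]]      # r x k
adapted = lora_merge(W, B, A, alpha=1.0, r=1)

full, lora = trainable_params(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For a 4096 × 4096 layer with rank 8, full fine-tuning trains about 16.8 million weights while LoRA trains 65,536, roughly 0.4% as many. Because B is typically initialized to zero, the adapted model starts out identical to the pre-trained one.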

Customizations and Localized Solutions

Enterprises in 2024 can differentiate themselves through bespoke model development rather than by building wrappers around repackaged services from “Big AI.” With the right data and development framework, existing open-source AI models and tools can now fit almost any real-world scenario: customer support, supply chain, project management, and even complex document analysis.

Open-source models enable organizations to develop powerful custom AI models, trained on their proprietary data and fine-tuned for their specific needs, quickly and without prohibitively expensive infrastructure investments. This especially benefits industries such as banking, insurance, and healthcare, where highly specialized vocabulary and concepts may not have been learned by foundation models in pre-training. As 2024 continues to level the model playing field, competitive advantage will increasingly come from proprietary data pipelines that enable superb fine-tuning.

Increasing Power and Reach of AI Agents

With sophisticated, efficient tools and bounteous market feedback, businesses and big tech companies are primed to expand AI agents’ use cases beyond mere customer-experience chatbots. As AI systems speed up and incorporate new streams and formats of information, they expand the possibilities not just for communication and instruction-following but also for task automation. Today, we are seeing AI agents actually address and complete customer needs: making reservations, planning trips, and connecting to other services.

This advancement, often called agentic AI, combines various techniques, models, and approaches to power a new breed of autonomous agents. These agents can analyze data, set goals, and take action to achieve them, all with minimal human supervision. Agentic AI enables them to handle many tasks that once required human judgment, making them problem solvers suited to dynamic environments. Because they can improve with feedback over time, they are valuable assets for businesses looking to enhance efficiency and adaptability.
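The decide-act-observe cycle behind such agents can be sketched in a few lines of Python. This is a minimal illustration under heavy assumptions: the two tools are hypothetical stand-ins for real reservation services, and `plan_next_step` is a hard-coded rule where a production agent would typically ask an LLM to choose the next tool call from the goal and the results so far. The `max_steps` cap reflects the "minimal supervision" point: even autonomous loops usually need a guardrail against runaway execution.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, object]  # (tool name, tool result)


def check_availability(date: str) -> bool:
    """Hypothetical inventory lookup: every date except 2024-12-25 is open."""
    return date != "2024-12-25"


def make_reservation(date: str) -> str:
    """Hypothetical booking call that returns a confirmation message."""
    return f"Reservation confirmed for {date}"


TOOLS: Dict[str, Callable[[str], object]] = {
    "check_availability": check_availability,
    "make_reservation": make_reservation,
}


def plan_next_step(goal_date: str, history: List[Step]) -> Optional[Tuple[str, str]]:
    """Stub planner: decide the next tool call, or None to stop."""
    if not history:
        return ("check_availability", goal_date)
    last_tool, last_result = history[-1]
    if last_tool == "check_availability" and last_result:
        return ("make_reservation", goal_date)
    return None  # goal reached, or blocked by an unavailable date


def run_agent(goal_date: str, max_steps: int = 5) -> List[Step]:
    """Loop: plan a step, execute the chosen tool, record the observation."""
    history: List[Step] = []
    for _ in range(max_steps):
        step = plan_next_step(goal_date, history)
        if step is None:
            break
        tool_name, arg = step
        history.append((tool_name, TOOLS[tool_name](arg)))
    return history


print(run_agent("2024-12-20"))
```

For "2024-12-20" the agent checks availability and then books; for "2024-12-25" it stops after the failed check instead of attempting the booking.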

AI in Commerce: Essential Use Cases for B2B and B2C

Integrating AI into commerce depends on customers’ trust in the data, the security, the brand, and the people behind the AI. Four use cases are already transforming the customer journey in commerce, enabling brands to create seamless, personalized buying experiences that increase customer loyalty, engagement, retention, and wallet share across B2B and B2C channels.

Use case 1: AI for Modernization and Business Model Expansion

AI-powered tools help optimize and modernize business operations throughout the customer journey. Using machine learning algorithms and big data analytics, AI can uncover patterns, correlations, and trends that might escape human analysts. This information helps businesses make informed decisions, improve operational efficiency, and identify growth opportunities, offering customers seamless, personalized shopping experiences.

Use case 2: AI for Dynamic Product Experience Management (PXM)

The power of AI enables brands to transform their product experience management and user experience by delivering personalized, engaging, and seamless experiences at every commerce touchpoint. These tools can manage content, standardize product information, and drive personalization. With AI, brands can inform, validate, and build the confidence needed for conversion.

Generative AI can automate the creation, classification, analysis, and optimization of product content, generating new content tailored to individual customers: product descriptions, images, videos, and even interactive experiences.

Use case 3: AI for Order Intelligence

With generative AI and automation, businesses can make data-driven decisions that streamline processes across the supply chain, reducing inefficiency and waste. Inadvertent errors can cost as much as $95 billion in annual losses in the US. AI-powered order management systems provide real-time visibility into every aspect of the critical order management workflow, enabling companies to proactively identify potential disruptions and mitigate risks so that orders are delivered as promised.

Use case 4: AI for Payments and Security

Intelligent, AI-driven payments improve efficiency, confidence, and accuracy across payment and security processes. These technologies process, manage, and secure digital transactions, and provide advance warning of potential risks and fraud, for both B2C and B2B customers making purchases in online stores.

Traditional AI optimizes POS systems, automates new payment methods, and facilitates multiple payment solutions across channels. Generative AI creates dynamic payment models for B2B customers, handling their complex transactions with customized invoicing and behavior predictions. Together, these tools support risk management, fraud detection, compliance, and data privacy.

Expect Multimodal AI Expansions

The next wave of advancements focuses not only on enhancing performance within a specific domain but also on multimodal models that can take many types of data as input.

Multimodal AI increases opportunities for seamless interaction with virtual agents. Multimodal AI users can, for example, enquire about an image and receive a natural language answer, or ask aloud for instructions to repair something and receive visual aids alongside step-by-step text instructions! Multimodal AI allows a model to process more diverse data inputs, enriching and expanding information for training and inference. Video, in particular, offers great potential for holistic learning.

Top Generative AI Trends for 2024

Artificial intelligence and data science became front-page news in 2023. The rise of generative AI, of course, drove this dramatic surge in visibility. So, what might happen in the field in 2024 that will keep it on the front page? And how will these trends really affect businesses?

AI for Creativity

A major advantage of generative AI is that it augments human creativity and helps democratize innovation. Humans unquestionably show limitless creativity. However, the need to communicate ideas in written or visual form prevents many people from contributing and publicizing revolutionary new ideas. Generative AI can remove this obstacle. Those with vested interests in traditional ways, or who fear being rendered obsolete, will resist.

However, such resistance will usually be swept aside by novel ideas from both inside and outside the organization. Generative AI’s potential lies not in “replacing” humans but in supporting their individual and collective efforts. Though creatives must watch for over-reliance on AI tools, the benefits of boosting intellectual curiosity and overcoming mental blocks outweigh that risk.

Generative AI for Hyperpersonalization

Generative AI can take personalization to the next level by creating customized experiences tailored to individual customers. By analyzing customer data and queries, it generates personalized product recommendations, offers, and content that are more effective at driving conversions. Personalization is increasingly critical as organizations adopt more subscription-based software-as-a-service (SaaS) models. Global subscription-model billing is expected to double over the next six years, and most consumers say those models help them feel more connected to a business. AI’s potential for hyperpersonalization can deliver higher engagement, increased customer satisfaction, and ultimately, higher sales.

Conversational AI

Today, people don’t just demand or prefer instant communication; they expect it. Conversational AI is at the forefront of breaking down barriers between businesses and their audiences. This class of AI-based tools, including chatbots and virtual assistants, enables seamless, human-like, and personalized exchanges. Natural Language Processing (NLP) translates the user’s words into machine actions, enabling machines to understand and respond to customer inquiries with high accuracy.

According to Allied Market Research, the conversational AI market is projected to reach USD 32.6 billion by 2030, a figure that underscores how critical customer service has become. By incorporating speech recognition, sentiment analysis, and dialogue management, conversational AI can respond more accurately to customer needs.

Generative AI for Scientific Research

Generative AI is an exciting part of the new research and educational landscape, helping scholars save substantial time and effort on tedious parts of the research process. In the past few years, its role in drug discovery has been spectacular. Effective prompt engineering smooths interactions and allows more nuanced and critical responses, while understanding the tool’s limitations keeps expectations in check.

As always, there is the persistent threat of AI hallucinations and biases. The best way to gauge a tool’s limitations is to practice using it and critically review its outputs for hallucinations, biases, and gaps in information.

Humans in the Generative AI Loop

Generative AI’s rise means that human oversight is more critical than ever to protect enterprise assets. Leaders can’t ignore this reality: as much as generative AI applications can augment work or even reduce workloads, they require more human intervention than many enterprise applications built on linear business logic. By prioritizing employee training and education and keeping humans in the loop, organizations improve their chances of realizing generative AI’s promised productivity gains.

But how does the human-in-the-loop relationship work for enterprises? It varies by use case, but in general it requires employees to verify that any content created with generative AI services is accurate, relevant, and ethical.

Open Source Wave in Generative AI

With generative AI models, as with other software, “open-source” means that the source code is publicly available, and anyone is free to examine, modify, and distribute it. Proponents argue that this promotes innovation and collaboration because developers can build on previous work. It also enables customizing and fine-tuning existing tools and models for specific applications. On the security side, open-source models can be audited externally, so independent developers can spot flaws. Ethical AI champions say open-source models are more transparent and understandable than closed-source ones.

Closed-source, or “proprietary,” generative AI is effectively private property that is licensed to users. Owners argue that if everyone knew how it worked, others could re-create it and sell it (or give it away) themselves. There may also be security advantages to choosing closed-source models whose vendors ensure their models don’t leak data or allow unauthorized access. Deciding between open- and closed-source models calls for careful consideration of factors including budget, technical expertise, and the cost and local availability of third-party support.

Gen AI Adhering to Strong Regulatory Guidelines

The proliferation of generative AI comes with risks. Key to these are how models and systems are developed and how the technology is used. People are concerned about a potential lack of transparency in how systems function, the data used to train them, issues of bias and fairness, potential infringements on intellectual property, privacy violations, third-party risk, and security concerns.

Add to these error-riddled or manipulated outputs and harmful or malicious content, and fears reach fever pitch. No wonder regulators are seeking to mitigate potential harms and establish legal certainty for companies engaged in developing or using generative AI. The goal is to establish harmonized international regulatory standards that would stimulate international trade and data transfers. Notably, many in the generative AI and software development community are themselves calling for prompt regulation of the technology’s development.

Bring Your Own AI

Artificial intelligence (AI) offers massive opportunities for organizations: boosting productivity, reducing operational costs, and finding ways to deliver better customer service while spurring improved business outcomes. Fear, uncertainty, and doubt persist, though, about how people and infrastructure can benefit while avoiding poor implementation, security risks, or unethical use. Depending on an organization’s LLM strategy, a “bring your own AI” (BYOAI) or “bring your own LLM” approach can make AI deployment a more controllable opportunity.

AI Augmentation Apps and Services

Two major approaches shape technological progress: augmentation and artificiality. Augmentation enriches existing capabilities by integrating improved or innovative technology; artificiality creates new possibilities, superseding previous limitations. Both approaches promise to improve human work and life, but both carry risks if misused. As these emerging technologies are developed and released, leaders must ensure that they benefit people both in and out of the workplace.

AI Trends in Ethical Considerations and Regulatory Landscape

Elevated multimodal capabilities and lowered barriers to entry also open up new doors for abuse: deepfakes, privacy issues, bias, and evasion of CAPTCHA safeguards may become increasingly easy for bad actors.

The majority of groundbreaking AI development is happening in America, where legislation of AI in the private sector will require action from Congress—in an election year. However, the administration has secured voluntary commitments from prominent business leaders and AI developers to adhere to certain guardrails for trust and security.

The EU AI Act, on the other hand, sets a comprehensive, risk-based regulatory framework for AI, with specific provisions for general-purpose and generative AI models. It imposes strict obligations on high-risk systems, including transparency requirements and accountability measures.

Noncompliance with the AI Act can lead to significant penalties, highlighting the EU’s proactive stance compared with the absence of comprehensive federal AI legislation in the U.S. Observers also compare the AI Act with GDPR for its rigorous approach to data protection and privacy.

AI Regulation, Copyright, and Ethical AI Concerns

The role of copyrighted material in training AI models used for content generation—from language models to image generators and video models—remains a contested issue with significant ramifications for the evolving landscape of AI regulation.

The outcome of the high-profile lawsuit filed by the New York Times against OpenAI may significantly affect the direction taken by AI legislation.

Shadow AI (and Corporate AI Policies)

For businesses, this escalating potential for legal, regulatory, economic, or reputational consequences is compounded by how popular and accessible generative AI tools have become. Organizations must have a careful, coherent, and clearly articulated corporate policy around generative AI, and must guard against shadow AI.

Shadow AI, an offshoot of “shadow IT” sometimes also called “BYOAI,” is most common when impatient employees racing for solutions (or simply curious to explore new tech faster) use generative AI in the workplace without going through IT for approval or oversight.

In one Ernst & Young study, 90% of respondents said they use AI at work. Such widespread use underscores how the risks of unsanctioned generative AI grow along with its capabilities and accessibility.

Future of Artificial Intelligence

As we venture into the evolving landscape of artificial intelligence, this section examines pivotal developments that could dramatically reshape the future of AI technologies and applications.

How Will Quantum Computing Impact Generative AI?

Quantum computing is an emerging field of computer science that uses quantum mechanics to perform certain complex calculations at unprecedented speed and scale. It could transform AI and machine learning, potentially reshaping the landscape of AI-generated content and creativity.

Unlike classical computers, which process information in binary bits (0s and 1s), quantum computers use quantum bits, or qubits. Through superposition, a qubit can exist in a combination of 0 and 1 at the same time, and through entanglement, the states of multiple qubits can become correlated so that they cannot be described independently.
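To illustrate superposition and entanglement (not quantum speedups themselves), the sketch below simulates two qubits classically in plain Python. The state is a list of four amplitudes for |00⟩, |01⟩, |10⟩, and |11⟩, and measurement probabilities are the squared magnitudes of those amplitudes. A Hadamard gate on the first qubit creates a superposition, and a CNOT gate then entangles the pair into a Bell state. The gate functions are hand-written for this two-qubit case and are not taken from any quantum SDK.

```python
import math

SQRT_HALF = 1 / math.sqrt(2)


def hadamard_first(state):
    """Hadamard on qubit 1 of a 2-qubit state [a00, a01, a10, a11]."""
    a00, a01, a10, a11 = state
    return [
        SQRT_HALF * (a00 + a10),
        SQRT_HALF * (a01 + a11),
        SQRT_HALF * (a00 - a10),
        SQRT_HALF * (a01 - a11),
    ]


def cnot(state):
    """CNOT with qubit 1 as control: flips qubit 2 when qubit 1 is 1 (swaps a10 and a11)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Measurement probabilities: squared magnitude of each (possibly complex) amplitude."""
    return {label: abs(amp) ** 2 for label, amp in zip(["00", "01", "10", "11"], state)}


start = [1 + 0j, 0j, 0j, 0j]       # both qubits in |0>
superposed = hadamard_first(start)  # (|00> + |10>) / sqrt(2)
bell = cnot(superposed)             # (|00> + |11>) / sqrt(2), an entangled state
for label, p in probabilities(bell).items():
    print(label, round(p, 3))
```

The Bell state yields 00 or 11 with equal probability and never 01 or 10, so measuring one qubit fixes the other. Note also that a classical simulation needs 2^n amplitudes for n qubits, which is one reason large quantum systems are impractical to simulate on conventional hardware.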

Quantum computing could dramatically accelerate the training and optimization of AI models. Traditional deep learning algorithms require massive computational resources and time to train complex neural networks; quantum computing may speed this process by exploring huge solution spaces far more efficiently.

With quantum acceleration, generative AI models could explore a much larger solution space and capture more complex patterns and relationships within data, allowing AI algorithms to generate more sophisticated, creative outputs across various domains. At this time, though, quantum computing hardware is still at an early stage, and further advances will be needed for it to reach its full potential.

As quantum-powered AI systems move to the forefront, clear regulatory frameworks and ethical guidelines must be in place to control potential risks, threats, and biases. New standards of ethical stewardship will be essential.

AI Avatars Are the Future of AI Applications

AI avatars are an emerging AI trend that is expected to grow rapidly in the near future. They are poised to revolutionize digital interactions by bridging the gap between traditional, text-based chatbots and human-like communication.

Unlike chatbots that rely solely on text and predefined scripts, AI avatars can engage in fluid, dynamic dialogues using facial expressions, gestures, and tone of voice. This capability allows for more personalized and empathetic interactions, making users feel understood and valued.

In business environments, AI avatars can greet visitors, provide detailed information, and streamline internal processes, enhancing both customer experience and operational efficiency. As these digital ambassadors continue to evolve, their ability to adapt to specific customer needs and preferences will further solidify their role as indispensable tools in the future of AI applications.

Conclusion

As AI reaches a pivotal year, adaptation is crucial for businesses. Harnessing generative AI’s potential requires responsible scaling and attention to ethical considerations. Advanced tools like enterprise AI Copilot and AiseraGPT, our fine-tuned large language models (LLMs), empower enterprises while addressing ethical aspects through the TRAPS (trusted, responsible, auditable, private & secure) framework. Discover the future of AI today by booking a custom Gen AI demo to explore its capabilities!