SLM Agents in Agentic AI Systems

The rise of agentic AI and agentic applications is redefining productivity by enabling autonomous systems to carry out specialized tasks with greater efficiency. As AI evolves from passive applications to proactive agents capable of planning, reasoning, and tool usage, the shortcomings of earlier approaches become more visible.

Large Language Models (LLMs) pioneered this paradigm, powering AI agents with broad, near-human general-purpose conversational abilities and versatility across a wide range of tasks. However, their infrastructure demands often along with infrastructure costs and high energy consumption, make them too costly and complex for many specialized, high-frequency workflows.

This is where Small Language Models (SLMs) emerge as a practical and powerful alternative. Purpose-built for efficiency, SLMs are designed to excel in targeted roles such as API orchestration, data formatting, and structured task execution – delivering high performance without the computational overhead of their larger counterparts.

Unlike frontier models that require massive resources and a lot of computing power, SLMs offer lower latency, lower cost, and rapid fine-tuning for domain-specific needs. Their growing adoption signals a fundamental shift: moving away from equating capability with size, and toward optimizing for the best – and most economical – solution for each agentic task.

In this article, we will explore why small language models are the future of agentic AI systems.

Benefits of Small Language Models in Agentic System

-

Latency, cost, and energy efficiency

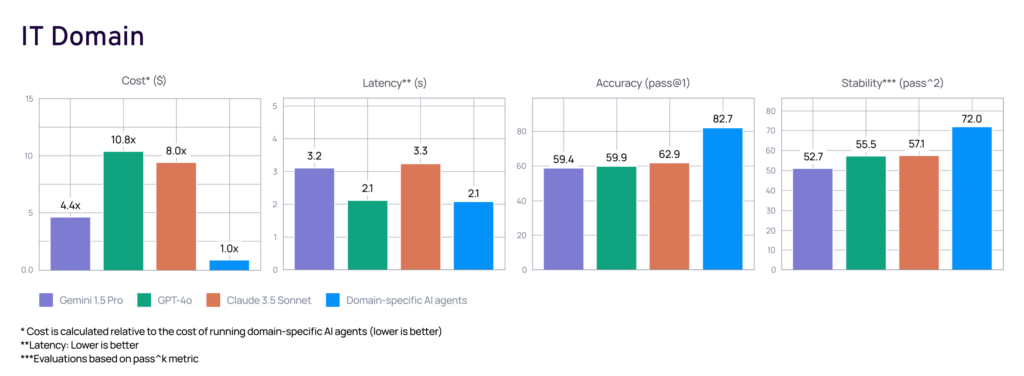

Smaller parameter counts result in faster inference, lower GPU/CPU memory pressure, and significantly reduced serving costs, which are critical for real-time agents and edge device deployment. In present-day AI systems, optimizing AI infrastructure and computing power is essential for efficient and cost-effective deployment, especially as demand for scalable agentic AI solutions grows.

-

Predictability and schema adherence

Agents frequently need deterministic tool-calling: function signatures, JSON schemas, SQL templates. SLMs fine-tuned on narrow schemas often emit cleaner, more constrained outputs, reducing brittle post-processing and retries. The broader ecosystem’s tool-calling advances (e.g., Llama 3.1’s native function calling) illustrate how smaller models can be made reliably schema-obedient for agent orchestration.

-

Domain specialization

Because they’re cheaper to (re)train, SLMs can be rapidly adapted to verticals and specific tasks – finance controls, ITSM runbooks, or device-level assistants – without carrying the cost of keeping a general-purpose model hot. SLMs are sufficiently powerful to perform specialized tasks repetitively, especially in domains with little variation, making them ideal for focused applications where efficiency and reliability are critical.

Technical reports for families like Microsoft’s Phi-3 and Google’s Gemma 2 show how compact models, trained with careful data curation and post-training, can rival foundation models on practical tasks and specialized tasks repetitively while remaining deployable on phones or single GPUs.

-

Heterogeneous architectures

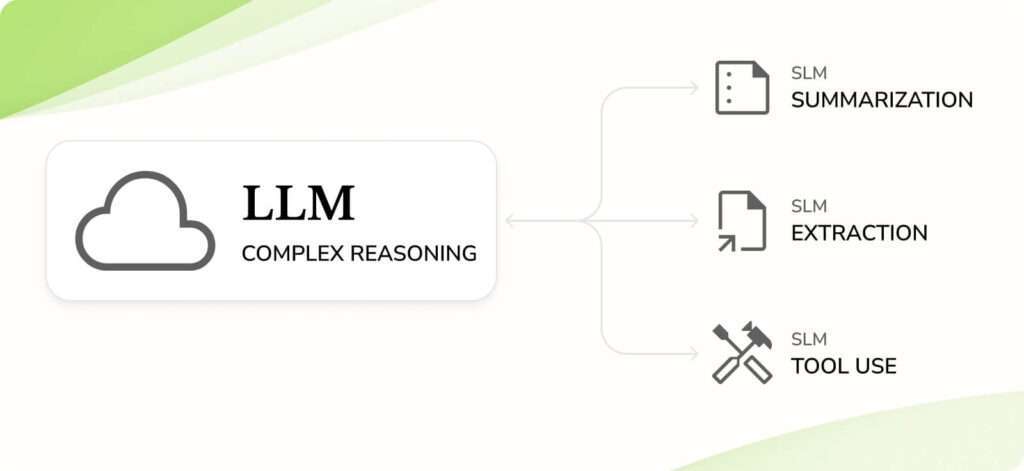

The pragmatic pattern is to use heterogeneous agentic systems that leverage multiple different models: SLM-first, LLM-as-fallback. This should be the natural choice for most of the enterprises. In these systems, agents invoke multiple models – such as small language models (SLMs) for routine steps (classification, routing, tool wiring – and escalate to large language models only when complexity spikes (ambiguous inputs, novel reasoning), enabling efficient handling of many invocations and optimizing invocations in agentic systems. Nvidia explicitly recommends such heterogeneous agent pipelines to balance cost and capability.

This approach is the natural choice for agentic AI, as it integrates multiple different models within a single system, providing flexibility, efficiency, and adaptability.

-

Accessibility and sovereignty

Compact models enable on-prem or on-device deployment for privacy, compliance, or sovereignty mandates. The broader trend toward capable “small”/“open-weight” models (across industry and research) makes it feasible to keep data local while still delivering strong agent performance.

Here is a quick comparison between agents built on LLMs vs those built on SLMs

| Dimensions | Agents on LLM | Agents on SLM |

|---|---|---|

| Performance Scope | Broad, general-purpose reasoning across open-ended tasks and diverse domains | Strong for narrow, repetitive, and structured tasks; weaker for open-ended reasoning |

| Latency | Higher due to large parameter counts and heavy compute needs | Lower latency, faster responses, suitable for real-time and edge scenarios |

| Cost of Deployment | Expensive to serve and maintain; requires GPUs/TPUs and cloud-scale infrastructure | Significantly cheaper to run; feasible on single GPUs, CPUs, or even mobile/edge devices |

| Predictability / Schema Adherence | More prone to “hallucination” or drifting outside the schema in tool calls | More predictable and controllable, especially after fine-tuning for schema compliance |

| Domain Specialization | Adaptation is costly; fine-tuning requires significant data and computing | Easy to fine-tune for specific domains (IT, HR, finance, IoT) with smaller datasets |

| Architecture Fit | Suited for monolithic or fallback roles; excels at complex reasoning and creativity | Suited for modular/multi-agent systems where multiple small specialists collaborate |

| Best Use Cases | Exploratory reasoning, novel problem-solving, open-ended dialogue | Task routing, API orchestration, classification, structured workflows, edge deployments |

Application of Small Language Models in Agentic AI Systems

Where are SLMs already useful (or ideally suited) in agentic systems? In many common architectures for agentic AI, small models are integrated to handle specialized tasks repetitively. In these settings, small language models perform well by providing reliable, cost-effective solutions, especially when compared to larger models. Here are several kinds of tasks and deployment settings:

- Routine / Repetitive Subtasks: For example, agents that parse user input, classify or tag, perform simple summarization, or check for errors. These subtasks are narrow and stable, and SLMs can excel here.

- Tool Orchestration & Interface Wrapping: Agents that need to translate user intents into structured tool calls (APIs, queries, commands) benefit from smaller models that reliably produce structured output with minimal noise. Using an SLM for schema enforcement or for mapping natural language to API calls is more predictable.

- On-device / Edge Scenarios: Smart assistants on phones, IoT devices, embedded controllers, etc., where bandwidth, power, and memory are limited. SLMs or distilled models make deployment feasible in such constrained settings.

- Multi-agent Systems: When you have multi-agent systems, each responsible for a subtask, SLMs can make these agents lightweight so the whole system is more efficient. Also useful in hierarchical or distributed agent workflows.

- Hybrid & Heterogeneous Architectures: Systems that combine small and large models: use SLMs for core predictable tasks, reserve LLMs for fallback, creative reasoning, or when things go out of scope for SLMs. This approach balances cost and capability. The Nvidia work explicitly recommends this structure.

- Domain-specific Agents / Enterprises: Domain-specific agents tailored to particular industries (healthcare, legal, finance) where the vocabulary, rules, and risk profile are constrained and known. SLMs can be trained or fine‐tuned to operate safely and reliably in those domains.

Academic Research of SLMs for AI Agents

Nvidia’s SLM position (2025)

Belcak et al. synthesize three pillars: (i) observed SLM capability on agent-like subtasks; (ii) agent architecture realities (tool use, constrained outputs, repetition); and (iii) the economics of deployment. They also sketch an LLM-to-SLM conversion algorithm, identifying which agent components can be safely down-scaled and how to structure escalation to larger models.

Compact models with outsized utility

Microsoft’s Phi-3 shows that a 3.8B model trained on a carefully filtered, curriculum-style corpus achieves strong scores on general benchmarks while being small enough for mobile inference. This is evidence that data quality and post-training can narrow the gap with much larger systems for many agent subtasks. Gemma 2 extends this: practical-size models paired with robust safety and post-training recipes reach competitive performance within tight resource envelopes.

Evaluation as agents, not just chat models

AgentBench (ICLR 2024) evaluates models in interactive environments – web navigation, tool use, and code tasks – highlighting factors like schema discipline, error recovery, and decision-making under partial information. AI agent evaluation results across environments suggest that smart training and scaffolding can let smaller models deliver competitive outcomes on well-scoped agent tasks, especially when paired with retrieval, tools, and verifiers

Ecosystem momentum

Beyond academic work, industry releases continue to push “small-but-capable” AI reasoning models and agent tools. Even as mega-models grab headlines, the emergence of efficient, open-weight options and sovereign initiatives underscores a shift toward right-sized models optimized for specific agent roles.

Future of Agentic AI with Small Language Models

The next era of agentic AI will not be defined by ever-bigger models, but by the intelligent deployment of Small Language Models as the workhorses of everyday automation. SLMs are emerging as the default engines because they are sufficiently powerful for tasks that demand speed, predictability, and efficiency – routing, tool orchestration, schema enforcement, and domain-specific workflows. Their compact design enables real-time responses, on-device deployment, and rapid fine-tuning, making them uniquely suited to the operational realities of enterprises.

Large models will remain indispensable, but increasingly as escalation layers – reserved for ambiguous or novel cases where deep reasoning and broad generality are essential. This hybrid paradigm ensures that organizations gain the best of both worlds: systems that are fast, affordable, and sustainable, without losing access to advanced reasoning when it is truly needed.