What is AI Agent Evaluation?

AI agent evaluation is the systematic process of assessing the performance, reliability, and safety of Agentic AI. Unlike standard LLM testing, agent evaluation focuses on decision-making capabilities, including how accurately an agent plans workflows, executes tasks, and interacts with enterprise environments.

Effective evaluation is critical because autonomous agents operate without constant human oversight. To ensure they serve organizational goals, they must be tested against strict metrics for efficiency, ethical alignment, and operational safety.

The CLASSic Evaluation Framework

To address the complexity of agentic workflows, we utilize the CLASSic Framework, a comprehensive benchmarking standard for enterprise AI. This framework evaluates agents across five core dimensions:

- Cost: Measuring token usage and inference expenses per task.

- Latency: Tracking the speed of response and action execution.

- Accuracy: Verifying the correctness of the agent’s logic and final output.

- Security: Ensuring adherence to data privacy and access controls.

- Stability: Testing for consistent performance over time and across variable inputs.

Our vision for CLASSic is to give decision-makers a tangible benchmark for ensuring that emerging Agentic AI solutions deliver maximum ROI.

An Introduction to the Agent Evaluation Process

In today’s fast-evolving technological landscape, artificial intelligence (AI) agents have become essential for enterprises seeking to enhance efficiency, customer satisfaction, and decision-making. Unlike basic automation systems, AI agents operate autonomously or semi-autonomously (i.e., “human-in-the-loop”), allowing them to perform complex enterprise tasks.

Current agentic AI systems rely on many submodules such as Planning, Reflection, Execution, and Learning/Reinforcement. Given these broad capabilities, AI and large language model (LLM) evaluation requires an equally extensive framework covering the metrics that matter to enterprises, and this is where structured agent development plays a crucial role.

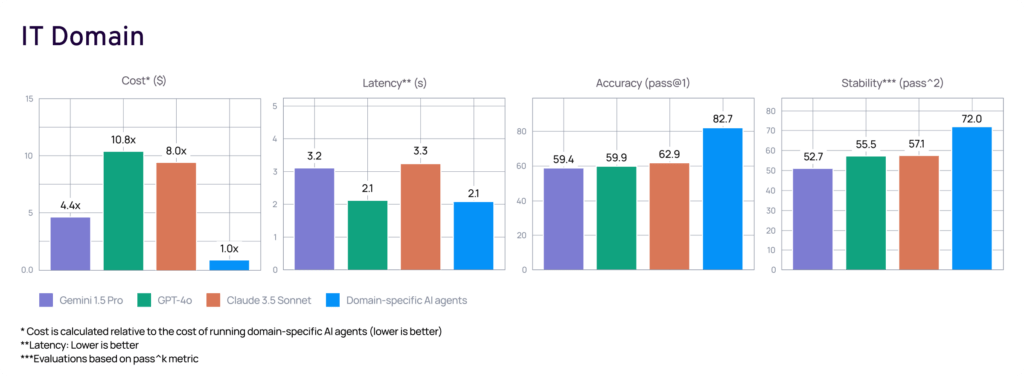

The chart shows real agent evaluation results in which domain-specific AI agents outperform Claude, GPT-4o, and Gemini in IT operations. Download the full benchmark report.

Why a Benchmarking Framework is Essential

Traditional agent benchmarks focus narrowly on accuracy metrics such as end-to-end task completion rates. This, however, misses the broader picture of enterprise needs such as affordability, security, reliability, and stability. Across hundreds of customers where Aisera’s AI agents are deployed, we have seen a very strong need to ensure that the agent performs at the highest level of security.

Customers such as Carta and Dave, who operate in the financial and banking domain, exemplify the paramount importance of such high standards. Ensuring unparalleled reliability and stability is also a primary objective for many critical customers like Gap and NJ Transit. This is reflected in the evaluation results we obtain from our comprehensive benchmarking framework.

Because accuracy-only benchmarks leave these dimensions unmeasured, it is difficult for enterprises to evaluate whether AI agents can execute in sensitive and demanding environments.

As agent frameworks grow more sophisticated, they will handle not only simple conversational tasks but also complex business processes. Evaluating agents on multiple dimensions, including how they handle real-time adjustments and learn, is essential for unlocking their full value for enterprises.

Drawing from our conversations with customers, our proposed CLASSic framework aims to address these needs by providing a holistic view of Agentic AI, covering the most important facets of their performance, and establishing a first-of-its-kind benchmark for enterprise agents.

Agent Performance Metrics (CLASSic Framework Approach)

Agent metrics are key to evaluating agent performance. These metrics can be broken down into three main areas: accuracy, response time, and cost. Accuracy metrics measure how often the agent gives correct answers and does what it is supposed to do. For example, pass^k measures an agent's reliability as the probability that it completes the same task successfully in all k independent attempts.
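As a rough illustration of pass^k, the sketch below estimates it from repeated trials of each task. It assumes the usual reading of the metric (all k independent attempts must succeed) and uses a common combinatorial estimator, C(c, k) / C(n, k) for c successes in n trials. The function names and the sample outcomes are hypothetical and are not taken from the benchmark report.

```python
from math import comb


def pass_hat_k(num_trials: int, num_successes: int, k: int) -> float:
    """Estimate the chance that k independent attempts at one task all succeed."""
    if not 0 < k <= num_trials:
        raise ValueError("k must be between 1 and num_trials")
    if not 0 <= num_successes <= num_trials:
        raise ValueError("num_successes must be between 0 and num_trials")
    # Fraction of size-k subsets of the trials that contain only successes.
    return comb(num_successes, k) / comb(num_trials, k)


def mean_pass_hat_k(results: dict[str, list[bool]], k: int) -> float:
    """Average the per-task estimate over every task in the evaluation set."""
    if not results:
        raise ValueError("results must contain at least one task")
    scores = [pass_hat_k(len(t), sum(t), k) for t in results.values()]
    return sum(scores) / len(scores)


# Hypothetical outcomes: four trials for each of two made-up tasks.
trials = {
    "reset_password": [True, True, True, True],
    "provision_laptop": [True, False, True, True],
}
print(mean_pass_hat_k(trials, k=1))  # 0.875
print(mean_pass_hat_k(trials, k=2))  # 0.75
```

The score drops as k grows because a single failed attempt disqualifies every subset that contains it, which is why pass^k rewards consistency rather than occasional success.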

Response time metrics measure the agent’s speed in responding to user queries. Faster response times mean a better user experience and greater operational efficiency.

Cost metrics look at the total cost of running the agent, including token costs and other expenses. By tracking these metrics, developers can see where to improve and optimize their agents for better performance. For example, knowing the token cost per task helps keep overall operational costs under control.

By looking at these metrics, developers can see what the agent can do and where the quality issues are, and then make adjustments to improve the agent overall.

The CLASSic Framework Explained

1. Cost: Optimizing Resource Utilization

An AI agent, especially when operating autonomously, comes with varying costs depending on the complexity of its planning and execution processes. Thus, it is crucial to consider the Total Cost of Ownership (TCO), including infrastructure, operational expenses, and scalability.

- Planning & Execution Costs: An intelligent agent that decomposes tasks into sub-tasks (e.g., via Chain of Thought) may incur higher planning costs but can optimize execution by using specialized sub-agents.

- Improvement Costs: As agents iterate and improve over time, there are costs associated with memory management and model retraining.

Key Cost Metrics:

- Infrastructure and Operational Costs: How much does it cost to maintain the agent, including hardware and human resources?

- Scalability Costs: The cost of scaling agents for more complex or larger workloads.

- Cost per Task: The overall cost to the enterprise per completed task or autonomous execution.
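A minimal sketch of the cost-per-task metric, assuming cost is dominated by token usage plus an optional fixed overhead. The `TaskRun` record, the per-1,000-token prices, and the sample runs are all hypothetical placeholders; real prices depend on the model and provider.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    input_tokens: int
    output_tokens: int
    completed: bool  # True if the task finished with a correct outcome


def cost_per_completed_task(
    runs: list[TaskRun],
    input_price_per_1k: float,
    output_price_per_1k: float,
    fixed_overhead: float = 0.0,
) -> float:
    """Total spend (tokens plus overhead) divided by the number of completed tasks."""
    token_cost = sum(
        r.input_tokens / 1000 * input_price_per_1k
        + r.output_tokens / 1000 * output_price_per_1k
        for r in runs
    )
    completed = sum(r.completed for r in runs)
    if completed == 0:
        raise ValueError("no completed tasks to attribute cost to")
    return (token_cost + fixed_overhead) / completed


# Hypothetical runs and prices, for illustration only.
runs = [
    TaskRun(input_tokens=2000, output_tokens=500, completed=True),
    TaskRun(input_tokens=4000, output_tokens=1000, completed=True),
    TaskRun(input_tokens=3000, output_tokens=500, completed=False),
]
cost = cost_per_completed_task(runs, input_price_per_1k=0.01, output_price_per_1k=0.03)
print(f"{cost:.3f}")  # 0.075
```

Dividing by completed tasks rather than attempted tasks is a deliberate choice in this sketch: failed runs still consume tokens, so they raise the effective price of each successful outcome.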

2. Latency: Ensuring Timely Responses

For AI agents, latency isn’t just about immediate responses; it’s about how quickly an agent can plan, execute, reflect, and iterate when completing complex workflows. In real-world scenarios, agents must not only provide answers but also adjust their execution based on real-time data and course-correct if needed.

- Planning & Execution Latency: The time it takes for an agent to break down tasks and execute them using tools, sub-agents, or available data sources.

- Reflection/Iteration Time: The time the AI agent takes to review its execution trajectory and correct mistakes.

Key Latency Metrics:

- Time to First Response: The time it takes for an agent to start planning or executing a task.

- Reflection Latency: The time spent re-evaluating steps or refining outputs after receiving feedback or encountering new information.

- Throughput: The number of tasks an agent can complete in a given time frame.
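The three latency metrics above could be derived from per-task timestamps along the following lines. This is only a sketch: the `TaskTiming` fields are assumed names for values an agent's trace would need to record, and the sample timings are invented.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskTiming:
    submitted_at: float        # seconds since the start of the evaluation run
    first_action_at: float     # when the agent began planning or executing
    finished_at: float         # when the task ended
    reflection_seconds: float  # total time spent re-evaluating or refining


def median_time_to_first_response(timings: list[TaskTiming]) -> float:
    return median(t.first_action_at - t.submitted_at for t in timings)


def mean_reflection_latency(timings: list[TaskTiming]) -> float:
    return mean(t.reflection_seconds for t in timings)


def throughput_per_minute(timings: list[TaskTiming]) -> float:
    """Completed tasks per minute over the window spanned by the run."""
    window = max(t.finished_at for t in timings) - min(t.submitted_at for t in timings)
    if window <= 0:
        raise ValueError("timings must span a positive time window")
    return len(timings) / window * 60


# Hypothetical timings for three tasks.
timings = [
    TaskTiming(0.0, 1.5, 20.0, 2.0),
    TaskTiming(5.0, 5.5, 30.0, 0.0),
    TaskTiming(10.0, 12.0, 60.0, 4.0),
]
print(median_time_to_first_response(timings))  # 1.5
print(mean_reflection_latency(timings))        # 2.0
print(throughput_per_minute(timings))          # 3.0
```

The median is used for time to first response because a few slow tasks can skew a mean; percentiles such as p95 are another reasonable choice.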

3. Accuracy: Delivering Reliable Outputs

While accuracy is a traditional metric, agents must go beyond merely providing correct answers to user queries—they must accurately plan, adapt, and execute tasks. Their ability to learn and improve over time also plays a critical role in maintaining accuracy.

- Reflection and Iteration: Agents must assess their own outputs and improve upon them, leading to iterative gains in accuracy.

- Task Completion Rate: This measures whether the agent can plan and complete tasks autonomously.

Key Accuracy Metrics:

- Task Completion Rate: The ability to complete assigned tasks with correct outcomes.

- Step-level Accuracy: The proportion of actions the agent takes correctly within a larger workflow.

- Precision and Recall: Assessing whether the agent can provide both relevant and comprehensive answers in task execution.

- Reflection Accuracy: How well the agent improves after iterating on its past actions.
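To make these accuracy metrics concrete, here is a small sketch of task completion rate, step-level accuracy, and precision and recall. It assumes each workflow has a reference sequence of steps to compare against position by position, which is a simplification; the step names and document IDs are made up.

```python
def task_completion_rate(outcomes: list[bool]) -> float:
    """Share of tasks that finished with a correct outcome."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    return sum(outcomes) / len(outcomes)


def step_accuracy(expected_steps: list[str], actual_steps: list[str]) -> float:
    """Share of positions where the agent took the expected action.

    Missing or extra steps count as errors because the denominator is the
    longer of the two sequences.
    """
    longest = max(len(expected_steps), len(actual_steps))
    if longest == 0:
        raise ValueError("at least one sequence must contain a step")
    matches = sum(e == a for e, a in zip(expected_steps, actual_steps))
    return matches / longest


def precision_recall(predicted: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision: how much of the output is relevant. Recall: how much relevant content was found."""
    true_positives = len(predicted & relevant)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical workflow and retrieval results.
expected = ["lookup_user", "verify_identity", "reset_password", "notify_user"]
actual = ["lookup_user", "reset_password", "reset_password", "notify_user"]
print(task_completion_rate([True, True, False, True]))  # 0.75
print(step_accuracy(expected, actual))                   # 0.75

p, r = precision_recall({"kb-1", "kb-2", "kb-7"}, {"kb-1", "kb-2", "kb-3", "kb-4"})
print(f"{p:.2f} {r:.2f}")  # 0.67 0.50
```

Reflection accuracy could be measured with the same functions by scoring an agent's first attempt and its revised attempt and comparing the two.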

4. Security: Protecting Sensitive Information

Security is paramount because AI agents often have access to sensitive data, especially in mission-critical systems. Security must account for human-agent interaction, ensuring that data exchanged during such interactions is protected. It must also mitigate vulnerabilities of two kinds: traditional data privacy issues, and concerns unique to autonomous systems, such as data leakage during task execution or exploitation of vulnerabilities in sub-agent systems.

Security measures must also ensure compliance with company policies to maintain the reliability and performance of the deployed systems.

- Tool & Data Security: Agents may access external tools or resources, so these interactions must be secure.

- Model Integrity: Ensuring the agent’s planning and execution models are protected from malicious inputs or adversarial attacks.

Key Security Metrics:

- Threat Detection Rate: How well agents identify and respond to malicious user input during execution.

- Session Management: How securely agents manage data over multiple interactions or sessions.
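One way to quantify threat detection rate is to run the agent against a labeled set of malicious and benign inputs and count how often it flags each kind. The sketch below assumes such a labeled test set exists; the `SecurityCase` record is a hypothetical structure, and the false positive rate is an extra companion metric not named in the list above.

```python
from dataclasses import dataclass


@dataclass
class SecurityCase:
    is_malicious: bool   # ground-truth label from the test set
    agent_flagged: bool  # whether the agent refused or flagged the input


def threat_detection_rate(cases: list[SecurityCase]) -> float:
    """Share of malicious inputs that the agent flagged."""
    malicious = [c for c in cases if c.is_malicious]
    if not malicious:
        raise ValueError("test set contains no malicious cases")
    return sum(c.agent_flagged for c in malicious) / len(malicious)


def false_positive_rate(cases: list[SecurityCase]) -> float:
    """Share of benign inputs that the agent wrongly flagged."""
    benign = [c for c in cases if not c.is_malicious]
    if not benign:
        raise ValueError("test set contains no benign cases")
    return sum(c.agent_flagged for c in benign) / len(benign)


# Hypothetical results: 4 malicious inputs (3 caught), 4 benign inputs (1 wrongly flagged).
cases = (
    [SecurityCase(True, True)] * 3
    + [SecurityCase(True, False)]
    + [SecurityCase(False, False)] * 3
    + [SecurityCase(False, True)]
)
print(threat_detection_rate(cases))  # 0.75
print(false_positive_rate(cases))    # 0.25
```

Tracking both numbers matters because an agent that flags everything would reach a perfect detection rate while being unusable for legitimate requests.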

5. Stability: Maintaining Consistent Performance

Stability is crucial for AI agents to ensure they can handle dynamic workloads and maintain performance even under heavy usage or changing conditions. Stable agents should be robust to user input and context variations so that the customer experience is well-controlled. They should also improve over time as they complete workflows.

- Consistency Across Executions: Stability measures how consistently an agent performs similar tasks over time. Agents with more sophisticated architectures tend to have higher stability, keeping performance consistent across complex and dynamic environments.

- Error Rates in Execution: How often the agent’s planning or execution fails to produce the desired results.

Key Stability Metrics:

- Response Consistency: The uniformity of the agent’s performance over time.

- Execution Error Rate: The proportion of task executions or reflection steps that fail.
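As a sketch of how the two stability metrics might be computed, the code below treats response consistency as the share of repeated runs that agree with the most common outcome, and execution error rate as the share of runs whose status is not "ok". Both definitions, the status strings, and the sample data are assumptions made for illustration.

```python
from collections import Counter


def response_consistency(outputs: list[str]) -> float:
    """Share of repeated runs that produced the most common outcome."""
    if not outputs:
        raise ValueError("outputs must not be empty")
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / len(outputs)


def execution_error_rate(statuses: list[str]) -> float:
    """Share of runs that ended in any status other than "ok"."""
    if not statuses:
        raise ValueError("statuses must not be empty")
    return sum(s != "ok" for s in statuses) / len(statuses)


# Hypothetical results from repeating the same request four times,
# and from five separate task executions.
outputs = ["ticket_closed", "ticket_closed", "ticket_escalated", "ticket_closed"]
statuses = ["ok", "ok", "error", "ok", "timeout"]
print(response_consistency(outputs))   # 0.75
print(execution_error_rate(statuses))  # 0.4
```

Comparing final outcomes rather than raw text is a design choice here: two differently worded responses that take the same action would otherwise count as inconsistent.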

A Comparative Analysis of AI Agents

When evaluating enterprise AI agents using the CLASSic framework, it’s essential to examine multiple evaluation metrics to assess agent performance fully. AI agents vary significantly in their ability to break down tasks, use tools efficiently, and improve over time.

1. Cost: More complex agents tend to have higher initial setup and operational costs (e.g., due to more involved task planning and self-reflection) but may optimize long-term resource usage.

2. Latency: Agents that are able to quickly output the next action in a workflow can significantly reduce the latency of completing complex workflows.

3. Accuracy: Performance depends not only on the immediate next action predicted by an agent but also on how well the agent reflects on past actions and improves future performance.

4. Security: Agents using external tools or accessing sensitive data must have robust security protocols in place, especially during task execution.

5. Stability: Consistent task performance across different workloads and reliable behavior during reflective learning cycles ensure better stability.
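A side-by-side comparison across the five dimensions could be organized as in the sketch below. It assumes one representative metric per dimension, and the `ClassicScore` fields, agent names, and numbers are all invented for illustration; they are not results from the benchmark report.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ClassicScore:
    cost_per_task_usd: float      # Cost
    median_latency_s: float       # Latency
    task_completion_rate: float   # Accuracy
    threat_detection_rate: float  # Security
    execution_error_rate: float   # Stability


# For these metrics a smaller value is the better result.
LOWER_IS_BETTER = {"cost_per_task_usd", "median_latency_s", "execution_error_rate"}


def best_per_dimension(agents: dict[str, ClassicScore]) -> dict[str, str]:
    """Return the name of the best-scoring agent for each metric."""
    if not agents:
        raise ValueError("agents must not be empty")
    winners = {}
    for field in fields(ClassicScore):
        pick = min if field.name in LOWER_IS_BETTER else max
        winners[field.name] = pick(
            agents, key=lambda name: getattr(agents[name], field.name)
        )
    return winners


# Made-up scores for two hypothetical agents.
agents = {
    "agent_a": ClassicScore(0.08, 12.0, 0.91, 0.97, 0.03),
    "agent_b": ClassicScore(0.03, 4.0, 0.84, 0.90, 0.06),
}
for metric, winner in best_per_dimension(agents).items():
    print(f"{metric}: {winner}")
```

With these sample numbers, `agent_b` wins on cost and latency while `agent_a` wins on accuracy, security, and stability, which illustrates why a single aggregate score can hide the trade-offs an enterprise actually has to weigh.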

Conclusion

This analysis underscores the importance of evaluating AI agents across multiple dimensions using the CLASSic framework. Agents capable of autonomous planning, execution, and reflection outperform traditional models when tailored to specific business needs. By applying diligent evaluations, organizations can select AI agents that align with their operational priorities, ensuring cost-efficiency, security, and stability while driving better business outcomes.

Schedule a custom AI demo or download the AI agent benchmark report today and discover how Aisera’s Agentic AI can transform your enterprise.