What is Agent2Agent Protocol (A2A)?

When people collaborate, the results are almost always better. Why shouldn’t the same apply to AI agents? That is exactly what the Agent2Agent (A2A) protocol is about: standardizing agent communication. Think of A2A as a common language that enables different AI agents, irrespective of their source or build, to communicate and collaborate with one another.

Just as people from different countries and walks of life use English as a common language to work together, A2A gives AI agents a common protocol for exchanging tasks, updates, and decisions.

Instead of every agent going off and doing its own thing independently, A2A allows them to act as a true team, sharing information, calling for help, or handing off tasks. It’s the foundation for building smart, multi-agent systems where the whole system is truly greater than the sum of the parts.

A is for Agent2Agent: Google's Agent Interoperability Initiative

Google’s Agent2Agent (A2A) protocol enters this landscape as an initiative to establish a “universal language for AI agents”. The stated objective is to enable agents, irrespective of their origin or underlying framework, to engage in substantive, collaborative dialogues. It aims to provide the linguistic and procedural toolkit for executing multi-agent workflows, where agents coordinate to complete complex tasks that a single agent can’t achieve.

Let’s take a look at the design principles:

- Agent-Centric Design: A2A aims to treat agents as autonomous entities with specialized intelligence, rather than mere functional endpoints. This distinction is important for fostering genuine multi-agent collaboration.

- Leveraging Existing Web Standards: The protocol utilizes HTTP, Server-Sent Events (SSE), and JSON-RPC. This approach aims to lower adoption barriers by building on familiar technological ground.

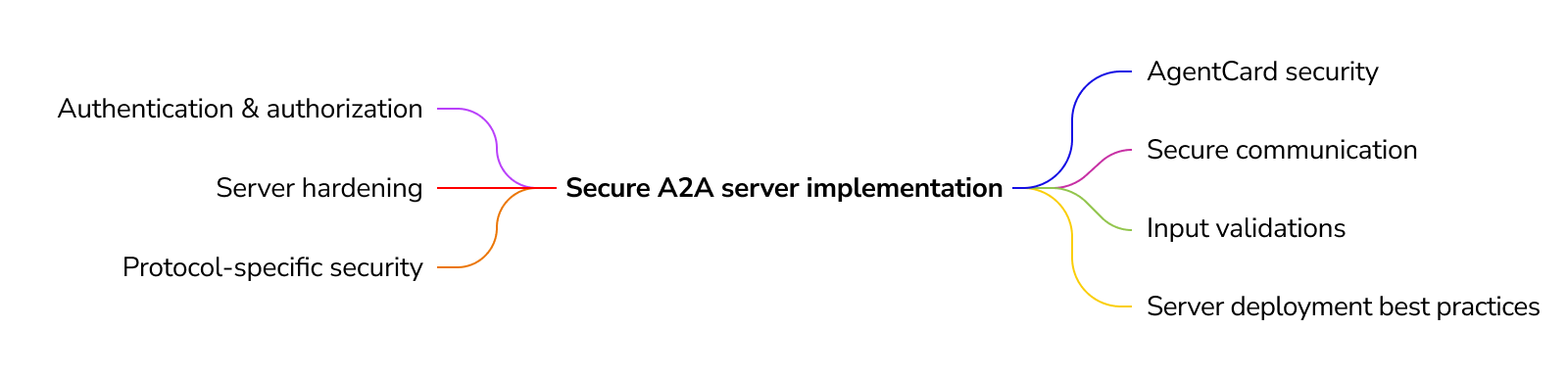

- Security as a Core Tenet: The protocol emphasizes “enterprise-grade authentication and authorization”. A2A shows a strong intent to manage authentication via Agent Cards, TLS, and JWTs, alongside granular authorization. The details are discussed in the paper “Building A Secure Agentic AI Application Leveraging A2A Protocol”.

- Support for Asynchronous, Long-Duration Tasks: Acknowledging that complex collaborative efforts are often not instantaneous, A2A is designed to manage interactions that “may take hours or even days”.

- Modality Agnosticism: The design anticipates a future beyond text, incorporating support for “various modalities, including audio and video streaming”.

In operational terms, A2A relies on:

- Agent Cards: These JSON files, typically published at https://<your-end-point>/.well-known/agent.json, serve as an agent’s discoverable profile, detailing its “capabilities, skills and authentication mechanisms”.

- Tasks: The “fundamental, stateful unit of work” in A2A, progressing through a defined lifecycle (e.g., submitted, working, completed).

- Messages and Parts: Communication within tasks occurs via Messages composed of various Part types, such as TextPart (plain text), FilePart (binary data), and DataPart (structured JSON data), which together support the protocol’s goal of multi-modal communication.
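To make the three building blocks above more concrete, here is a minimal Python sketch of a task moving through the lifecycle named in the text, carrying messages built from typed parts. The class layout, the `role` values, and the transition table are illustrative assumptions, not the official A2A schema, which may define more states and fields.

```python
from dataclasses import dataclass, field
from typing import Union

# Only the lifecycle states named in the text; the real protocol may define more.
ALLOWED_TRANSITIONS = {
    "submitted": {"working"},
    "working": {"completed"},
    "completed": set(),
}


@dataclass
class TextPart:
    text: str  # plain text


@dataclass
class FilePart:
    name: str
    data: bytes  # binary data


@dataclass
class DataPart:
    data: dict  # structured JSON data


Part = Union[TextPart, FilePart, DataPart]


@dataclass
class Message:
    role: str  # "user" or "agent" (assumed role names)
    parts: list


@dataclass
class Task:
    task_id: str
    state: str = "submitted"
    history: list = field(default_factory=list)

    def advance(self, new_state: str) -> None:
        """Move to new_state, rejecting transitions the table does not allow."""
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.state = new_state


task = Task(task_id="task-001")
task.history.append(
    Message("user", [TextPart("Summarize the attached report."),
                     FilePart("report.pdf", b"%PDF-")])
)
task.advance("working")
task.history.append(Message("agent", [DataPart({"summary": "placeholder"})]))
task.advance("completed")
print(task.state)
```

Keeping the state machine explicit is one way to make a task “stateful” in the sense the text describes: a caller cannot jump from submitted straight to completed without passing through working.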

However, the “Secure by Default” principle presents practical challenges. The comprehensive security measures outlined (mitigating Agent Card spoofing, requiring mutual TLS, robust JWT validation, and per-skill permissions) are sound in theory.

Yet, this level of security sophistication translates into a significant implementation burden. If secure A2A deployment proves overly complex, potential outcomes include developers opting for simpler, less secure alternatives or A2A being deployed with security configurations that fall short of the ideal.

The efficacy of A2A’s security model in the wild will heavily depend on the robustness and usability of SDKs like Google’s Agent Development Kit (ADK) and the clarity of its security implementation guidelines.

B is for Benchmarking: Agent2Agent in a Noisy Agent Protocol Field

A2A is one of several protocols in a developing field, with various approaches to agent communication.

AGNTCY (pronounced “agency”) is an open-source effort backed by companies including Cisco, LangChain, and LlamaIndex. AGNTCY aims to create a universal language and infrastructure for AI agents to discover, communicate, and collaborate across different platforms, envisioning an “Internet of Agents”.

Its technical foundation includes the Open Agent Schema Framework (OASF), a standardized data model for describing agent capabilities and metrics to facilitate discovery and evaluation, and AGNTCY’s Agent Connect Protocol (ACP), which defines a framework for network-based agent communication, covering aspects like authentication, configuration, and error handling. AGNTCY’s development roadmap includes phases for discovery, workflow integration, secure operation, and monitoring.

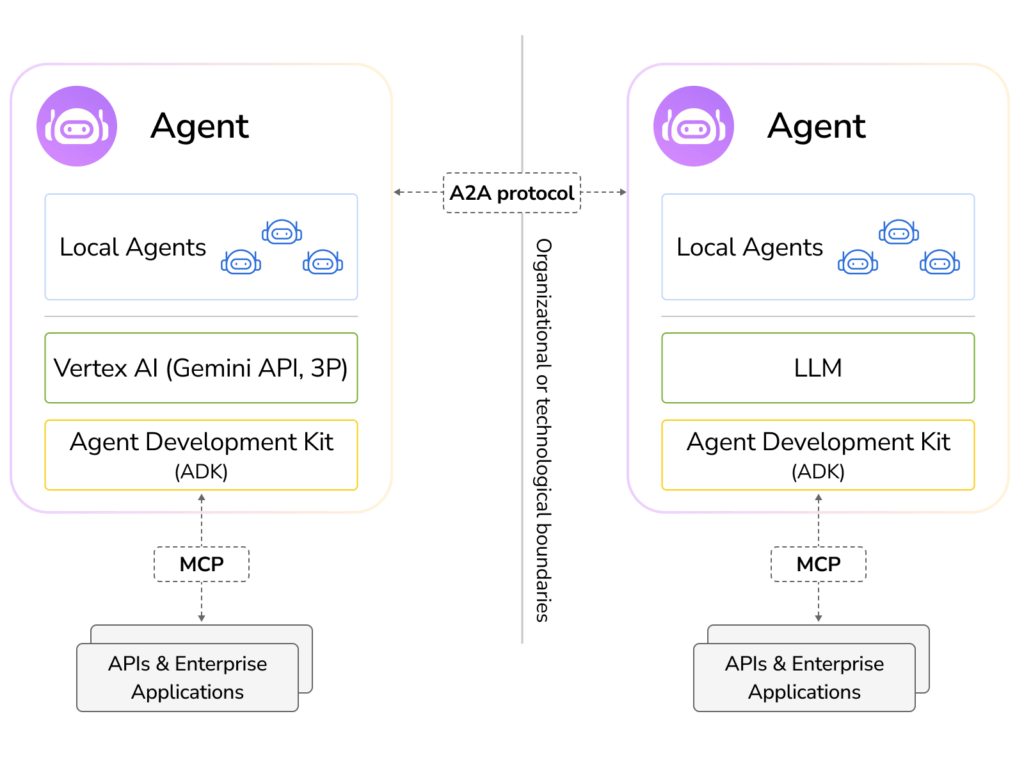

Anthropic’s Model Context Protocol (MCP), on the other hand, is primarily engineered for standardizing how LLM-based agents interface with external tools, APIs, and data sources. If A2A targets agent-to-agent collaboration, MCP focuses on agent-to-resource invocation, ensuring, for instance, that a data analysis agent can seamlessly invoke the right APIs or fetch data from an enterprise system. They address distinct, yet critically complementary, layers of the agent interaction stack. As one analysis notes, “A2A standardizes between agent communication while MCP standardizes agent-to-tools communication”.

Other protocols are also gaining attention. The Agent Communication Protocol (ACP), backed by IBM Research and the Linux Foundation’s BeeAI community, also aims for agent-to-agent collaboration using RESTful APIs and multimodal messages. The Agent Network Protocol (ANP) aims for a more inherently decentralized model, leveraging Decentralized Identifiers (DIDs) and aiming for a peer-to-peer architecture to become the “HTTP of the agent internet era”.

An earlier, pioneering effort, the FIPA Agent Communication Language (FIPA ACL), grounds agent communication in speech act theory and agent mental states. While theoretically rich, FIPA ACL’s semantic complexity and its association with platforms like JADE may have limited its broader adoption within the contemporary, web-centric development paradigm. A2A’s reliance on existing web standards appears more aligned with current developer practices.

This protocol diversity highlights different architectural bets. A2A’s current HTTP-based, client-server model (though agents can act as both client and server) relies on familiar patterns, whereas ANP pursues a decentralized, peer-to-peer design.

Furthermore, arguments exist for event-driven architectures as a foundational layer for agent communication. The choices embedded in these protocols—regarding transport, discovery, and interaction patterns—will influence the future agent internet. It’s not merely about message content, but about the structure, scalability, and resilience of the agent network itself.

C is for Critique: An Assessment of Agent2Agent's Promise

A2A’s potential for widespread adoption depends on addressing several challenges.

Potential Strengths:

- Agentic Collaboration Focus: Its design prioritizes peer-to-peer agent autonomy.

- Standard Web Technologies: Utilizes HTTP, JSON-RPC, and SSE, potentially easing initial adoption.

- Emphasis on Enterprise Security: Security considerations are integral to the protocol’s design.

- Long-Running Task Support: Accommodates complex, asynchronous workflows.

- Multi-Modal Design: Forward-looking support for diverse content types.

- Industry Collaboration: Developed with input from numerous industry partners, suggesting broad interest.

Important Concerns and Unresolved Questions:

- The Point-to-Point Scalability Dilemma: A key consideration is that the A2A model, as currently described, largely relies on direct, point-to-point connections between agents. This architectural choice could lead to complexities similar to those seen in some early microservice deployments. As one assessment puts it, “A2A takes a big step forward… But the way agents communicate still follows a familiar pattern: direct, point-to-point connections”. Related concerns are “brittle integrations” and “operational overhead”. Without a mechanism to decouple agents, the N-squared integration problem looms: every new agent may need its own connection to each existing one.

- The Imperative for an Event-Driven Architecture: Related to the point-to-point issue, there is a line of thought that A2A could benefit from an event-driven backbone or a comparable “event mesh”. An asynchronous, decoupled communication backbone would enable agents to “publish what they know and subscribe to what they need”, fostering loose coupling, enhancing fault tolerance, ensuring durable communication, and facilitating scalable real-time event flows. For complex enterprise AI agent deployments, this could be an important factor.

- Adoption and Implementation Realities: While leveraging web standards is helpful, constructing robust and secure A2A agents is an engineering task that requires care. The detailed security requirements could present challenges if not supported by high-quality SDKs and clear best practices, potentially becoming a barrier to consistent, secure implementation across a diverse developer ecosystem.

- Agent Card Governance and Discovery at Scale: Agent Cards are an effective discovery mechanism, but their governance at scale requires careful consideration. Mechanisms will be needed to prevent malicious or outdated entries. Robust governance models, potentially involving trusted registries, will be important.
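The N-squared integration problem raised in the first concern above is easy to quantify. The sketch below is plain arithmetic, not part of any A2A tooling: it assumes the worst case where every agent talks directly to every other agent, and compares that with a hypothetical hub or broker where each agent keeps a single connection.

```python
def point_to_point_links(n_agents: int) -> int:
    """Distinct agent pairs when every agent connects to every other one."""
    return n_agents * (n_agents - 1) // 2


def brokered_links(n_agents: int) -> int:
    """Connections when each agent only connects to one shared broker."""
    return n_agents


for n in (5, 20, 100):
    print(f"{n} agents: {point_to_point_links(n)} direct links, "
          f"{brokered_links(n)} brokered links")
```

With 100 agents, the fully connected case has 4950 pairwise integrations to secure and maintain, against 100 connections to a broker. Real deployments are rarely fully connected, so this is an upper bound rather than a prediction.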

A2A’s task management is designed to make task execution and tracking between agents efficient, whether tasks are done immediately or take longer to complete. The protocol emphasizes clear communication throughout the task lifecycle for success.

The point-to-point limitation may be addressable if the A2A ecosystem evolves to provide effective abstractions—for instance, through SDKs that transparently integrate event-driven patterns or via managed A2A services that abstract away communication complexities.
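As a rough illustration of the event-driven pattern mentioned here, the sketch below implements a tiny in-process publish/subscribe hub. The `EventMesh` class, the topic names, and the two agent functions are all hypothetical; a real event mesh would be a durable, networked broker, and nothing here is part of the A2A specification or SDK.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventMesh:
    """In-process stand-in for a broker: agents never call each other directly."""

    def __init__(self) -> None:
        self._handlers = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver event to every subscriber of topic; return how many received it."""
        handlers = list(self._handlers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


mesh = EventMesh()
findings = []


def diagnostics_agent(event: dict) -> None:
    # Reacts to new tickets and publishes what it knows (values are made up).
    mesh.publish("device.report", {"ticket": event["ticket"], "cpu_percent": 35})


def service_desk_agent(event: dict) -> None:
    # Subscribes only to the reports it needs.
    findings.append(event)


mesh.subscribe("ticket.opened", diagnostics_agent)
mesh.subscribe("device.report", service_desk_agent)
delivered = mesh.publish("ticket.opened", {"ticket": "T-1"})
print(delivered, findings)
```

Neither agent holds a reference to the other; adding a third subscriber to `device.report` would not require touching the diagnostics agent at all, which is the loose coupling the event-driven argument is after.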

The official “A2A Python SDK” and similar tools will be pivotal. A protocol’s success often depends on its specification, developer experience, and the practicality of building scalable, secure systems.

In the A2A architecture, agents work together by collaborating on shared tasks, using their capabilities to achieve common goals across industries like Healthcare, Supply Chain, and Finance. Agent interactions require the ability to communicate context so all parties stay clear and aligned during collaboration. The protocol also manages the agent’s response and follow-ups to keep communication and continuity throughout the workflow.

Agent2Agent Protocol Components: Under the Hood

At the heart of the A2A protocol are thoughtfully designed components to enable agent collaboration and robust agent communication across different environments. The protocol is built on 5 key principles: open standard, secure by default, multi-modal, long running tasks, and agent collaboration. These principles mean agents can not only communicate but also collaborate on complex tasks regardless of their origin or technology.

A key part of the A2A protocol is the Agent Card—a digital profile that is an agent’s discoverable identity. Like a business card, the Agent Card advertises an agent’s capabilities, supported data types and authentication requirements so other agents can discover and evaluate the best agent for a task. This is crucial for agents to find and connect with each other in a standard and secure way.
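A sketch of what such a card might look like, written as a Python dictionary that serializes to JSON. The well-known path comes from the text above; the field names, the example host, and the list of “required” fields are assumptions chosen for illustration and should be checked against the published A2A specification.

```python
import json

# Assumed minimum for this sketch, not the official list of required fields.
REQUIRED_FIELDS = ("name", "url", "skills", "authentication")


def agent_card_url(base_url: str) -> str:
    """Build the well-known location where an agent publishes its card."""
    return base_url.rstrip("/") + "/.well-known/agent.json"


def missing_fields(card: dict) -> list:
    """Return the assumed-required fields that the card does not contain."""
    return [name for name in REQUIRED_FIELDS if name not in card]


agent_card = {
    "name": "Device Diagnostics Agent",
    "description": "Checks device health (CPU, memory, disk I/O).",
    "url": "https://agents.example.com/diagnostics",  # hypothetical endpoint
    "authentication": {"schemes": ["bearer"]},
    "skills": [
        {
            "id": "device-health",
            "name": "Device health check",
            "inputModes": ["text"],
            "outputModes": ["data"],
        }
    ],
}

print(agent_card_url("https://agents.example.com/"))
print(json.dumps(agent_card, indent=2))
print(missing_fields(agent_card))
```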

The A2A Server is the secure gateway for agent interactions: it handles incoming task requests, manages the task lifecycle, and enforces enterprise-grade authentication and authorisation. This means only authorised agents can initiate or participate in collaborative workflows. On the other side, the A2A Client is responsible for discovering other agents, initiating communication, and selecting the best agent for collaboration based on advertised capabilities.
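The client-side selection step could look something like the following. This is a simplified sketch: the card structure, skill identifiers, and the first-match strategy are assumptions made for the example, and a production client would likely also weigh authentication requirements, cost, or trust.

```python
from typing import Optional


def select_agent(cards: list, skill_id: str, input_mode: str) -> Optional[dict]:
    """Return the first card advertising skill_id that accepts input_mode."""
    for card in cards:
        for skill in card.get("skills", []):
            if skill.get("id") == skill_id and input_mode in skill.get("inputModes", []):
                return card
    return None


# Hypothetical cards, as a client might have fetched them during discovery.
cards = [
    {
        "name": "Device Diagnostics Agent",
        "skills": [{"id": "device-health", "inputModes": ["text"]}],
    },
    {
        "name": "Network Analyst Agent",
        "skills": [{"id": "network-latency", "inputModes": ["text", "data"]}],
    },
]

chosen = select_agent(cards, "network-latency", "data")
print(chosen["name"] if chosen else "no suitable agent")
```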

A2A is modality agnostic so agents can exchange a wide variety of data types—not just text but also images, audio and structured data—enabling rich multi-modal interactions. The protocol uses JSON-RPC for structured messaging and Server-Sent Events (SSE) to provide real time feedback and status updates during task execution. This is particularly useful for long-running tasks as agents can share progress, intermediate results and final outcomes in a way that supports complex asynchronous workflows.
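The two wire formats mentioned here can be sketched with the standard library alone. The JSON-RPC 2.0 envelope (`jsonrpc`, `id`, `method`, `params`) and the SSE `data:` line framing are standard; the method name `tasks/send`, the parameter shape, and the event payloads are placeholders for illustration and may not match the current A2A specification.

```python
import json
import uuid


def build_request(method: str, params: dict) -> str:
    """Wrap a call in a JSON-RPC 2.0 request envelope."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": str(uuid.uuid4()), "method": method, "params": params}
    )


def parse_sse(stream_text: str) -> list:
    """Collect the JSON payload of each SSE event (events end at a blank line)."""
    events, data_lines = [], []
    for line in stream_text.splitlines():
        if line.startswith("data:"):
            data_lines.append(line[len("data:"):].strip())
        elif line == "" and data_lines:
            events.append(json.loads("\n".join(data_lines)))
            data_lines = []
    if data_lines:  # stream ended without a trailing blank line
        events.append(json.loads("\n".join(data_lines)))
    return events


request = build_request(
    "tasks/send",  # placeholder method name
    {"taskId": "task-001", "message": {"parts": [{"type": "text", "text": "Run diagnostics"}]}},
)

# What a server might stream back while a long-running task progresses.
sample_stream = (
    'data: {"taskId": "task-001", "state": "working"}\n\n'
    'data: {"taskId": "task-001", "state": "completed"}\n\n'
)

print(request)
print([event["state"] for event in parse_sse(sample_stream)])
```

In a real client the stream would arrive incrementally over an open HTTP response rather than as one string, but the framing logic is the same.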

By following these five principles and using robust technical components, the A2A protocol allows agents to collaborate seamlessly, exchange information securely, and tackle complex multi-step tasks. The open standard and enterprise-grade security make it a strong foundation for building interoperable multi-agent systems that can scale across organisational boundaries and different application domains.

The Integration Imperative: MCP + A2A

The potential synergy between A2A and the Model Context Protocol (MCP) is a notable aspect of the current agent protocol landscape. These protocols are not mutually exclusive; they address complementary, yet distinct, facets of agent functionality.

“MCP provides vertical integration (application-to-model), while A2A provides horizontal integration (agent-to-agent)”. MCP enables AI agents to interact “downwards” with their operational environment: tools, databases, and APIs. A2A allows agents to communicate “laterally” with peers. An analogy suggests that MCP equips an agent with its individual toolkit and knowledge access, while A2A enables agents to function as part of a collaborative team. Both are essential for sophisticated agent-based systems.

| Feature | Google’s A2A | Anthropic’s MCP |
| --- | --- | --- |
| Primary Goal | Agent-to-agent collaboration & task delegation | Standardize agent interaction with tools & data sources |
| Key Components | Agent Cards, Tasks, Messages, Parts | Tools, Resources, Prompts (JSON-RPC messages) |
| Integration | Horizontal (Agent ↔ Agent) | Vertical (Agent ↔ Tool/Data) |
| Analogy | Agents forming a project team | Agent utilizing its individual toolkit |

Consider an IT support workflow: An IT Service Desk Agent (A2A-enabled) receives a multifaceted ticket from a user reporting “slow application performance.”

- The Service Desk Agent uses A2A to delegate a sub-task to a Device Diagnostics Agent.

- The Device Diagnostics Agent employs MCP to interface with endpoint management tools (like an MDM or system monitoring service) to check the user’s device health (CPU, memory, disk I/O).

- Concurrently, the Service Desk Agent tasks a Network Analyst Agent (via A2A).

- The Network Analyst Agent uses MCP to query network monitoring tools (like Datadog or a packet analyzer) to check for latency or packet loss on the user’s connection.

- The specialized agents return their findings (a device health report, a network status summary) as A2A artifacts to the Service Desk Agent.

- The Service Desk Agent synthesizes this information to determine the root cause and provide a solution.

In such scenarios, this IT Service Desk Agent acts as an “Orchestrator.” It decomposes the high-level problem (“slow application”), delegates diagnostic tasks via A2A, and aggregates the results. Meanwhile, the specialized agents leverage MCP servers for their tool interactions. The robustness of these orchestrators can influence the viability of complex, automated IT support systems. However, managing the interplay of two distinct protocols and ensuring seamless handoffs presents engineering considerations.
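The orchestration pattern in this walkthrough can be sketched with `asyncio`. Everything below is a stand-in: the agent functions simulate A2A delegation with local coroutines, the `asyncio.sleep(0)` calls mark where an MCP tool call would happen, and the metric values and thresholds are invented for the example.

```python
import asyncio


async def device_diagnostics_agent(ticket: str) -> dict:
    await asyncio.sleep(0)  # placeholder for an MCP call to an endpoint management tool
    return {"agent": "device", "cpu_percent": 35, "disk_io": "normal"}


async def network_analyst_agent(ticket: str) -> dict:
    await asyncio.sleep(0)  # placeholder for an MCP call to a network monitoring tool
    return {"agent": "network", "latency_ms": 480, "packet_loss_percent": 3.0}


def synthesize(reports: list) -> dict:
    """Pick a root cause from the returned artifacts (thresholds are illustrative)."""
    by_agent = {report["agent"]: report for report in reports}
    network, device = by_agent["network"], by_agent["device"]
    if network["latency_ms"] > 200 or network["packet_loss_percent"] > 1.0:
        return {"root_cause": "network", "evidence": network}
    if device["cpu_percent"] > 90:
        return {"root_cause": "device", "evidence": device}
    return {"root_cause": "undetermined", "evidence": reports}


async def service_desk_agent(ticket: str) -> dict:
    # Delegate both sub-tasks concurrently, then aggregate the findings.
    reports = await asyncio.gather(
        device_diagnostics_agent(ticket),
        network_analyst_agent(ticket),
    )
    return synthesize(list(reports))


result = asyncio.run(service_desk_agent("slow application performance"))
print(result["root_cause"])
```

Because the two diagnostics run concurrently, the orchestrator waits only as long as the slower of the two, which matters once the sub-tasks become long-running.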

A2A Protocol FAQs

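What is the A2A protocol?

A2A (Agent2Agent) is a protocol from Google that gives AI agents a common way to discover each other and to exchange tasks, updates, and decisions, regardless of their origin or underlying framework.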

What is the difference between A2A and MCP?

In short:

MCP = model-to-tools, while A2A = agent-to-agent collaboration.

MCP enriches an individual agent, whereas A2A enables multi-agent systems.

What is A2A authentication?

Through A2A authentication, each agent proves its identity before tasks, workflows, or sensitive data are shared, enabling secure multi-agent automation at scale.
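As one possible illustration of an agent proving its identity, the sketch below issues and verifies a JWT-style token signed with HMAC-SHA256, using only the standard library. It is a teaching example under several assumptions: the shared secret, the `sub` and `exp` claims, and the function names are all hypothetical, A2A does not prescribe this exact scheme, and a production system would use a vetted JWT library, most likely with asymmetric keys.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str, secret: bytes) -> bytes:
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(agent_id: str, secret: bytes, ttl_seconds: int = 300) -> str:
    """Create a signed token naming the calling agent, valid for ttl_seconds."""
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {"sub": agent_id, "exp": int(time.time()) + ttl_seconds}
    signing_input = ".".join(
        _b64url(json.dumps(part).encode("utf-8")) for part in (header, claims)
    )
    return signing_input + "." + _b64url(_sign(signing_input, secret))


def verify_token(token: str, secret: bytes) -> Optional[dict]:
    """Return the claims if the signature and expiry check out, else None."""
    try:
        header_b64, claims_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        if not isinstance(header, dict) or header.get("alg") != "HS256":
            return None
        expected = _sign(f"{header_b64}.{claims_b64}", secret)
        # Constant-time comparison, done before the claims are trusted.
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        claims = json.loads(_b64url_decode(claims_b64))
    except ValueError:
        return None
    if not isinstance(claims, dict) or claims.get("exp", 0) <= time.time():
        return None
    return claims


shared_secret = b"demo-secret-do-not-reuse"  # hypothetical; never hard-code real keys
token = issue_token("service-desk-agent", shared_secret)
claims = verify_token(token, shared_secret)
print(claims["sub"] if claims else "rejected")
```

A receiving agent would run a check like `verify_token` before accepting a task, and reject the request outright when it returns `None`.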