What is HIPAA Compliant AI?

HIPAA compliant AI refers to artificial intelligence systems specifically architected for the healthcare sector that adhere to the strict standards of the HIPAA Privacy Rule, Security Rule, and Breach Notification Rule.

For an AI system to be truly compliant, it must do more than just “not save data.” In practice, this means building on a Zero-Trust Architecture that protects the confidentiality, integrity, and availability of electronic Protected Health Information (ePHI).

Key technical characteristics include:

- Zero-Data Retention: The system processes data to generate an answer or perform an action, but does not retain the raw input afterward or use it for model training.

- Encryption at Rest and in Transit: Data is encrypted at rest (using AES-256) and in transit (using TLS 1.2 or higher).

- Business Associate Agreement (BAA): The vendor must legally sign a BAA, accepting liability for safeguarding the data they process.

- De-Identification: Advanced systems automatically redact or tokenize Personally Identifiable Information (PII) before it reaches the Large Language Model (LLM).
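To make the de-identification step concrete, here is a minimal Python sketch of tokenization. The `tokenize_phi` and `detokenize` helpers and the regex patterns are hypothetical illustrations, not any vendor’s implementation. They catch only a few structured identifiers (SSN, dates, phone numbers, MRNs); production systems must also handle names, addresses, and the rest of HIPAA’s identifier list, usually with trained entity-recognition models.

```python
import re
import secrets
from typing import Dict, Tuple

# Hypothetical patterns for a few structured identifiers. HIPAA's Safe Harbor
# method lists 18 identifier types; names and addresses in particular need
# far more than regexes (usually a trained entity-recognition model).
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "DATE": re.compile(r"\b\d{2}/\d{2}/\d{2,4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:#]?\s*\d{6,10}\b"),
}


def tokenize_phi(text: str) -> Tuple[str, Dict[str, str]]:
    """Swap each matched identifier for a random token; return text and token map."""
    vault: Dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def swap(match, label=label):
            token = f"[{label}_{secrets.token_hex(4)}]"
            vault[token] = match.group(0)  # original value stays on your side
            return token
        text = pattern.sub(swap, text)
    return text, vault


def detokenize(text: str, vault: Dict[str, str]) -> str:
    """Put original values back into the model's response, inside your environment."""
    for token, original in vault.items():
        text = text.replace(token, original)
    return text
```

Only the redacted text would be sent to the LLM. The `vault` mapping is used to restore values in the response and should itself be handled as PHI.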

HIPAA Compliant AI in 2026

As we move through 2026, nearly 90% of healthcare leaders identify AI as critical for improving patient access, streamlining operational efficiency, and reducing clinician burnout. Yet, despite this urgency, adoption often stalls at the pilot stage. The barrier isn’t technology; it’s trust in AI.

The Health Insurance Portability and Accountability Act (HIPAA) remains the single biggest hurdle preventing healthcare organizations from moving AI initiatives into production. The stakes are incredibly high: a single data breach can result in massive federal fines, loss of patient trust, and irreparable reputational damage. In the context of AI, where data is the fuel, ensuring compliance is more complex than ever.

However, the technology landscape is shifting. We are moving beyond the era of passive, generative AI (GenAI) toward Agentic AI systems. These are customizable virtual agents designed not just to talk but to act, automating complex workflows such as appointment scheduling, billing, and triage within strict regulatory guardrails.

In this comprehensive guide, we review the 7 best HIPAA-compliant AI tools and agents for 2026. We will also break down the specific architectural requirements for compliance, the difference between Generative and Agentic AI, and how to evaluate vendors to ensure your Protected Health Information (PHI) remains secure.

Why "Agentic AI" is Safer than GenAI

Standard Generative AI is probabilistic: it predicts the next word in a sentence. While powerful, this open-ended nature creates risks of “hallucinations” (fabricating facts) and data leakage. Agentic AI takes a different approach. Instead of relying on open-ended text generation, agentic systems are designed around deterministic, policy-driven workflows.

- Separation of Action and Generation: An AI agent might use an LLM to understand the user’s intent (e.g., “I need a refill”), but the action (checking the prescription database) is performed by a secure, deterministic script.

- Auditability: Every step an agent takes is logged. Unlike a black-box chatbot, an agentic workflow leaves a clear audit trail showing exactly which database was accessed and why.

- Guardrails: Agentic AI operates within strict boundaries. It cannot “improvise” medical advice; it can only execute pre-approved workflows defined by the healthcare provider.
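The three properties above can be sketched together in a few lines of Python. This is a minimal illustration under stated assumptions: `keyword_classifier` stands in for the LLM intent step, and the two workflow functions are hypothetical placeholders for real EHR or pharmacy integrations. The structure is the point: the model only picks a label, the label must match an allowlisted workflow, anything else is escalated to a human, and every decision is logged.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List


def keyword_classifier(message: str) -> str:
    """Stand-in for the LLM intent step (assumption: real systems call a model here)."""
    text = message.lower()
    if "refill" in text:
        return "refill"
    if "appointment" in text:
        return "schedule"
    return "unknown"


# Hypothetical deterministic workflows; real ones would call EHR/pharmacy APIs.
def check_refill_status(patient_id: str) -> str:
    return f"Refill request logged for patient {patient_id}"


def book_appointment(patient_id: str) -> str:
    return f"Scheduling workflow started for patient {patient_id}"


# Allowlist: the only actions the agent is permitted to take.
WORKFLOWS: Dict[str, Callable[[str], str]] = {
    "refill": check_refill_status,
    "schedule": book_appointment,
}


@dataclass
class Agent:
    classify_intent: Callable[[str], str]
    audit_log: List[dict] = field(default_factory=list)

    def handle(self, patient_id: str, message: str) -> str:
        intent = self.classify_intent(message)  # probabilistic: only picks a label
        workflow = WORKFLOWS.get(intent)
        if workflow is None:
            action, result = "escalate", "Escalated to human staff"  # no improvising
        else:
            action, result = workflow.__name__, workflow(patient_id)  # deterministic
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "patient_id": patient_id,
            "intent": intent,
            "action": action,
        })
        return result
```

A request like “Should I double my dose?” matches no workflow, so the agent escalates instead of generating medical advice, and the log records that it did.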

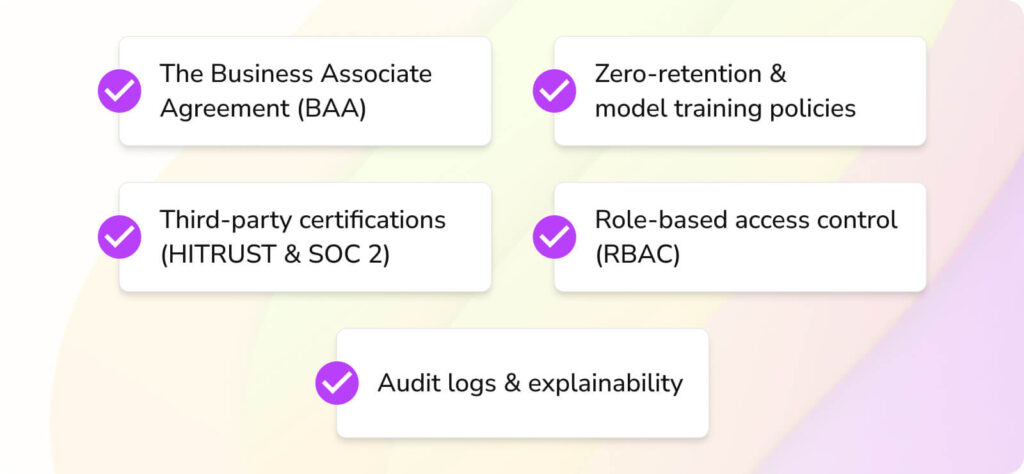

The Evaluation Framework: How to Choose Safe AI

Before looking at specific tools, healthcare buyers must understand how to evaluate them. Your organization acts as the “Covered Entity,” and you are responsible for vetting your “Business Associates” (vendors). Use these five pillars to judge any AI tool.

1. The Business Associate Agreement (BAA)

This is non-negotiable. If a vendor refuses to sign a BAA, the conversation ends. The BAA is a legally binding contract that specifies the vendor’s responsibilities regarding PHI. It must cover:

- Permitted Uses: Clearly stating the vendor cannot use your patient data to train their public models.

- Breach Notification: The vendor must notify you of a breach without unreasonable delay. HIPAA sets the outer limit at 60 calendar days after discovery, and many BAAs negotiate a shorter window.

- Subcontractors: Ensuring the vendor’s downstream partners (e.g., the cloud host it uses) are bound by the same safeguards through their own BAAs.
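When reviewing a BAA’s notification clause, it can help to compute the actual deadline. The sketch below assumes the HIPAA outer limit for business associates (no later than 60 calendar days after discovery, per 45 CFR 164.410) and lets a stricter contractual window override it. `notification_deadline` is a hypothetical helper, and the result is a ceiling rather than a target, since the rule also requires notice without unreasonable delay.

```python
from datetime import date, timedelta
from typing import Optional

# Outer limit for a business associate to notify the covered entity
# (45 CFR 164.410): no later than 60 calendar days after discovery.
REGULATORY_MAX_DAYS = 60


def notification_deadline(discovered: date, contract_days: Optional[int] = None) -> date:
    """Latest permissible notice date; a stricter BAA window takes precedence."""
    days = REGULATORY_MAX_DAYS
    if contract_days is not None:
        days = min(days, contract_days)  # a BAA can shorten, never extend, the limit
    return discovered + timedelta(days=days)
```

For a breach discovered on January 1, 2026, the regulatory ceiling falls on March 2, 2026; a BAA with a 10-day clause moves it to January 11.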

2. Zero-Retention & Model Training Policies

The biggest risk is data leakage via training: your PHI being absorbed into a shared model that serves other customers. You must verify that the vendor operates a segregated instance.

- Ask: “Does my data leave my dedicated instance?”

- Ask: “Is my data used to fine-tune your global base model?”

The answer to both should be No. Your data should only be used to train your specific instance (local fine-tuning) or not stored at all.

3. Third-Party Certifications (HITRUST & SOC 2)

Self-attestation is not enough. Look for third-party validation:

- SOC 2 Type II: Proves the vendor has sustained security controls over a period of time (usually 6 to 12 months), not just at a single point in time.

- HITRUST CSF: The “gold standard” for healthcare security. It aggregates HIPAA, NIST, and ISO requirements into a rigorous certification framework.

4. Role-Based Access Control (RBAC)

HIPAA’s “Minimum Necessary” rule states that staff should only access data required for their job. A compliant AI tool must support granular RBAC. For example, a scheduling bot should see calendar availability but not clinical diagnosis notes. The system must enforce these permissions automatically.
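A deny-by-default, field-level filter is one simple way to express “minimum necessary” in code. The roles, field names, and `minimum_necessary_view` helper below are hypothetical; in a real deployment the role mapping would come from your identity provider, and the filter would sit in the integration layer before any data reaches the AI agent.

```python
from typing import Any, Dict, FrozenSet

# Hypothetical role-to-field policy expressing "minimum necessary".
ROLE_FIELDS: Dict[str, FrozenSet[str]] = {
    "scheduling_bot": frozenset({"patient_id", "name", "next_available_slot"}),
    "billing_agent": frozenset({"patient_id", "name", "insurance_id", "balance_due"}),
    "clinician": frozenset({"patient_id", "name", "next_available_slot",
                            "diagnosis_notes", "medications"}),
}


def minimum_necessary_view(record: Dict[str, Any], role: str) -> Dict[str, Any]:
    """Return only the fields the role may see; unknown roles are denied outright."""
    allowed = ROLE_FIELDS.get(role)
    if allowed is None:
        raise PermissionError(f"no access policy for role {role!r}")
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "patient_id": "p-001",
    "name": "Jane Roe",
    "next_available_slot": "2026-03-02T09:30",
    "diagnosis_notes": "(clinical text)",
    "insurance_id": "INS-42",
}
# The scheduling bot never receives diagnosis_notes or insurance_id.
print(minimum_necessary_view(record, "scheduling_bot"))
```

Denying unknown roles, rather than returning everything, keeps a misconfigured or new integration from silently seeing full records.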

5. Audit Logs & Explainability

If an AI agent denies a claim or prioritizes a patient, you must be able to explain why. Compliant tools provide immutable logs of all interactions. This is critical for post-incident forensics and for passing OCR (Office for Civil Rights) audits.
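“Immutable” logs are usually approximated with append-only, write-once storage plus tamper evidence. The sketch below shows only the tamper-evidence part: each entry stores the SHA-256 hash of the previous entry, so editing any past record breaks the chain on verification. The `AuditLog` class is a hypothetical illustration; on its own it cannot stop someone from rewriting the entire log, which is why production systems also keep hashes in separate, write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List


class AuditLog:
    """Append-only log where each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, action: str, resource: str) -> dict:
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "resource": resource,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

During an OCR audit or incident review, `verify()` gives a quick check that the recorded sequence of agent actions has not been altered after the fact.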

Top 7 HIPAA-Compliant AI Tools & Agents (Ranked)

We have ranked these solutions based on their security architecture, feature depth, and readiness for enterprise deployment.

Quick Comparison Table

| Rank | Tool | Best Use Case | Compliance Highlights |
|---|---|---|---|
| 1 | Aisera | Agentic Workflow Automation | Zero-Retention, SOC 2 Type II, BAA |
| 2 | Microsoft Azure AI | Clinical Documentation | HITRUST, Azure BAA, ISO 27001 |
| 3 | OpenAI (Enterprise) | Knowledge Management | SOC 2, Enterprise BAA, Data Exclusion |
| 4 | Google Vertex AI | Custom App Development | FedRAMP High, BAA, FHIR Support |
| 5 | Corti | Emergency Triage (Voice) | Real-time Redaction, GDPR/HIPAA |
| 6 | Hyro | Digital Front Door | ISO 27001, HITRUST alignment |
| 7 | Ambience / Nabla | Ambient Scribing | End-to-End Encryption, BAA |

1. Aisera

Best For: Enterprise-grade Agentic AI & Service Automation.

Aisera stands out as a purpose-built AI Service Management (AISM) platform. Unlike generic LLM wrappers, Aisera provides a comprehensive “AI Agent” layer that sits between the user and your backend systems (EHR, ITSM). It is designed to resolve requests such as “Schedule an appointment for Dr. Smith” or “Reset my portal password” end-to-end, without human intervention.

Why it’s a top pick:

Aisera utilizes a proprietary TRIPS (Trusted Risk-Intelligence Pre-trained System) architecture. It doesn’t just generate text; it classifies intent and executes deterministic workflows. This reduces the “hallucination” risk significantly.

- Security: Offers a zero-retention architecture where customer data is isolated. It holds SOC 2 Type II certification and provides a comprehensive BAA.

- Agentic Capabilities: Can autonomously update patient records, process referral documents, and handle billing inquiries by integrating deeply with Epic, Cerner, and Salesforce.

2. Microsoft Azure AI / Nuance

Best For: Clinical documentation and ambient listening.

Microsoft’s acquisition of Nuance has created a powerhouse in clinical AI. The Nuance DAX (Dragon Ambient eXperience) Copilot uses ambient voice technology to listen to patient-doctor conversations and automatically draft clinical notes in the EHR. This runs on the Azure cloud, which is widely regarded as one of the most secure infrastructures in the world.

Why it’s a top pick:

It directly addresses physician burnout, especially “pajama time,” the after-hours documentation clinicians finish at home. Because it runs on Azure, it inherits Microsoft’s extensive compliance framework.

- Security: HITRUST CSF certified. Azure allows for specific geographic data residency (keeping data in the US).

- Integration: Deep native integration with Epic and other major EHRs creates a seamless, secure data flow.

3. OpenAI (ChatGPT Enterprise)

Best For: Internal knowledge search, summarization, and drafting.

While the free version of ChatGPT is a major compliance risk (see below), ChatGPT Enterprise is a different beast entirely. It was built to satisfy corporate legal teams. It allows healthcare organizations to deploy a secure workspace where staff can use GPT-4 to summarize medical journals, draft policy documents, or translate patient instructions.

Why it’s a top pick:

It offers the raw power of one of the world’s most capable LLMs with the legal protections required by healthcare.

- Security: Data encryption at rest (AES-256) and in transit (TLS 1.2+).

- Data Promise: OpenAI explicitly guarantees that data submitted via the Enterprise API or workspace is not used to train their foundational models.

- SSO: Supports Single Sign-On (SSO) for managing staff access securely.
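Vendor-side encryption protects the vendor’s servers, but your own integrations should also refuse weak transport. As a sketch using only Python’s standard `ssl` module, a client context can enforce the TLS 1.2 floor mentioned above for any HTTPS call to an AI vendor’s API. The `make_tls_context` name is our own, and which endpoint you call is up to your integration.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Client TLS context that verifies certificates and refuses anything below TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname verification enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The context can be passed to `urllib.request.urlopen(url, context=ctx)` or to other HTTP clients that accept an `ssl.SSLContext`; a server offering only TLS 1.0 or 1.1 will then fail the handshake instead of silently downgrading.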

4. Google Cloud Healthcare API / Vertex AI

Best For: Developers and Engineering teams building custom apps.

For large hospital systems or health-tech startups building their own AI applications, Google Cloud is a common choice. Vertex AI Search for Healthcare allows organizations to build search engines on top of their own clinical data. It natively supports FHIR (Fast Healthcare Interoperability Resources) and HL7 standards, making interoperability easier.

Why it’s a top pick:

It is infrastructure-first. You aren’t buying a chatbot; you are buying the secure building blocks to create one.

- Security: Google will sign a BAA covering a vast array of its cloud services, and many of those services hold FedRAMP High authorization (a U.S. government security baseline).

- Features: Specialized models like MedLM are fine-tuned specifically for medical domains.
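For readers new to FHIR, the interoperability described above rests on shared resource formats. Below is a minimal FHIR R4 `Patient` resource in JSON (exchanged with the `application/fhir+json` media type) and a small reader for it. The patient data and the `urn:example:mrn` identifier system are fictional, and `display_name` is a hypothetical helper; a real application would typically use a FHIR client library and handle multiple names, `use` codes, and missing fields.

```python
import json
from typing import Any, Dict

# Minimal FHIR R4 Patient resource with fictional data. The identifier
# system "urn:example:mrn" is a placeholder for your organization's MRN system.
patient_json = json.dumps({
    "resourceType": "Patient",
    "id": "example-123",
    "identifier": [{"system": "urn:example:mrn", "value": "00012345"}],
    "name": [{"family": "Doe", "given": ["John"]}],
    "birthDate": "1980-01-01",
})


def display_name(resource: Dict[str, Any]) -> str:
    """Hypothetical helper: build a display name from the first HumanName entry."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a FHIR Patient resource")
    first = (resource.get("name") or [{}])[0]
    parts = list(first.get("given", [])) + [first.get("family", "")]
    return " ".join(p for p in parts if p)


resource = json.loads(patient_json)
print(display_name(resource), resource["birthDate"])
```

Because every FHIR-capable system agrees on field names such as `name`, `given`, `family`, and `birthDate`, an AI application built on one FHIR store can read records produced by another EHR without custom mapping for these basics.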

5. Corti

Best For: Emergency dispatch, 911, and high-stakes telehealth.

Corti acts as an AI “copilot” for emergency consultations. It listens to the audio of a call in real time and detects patterns, such as the sound of agonal breathing (a sign of cardiac arrest), then alerts the dispatcher to upgrade the response urgency.

Why it’s a top pick:

It operates in high-pressure environments where accuracy is life-or-death.

- Security: Corti is GDPR and HIPAA compliant. It uses rigorous data redaction techniques to ensure that audio training data is stripped of PII.

- Speed: Low-latency processing delivers insights while the call is still in progress.

6. Hyro

Best For: “Digital Front Door” call deflection and website chat.

Hyro specializes in Adaptive Communications. It is widely used to replace rigid “press 1 for billing” IVR systems with conversational AI. Hyro focuses heavily on the patient access side: finding doctors, scheduling appointments, and answering FAQs.

Why it’s a top pick:

Hyro is “plug-and-play” compared to heavy platform builds. It scrapes your website and provider directory to create a knowledge base automatically.

- Security: ISO 27001 certified and HIPAA compliant. Hyro emphasizes explainability, allowing admins to see exactly why the AI gave a specific answer.

7. Ambience Healthcare / Nabla

Best For: Automated scribing (Alternative to Nuance).

Tools like Ambience and Nabla represent the agile startup wave of “AI Scribes.” They are often faster to deploy than Nuance and offer specialty-specific models (e.g., scribes tuned for Oncology or Psychiatry).

Why it’s a top pick:

These tools capture the “patient story” efficiently, often generating referral letters and patient instructions alongside the clinical note.

- Security: Both vendors sign BAAs and utilize strict data encryption protocols. They focus on ephemeral processing where data is processed and then pushed to the EHR, minimizing retention risk.

The "Public Trap": Risks of Shadow AI

The rise of accessible AI has created a phenomenon known as “Shadow AI,” where staff use non-approved tools to do their work.

Do NOT use the free version of ChatGPT for PHI.

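Standard, consumer-grade AI tools (like the free ChatGPT, Claude, or Gemini) may retain user inputs and, depending on account settings, use them to train and improve their models. Just as important, these consumer tiers do not come with a BAA.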

- The Risk: If a doctor pastes a patient note saying, “Patient John Doe, DOB 01/01/80, has Condition X,” that data leaves your control and may be stored on the vendor’s servers. If it is used for training, it could potentially be regurgitated to another user in the future.

- The Penalty: Pasting PHI into a tool without a BAA is an impermissible disclosure. Under the HITECH Act, penalties can reach $50,000 per violation, with an annual cap of $1.5 million for identical violations; HHS adjusts these figures for inflation each year.
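To see how the annual cap interacts with the per-violation figure, here is the arithmetic as a short Python function. It is a simplified upper bound using the figures quoted above (the original HITECH amounts); it ignores inflation adjustments, per-tier minimums, and the lower annual caps HHS applies to less culpable tiers, so it illustrates the cap rather than predicting an actual penalty. `max_annual_exposure` is a hypothetical name.

```python
# Per-violation maximum and annual cap quoted above (pre-inflation HITECH figures).
MAX_PER_VIOLATION = 50_000
ANNUAL_CAP_IDENTICAL = 1_500_000


def max_annual_exposure(identical_violations: int) -> int:
    """Simplified upper bound for one calendar year of identical violations."""
    if identical_violations < 0:
        raise ValueError("violation count cannot be negative")
    return min(identical_violations * MAX_PER_VIOLATION, ANNUAL_CAP_IDENTICAL)
```

If each pasted note counted as a separate violation, 20 notes would give 20 × $50,000 = $1,000,000, while 40 notes would reach $2,000,000 but stop at the $1.5 million cap.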

Mitigation: Implement network-level blocking of public AI sites and provide staff with a sanctioned, HIPAA-compliant alternative (like the tools listed above) to remove the temptation.
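Network-level blocking is normally configured in a DNS filter, secure web gateway, or proxy rather than written by hand, but the matching logic such a policy applies looks roughly like the sketch below. The domain lists are assumptions for illustration (`ai.example-hospital.org` is a placeholder for your sanctioned tool), and the sanctioned list is checked first. One caveat: if a sanctioned enterprise workspace is served from the same domain as the consumer product, domain blocking alone cannot tell them apart, and tenant-level controls from your gateway or vendor are needed.

```python
from typing import Set

# Hypothetical policy lists for illustration only.
BLOCKED_DOMAINS: Set[str] = {"chatgpt.com", "claude.ai", "gemini.google.com"}
SANCTIONED_DOMAINS: Set[str] = {"ai.example-hospital.org"}


def _matches(host: str, domains: Set[str]) -> bool:
    """True if host equals a listed domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def is_blocked(host: str) -> bool:
    if _matches(host, SANCTIONED_DOMAINS):
        return False  # sanctioned tools are always allowed
    return _matches(host, BLOCKED_DOMAINS)
```

Matching on a leading dot (`.chatgpt.com`) catches subdomains without accidentally blocking unrelated hosts such as `notchatgpt.com`.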

The Shared Responsibility Model

One of the most misunderstood aspects of AI compliance is the Shared Responsibility Model. Just because you buy a HIPAA-compliant tool does not mean you are automatically compliant.

- Vendor Responsibility (Security OF the Cloud): The AI provider is responsible for the physical security of the servers, encryption of stored and transmitted data, network security, and the safety of the underlying AI model. It must keep that infrastructure hardened against attack.

- Customer Responsibility (Security IN the Cloud):

- Access Management: Ensuring only the right staff have logins. If a doctor leaves and you don’t revoke their AI access, that is your breach, not the vendor’s.

- Input Data: Ensuring you have patient consent to use AI tools (if required by your Notice of Privacy Practices).

- Configuration: Setting up the tool correctly (e.g., enabling Multi-Factor Authentication).
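The access-management and configuration duties above lend themselves to a routine automated check. The sketch below compares a hypothetical export of AI-tool accounts against the current staff roster from HR, flagging departed users who still have access and active users without MFA. `AIAccount` and `access_review` are illustrative names; how you export accounts depends on each vendor’s admin tools.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class AIAccount:
    user: str
    active: bool
    mfa_enabled: bool


def access_review(accounts: List[AIAccount], current_staff: Set[str]) -> List[str]:
    """Flag accounts that violate basic customer-side duties."""
    findings: List[str] = []
    for acct in accounts:
        if not acct.active:
            continue  # already deprovisioned
        if acct.user not in current_staff:
            findings.append(f"{acct.user}: departed staff with active AI access")
        elif not acct.mfa_enabled:
            findings.append(f"{acct.user}: MFA not enabled")
    return findings
```

Running a check like this on a schedule, and after every HR termination event, turns “revoke access when a doctor leaves” from a good intention into a verifiable control.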

Compliance is a partnership. A BAA protects you legally, but proper configuration protects you practically.

Conclusion: The Future is Autonomous

Through 2026, the healthcare industry is moving toward a model of Secure Autonomy. The initial fear of AI is being replaced by a pragmatic understanding of the guardrails needed to control it.

The winners in this space will not be the organizations that avoid AI to escape risk, but those that adopt HIPAA-compliant Agentic AI. By leveraging agents that can securely schedule, document, and analyze within a protected framework, healthcare providers can finally break the “Iron Triangle” of healthcare, improving access and quality while lowering cost, without compromising the sacred trust of patient privacy.

When selecting your partner, look beyond the marketing hype. Demand the BAA. Verify the SOC 2 report. And choose a platform like Aisera that understands that in healthcare, the most important feature of AI isn’t how smart it is; it’s how safe it is.