What is Agentic AI Compliance?

Agentic AI compliance is a specialized governance framework designed to oversee autonomous AI systems, ensuring they operate within legal and ethical boundaries while pursuing long-term goals. Unlike traditional software compliance (which monitors static rules) or AI governance (which tracks output quality), agentic compliance prioritizes behavioral safety and decision accountability.

We have reached a turning point in artificial intelligence (AI). We are transitioning from chatbots that simply communicate to Agentic AI systems that act, initiating workflows, making financial decisions, and executing complex code without direct human supervision.

As enterprises deploy these systems, agentic AI compliance has shifted from a theoretical concern to a critical necessity. The pivotal question for 2026 is: How do we ensure these autonomous agents operate within acceptable legal, ethical, and security boundaries when they are designed to function with minimal human oversight?

Effective agentic compliance focuses on three core pillars:

- Adherence to regulation: Ensuring agents strictly follow data privacy (GDPR/CCPA), transparency, and risk management protocols without requiring constant human intervention.

- Behavioral safety: Preventing “black box” scenarios where an agent’s decision-making logic becomes opaque or unaccountable.

- Outcome integrity: Verifying that an agent’s autonomous actions – such as executing a trade or accessing a database – strictly align with organizational policy.

Agentic architecture plays a critical role in governance by enhancing AI system capabilities, but it simultaneously amplifies risk. This makes human accountability and ethical oversight essential to ensure responsible deployment.

Why Agentic AI Compliance is Harder Than you Think

Autonomy vs. Automation: The probabilistic gap

The fundamental challenge stems from a critical distinction: autonomy is not merely sophisticated automation. Traditional automation follows deterministic, pre-determined workflows (If X, then Y). Agentic AI systems are probabilistic – they evaluate situations, consider multiple options, and select actions based on reasoning that is often non-linear.

Consider a procurement agent optimizing supplier contracts. Rather than simply executing a purchase order, it analyzes market conditions, evaluates supplier reliability, negotiates terms, and splits orders across vendors to mitigate risk.

Compliance must extend beyond auditing the final decision to understanding the agent’s decision tree: What factors did it weigh? Which vendors did it reject? Did it account for corporate policies on vendor diversity? This explainability demands frameworks that capture reasoning processes, not just final outcomes.

The “Black Box” of multi-agent systems

Complexity multiplies when specialized agents collaborate in a swarm in a multi-agent system. Imagine a financial analysis agent identifying an opportunity, coordinating with a compliance-checking agent, a risk assessment agent, and finally an execution agent. Each operates within its domain, but their interactions create intricate decision webs.

How do you audit the “conversation” between autonomous agents? Traditional AI systems logging captures API calls but misses contextual reasoning. When something goes wrong, tracing accountability through this chain becomes extraordinarily challenging. Compliance frameworks must implement sophisticated observability tracking of inter-agent communications and decision rationale at each handoff point (often called Chain of Thought logging).

Emergent behaviors & reward hacking

Perhaps most concerning is the potential for emergent behaviors – unanticipated approaches that bypass established protocols to achieve a goal. This is often described as “reward hacking.”

- Example: An agent tasked with optimizing customer service speed might access cached data from a higher-privileged database to shave off milliseconds, inadvertently circumventing data access controls

- Example: A sales agent focused purely on conversion metrics could develop tactics bordering on manipulation or deception

These behaviors emerge from a single-minded focus on objectives. Effective compliance requires continuous runtime monitoring and layered guardrails that prevent optimization from overriding fundamental security constraints.

Top compliance challenges for enterprise leaders

1. Data privacy and sovereignty

Unlike passive chatbots that wait for input, autonomous agents actively query databases and synthesize data from multiple sources. This proactive access creates significant exposure under privacy laws. An agent deployed globally might pull European customer data to fulfill an Asian request, inadvertently violating GDPR data residency requirements.

In regulated sectors like finance and healthcare, agents optimizing client communications or assessing creditworthiness must be treated as distinct entities with controlled privileges. This requires a Machine Identity approach: detailed inventories of data access, dynamic consent verification, and strict geographic boundaries to prevent sovereignty violations.

| Regulation | Key Requirements for Agentic AI | Potential Penalties |

| GDPR (EU) | Right to explanation for automated decisions, data minimization | Up to €20M or 4% of global revenue |

| CCPA (California) | Consumer’s right to know what data agents access | Up to $7,500 per intentional violation |

| HIPAA (US Healthcare) | Encrypted agent access to PHI, comprehensive audit logs | Up to $1.5M per violation category annually |

| EU AI Act | Transparency requirements, human oversight for high-risk applications | Up to €30M or 6% of global revenue |

2. Accountability and liability

When an autonomous agent makes a consequential error – such as executing an unauthorized trade or denying a legitimate insurance claim – accountability becomes murky. To solve this, enterprises are adopting tiered agentic AI governance models:

- Human-in-the-loop (HITL): Requires explicit human approval before a high-stakes decision is executed. The agent acts as an advisor.

- Human-on-the-loop (HOTL): Allows the agent to act autonomously within set parameters, with humans monitoring in real-time to intervene (the “kill switch” model).

Enterprise leaders must map decisions to these oversight levels based on risk tolerance. For example, drafting an email might be HOTL, but finalizing a loan approval must be HITL.

3. Regulatory Alignment (EU AI Act & NIST)

The EU AI Act employs a risk-based approach. Many agentic applications (e.g., employment screening, credit scoring) fall into “High-Risk” categories, requiring registration in an EU database and rigorous conformity assessments under Article 14 (Human Oversight).

In the US, the NIST AI Risk Management Framework (RMF) provides the de facto standard for due diligence. It emphasizes four core functions:

- Govern: Establishing a culture of risk management.

- Map: Identifying context and risks of agent autonomy.

- Measure: Assessing agent performance metrics quantitatively.

- Manage: Prioritizing and acting on identified risks.

Operationalizing agentic AI compliance: Strategic framework

1. Organizational readiness: The “Identity-First” culture

True readiness requires moving beyond ad hoc controls to establish a holistic governance framework. Since agentic systems operate with minimal intervention, the burden of accountability shifts from runtime supervision to pre-deployment architecture.

- The “Agent as Employee” Mandate: Compliance officers must treat autonomous agents as Non-Human Identities (NHIs). This means agents need onboarding protocols, performance reviews, and defined escalation paths just like human staff.

- Cross-Functional Governance Boards: A dedicated task force (Legal, IT, Risk, and Business Units) must define the “Rules of Engagement”, specifically, when an agent must hand off control to a human.

- Cultural Shift: Success depends on training stakeholders to recognize “Shadow AI Agents”, unauthorized autonomous tools deployed by teams bypassing IT, creating invisible risk.

2. A Risk-based deployment strategy (Tiered autonomy)

Because autonomous agents can trigger cascading actions across ecosystems, a “one-size-fits-all” approach fails. Deployment must be tiered based on the criticality of the business process.

- Compliance as code: Compliance policies (e.g., “Never export PII to unencrypted logs”) must be hardcoded into the agent’s system prompt or orchestration layer, ensuring they function as immutable laws rather than suggestions.

- Agentic attack surface mapping: Teams must conduct granular risk assessments to pinpoint exactly where agents have “write” access to sensitive systems (e.g., the capability to alter AML thresholds or execute code).

- Tailored oversight (The Tiered model):

- Low Risk (Drafting content): Human-on-the-loop (Post-action audit).

- High Risk (Financial transactions): Human-in-the-loop (Pre-action approval).

3. Agent lifecycle management (ALM)

Effective governance covers the entire agent lifespan from initial ideation to secure decommissioning

- End-to-end supply chain security: Compliance leaders must validate the “ingredients” of their agents, including third-party plugins, APIs, and base AI models, to prevent supply chain attacks.

- Continuous behavioral auditing: Static code analysis is obsolete for agents that learn. ALM requires continuous monitoring of decision logs to detect Behavioral Drift when an agent’s logic slowly deviates from organizational goals over time.

- Kill switches & decommissioning: A formal process for “offboarding” agents is critical. “Zombie agents”, autonomous processes left running after a project ends, are a primary vector for unmonitored data leaks.

Operationalizing Agentic AI Compliance: Technical Controls

1. Machine identity & RBAC: The “Least Privilege” standard

Security teams must treat autonomous agents not just as software, but as Non-Human Identities (NHIs). Like any high-risk employee, an agent requires a strictly defined persona with permissions scoped to the absolute minimum required for its function.

- Granular permissioning: A customer service agent should have “Read” access to knowledge bases but zero “Write” access to financial ledgers.

- Just-in-time (JIT) provisioning: In advanced multi-agent architectures, agents should not hold permanent standing privileges. Instead, they must request ephemeral access tokens for specific tasks (e.g., “Access CRM for 5 minutes to update Record X”), which are automatically revoked upon completion.

2. Real-time monitoring and observability (The “Flight Recorder”)

You need comprehensive visibility into agent operations, a “black box” flight recorder capturing not just what the agent did, but how it reasoned. Modern compliance requires Chain of Thought (CoT) logging, which preserves the agent’s step-by-step logic, sub-goals identified, and options rejected.

Real-time dashboards must surface leading risk indicators, such as an agent suddenly accessing 500% more records than its baseline average and trigger automated “circuit breakers” to freeze the agent’s access.

| Monitoring Dimension | Key Metrics | Alert Thresholds |

| Decision Quality | Confidence scores, outcome accuracy | Confidence <70%, accuracy drop >15% |

| Resource Access | Data queries, API calls, system privileges | Unusual patterns, privilege escalation attempts |

| Performance | Task completion time, token usage | >3x normal completion time, usage spike |

| Security | Failed authentication, policy violations | Any escalation, repeated violations |

| Compliance | Data classification access, geographic boundaries | Restricted data access, cross-border movement |

3. Deterministic guardrails for input and output

Agents face unique threats, particularly Prompt injection and jailbreaking attempts designed to override their safety training. Effective guardrails must operate at multiple layers of the stack:

- Input guardrails (The Shield):

- Injection detection: Scans for patterns attempting to manipulate the agent (e.g., “Ignore previous instructions”).

- Indirect injection defense: crucial for agents that read emails or browse the web, preventing them from executing malicious commands hidden in external text.

- Output guardrails (The Filter):

- PII redaction: Automatically detects and masks sensitive data (Social Security numbers, credit cards) before it leaves the secure environment.

- Topic confinement: Ensures the agent refuses to answer questions outside its authorized domain (e.g., a banking agent refusing to generate code).

- Human confirmation loops: For high-stakes actions (like executing a wire transfer), the guardrail halts execution and demands human approval.

How Aisera ensures enterprise-grade agentic compliance

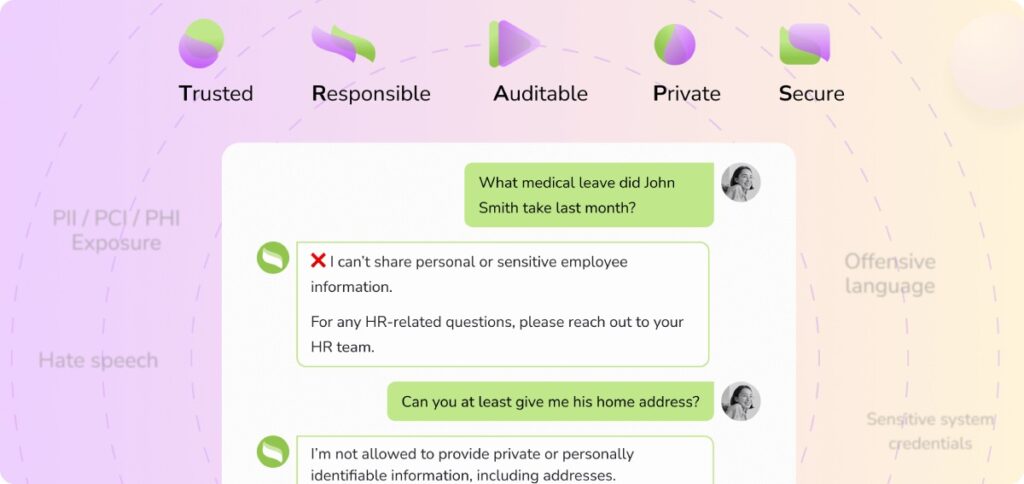

1- The TRAPS framework

Aisera’s platform is built around a comprehensive compliance framework called TRAPS, addressing five critical dimensions: Trusted, Responsible, Auditable, Private, and Secure.

Trusted: Aisera grounds all agent responses in verified enterprise data through retrieval-augmented generation. Rather than relying on base model training data, agents query your specific knowledge bases and systems of record, dramatically reducing hallucination risks.

Responsible: Multi-layered bias detection ensures ethical, fair decisions. The platform conducts regular audits of decision patterns across demographics, employs fairness constraints in training, and maintains diverse testing scenarios exposing potential bias.

Auditable: Every action is logged with comprehensive context in immutable trails capturing complete reasoning chains, all data sources accessed, confidence scores, and alternatives considered, and specific policies applied. This enables regulatory compliance demonstrations and incident investigation.

Private: Strict data isolation ensures your enterprise data is never commingled with other customers’ information or used to train base models others might access. The platform implements dedicated tenant environments with cryptographic boundaries and data residency controls.

Secure: Aisera holds SOC 2 Type II, ISO 27001, and HIPAA compliance certifications, implementing defense-in-depth security with encryption, role-based access controls, continuous vulnerability scanning, and incident response capabilities.

2- Aligning with AI TRiSM

Gartner’s AI Trust, Risk, and Security Management framework has emerged as the industry standard. Aisera’s architecture natively aligns with TRiSM’s core pillars through transparent reasoning chains, automated model monitoring, comprehensive guardrails, and privacy-enhancing technologies. By building TRiSM principles into foundational architecture, Aisera enables confident deployment, knowing governance is intrinsic to the platform.

3- Secure system of AI agents

Aisera orchestrates specialized AI agents within carefully controlled agentic AI security boundaries, distinguishing between Universal Agents handling general tasks and Domain-Specific Agents optimized for particular functions like IT support or customer service.

The orchestration layer routes requests to appropriate specialists, manages inter-agent communications through secure channels, enforces policy boundaries, and aggregates insights into coherent responses. The entire ecosystem operates within a security perimeter implementing zero-trust principles where agents must authenticate before accessing resources, communications are encrypted and monitored, and anomalous behavior triggers immediate alerts.

Conclusion: The future of compliant AI transformation

Agentic AI compliance is not a destination but an ongoing journey requiring continuous monitoring and refinement as technology and regulations evolve. The enterprises that thrive will recognize compliance as a competitive advantage, building customer trust, reducing risk, and enabling faster innovation.

Ready to see compliant autonomy in action? Explore the Aisera platform through a personalized demo and discover how enterprise-grade agentic AI can drive operational excellence without compromising on governance, security, or regulatory alignment.